"Quantifying the Unquantifiable" in Aerospace Electronics and Ergonomics Engineering: Review

Abstract

Today's efforts of aerospace system engineers, as well as of human psychologists, to assure adequate operational reliability of electronic-and-photonic (E&P) products and satisfactory success and safety of a mission or of an extraordinary situation are, as a rule, based on more or less trustworthy statistics and on what is known as best practices. These efforts are typically unquantifiable, i.e., they do not end up with numerical data that enable comparing different possible scenarios of the outcome of a planned undertaking. The objective of this review is to show, using examples from different and sometimes even unconnected areas of aerospace E&P and ergonomics engineering, how probabilistic predictive modeling (PPM), geared to a carefully designed, thoroughly conducted and adequately interpreted, highly focused and highly cost-effective failure-oriented accelerated testing (FOAT), can be employed to quantify what is typically considered "unquantifiable". This includes evaluating the lifetime and the corresponding probability of failure (PoF) of an aerospace E&P system, assessing the role of the human factor (HF), and predicting the outcome of a human-in-the-loop (HITL) related mission or extraordinary situation, when equipment reliability (both hardware and software) and human performance contribute jointly to the never-zero PoF of the mission or situation. The reader is not necessarily expected to "connect the dots" between the different situations and examples provided. The only, but important, feature that these examples have in common is that many aerospace system and ergonomics engineering tasks and problems, which are perceived and treated today as unquantifiable, could and, in the author's opinion, should be quantified to assure a safe and successful outcome of an important aerospace undertaking.

Acronyms

BAZ: Boltzmann-Arrhenius-Zhurkov (model); BIT: Burn-in Testing; COV: Coefficient of Variation; DEPDF: Double Exponential Probability Distribution Function; E&P: Electronic and Photonic; EVD: Extreme Value Distribution; FDR: Flight Data Record; FOAT: Failure Oriented Accelerated Testing; GWB: George Washington Bridge; HALT: Highly Accelerated Life Testing; HCF: Human Capacity Factor; HF: Human Factor; HITL: Human-in-the-Loop; KCAS: Knots Calibrated Air Speed; LGA: La Guardia Airport; MWL: Mental Workload; NTSB: National Transportation Safety Board; PDfR: Probabilistic Design for Reliability; PM: Predictive Modeling; PoF: Probability of Failure; PPM: Probabilistic Predictive Modeling; PRM: Probabilistic Risk Management; QT: Qualification Testing; RAT: Ram Air Turbine; SF: Safety Factor; SM: Safety Margin; TRACON: Terminal Radar Approach Control; TTF: Time to Failure

Introduction

The current efforts led by system engineers to improve the reliability of E&P materials, devices, packages and systems, and by human psychologists in various safety-oriented documents and activities, are, as a rule, qualitative. Assurances of E&P system reliability, even when high operational reliability is crucial, are based on best practices and on highly accelerated life testing (HALT) [1,2], a "black box" that might improve, but does not quantify, reliability. Today's efforts to make missions and hazardous situations successful and safe, including those in which the HF is critical, employ, as a rule, various non-quantified considerations and speculations. The majority of these efforts are of the mental workload (MWL) or situation awareness type [3-11]. In this review, based primarily on the author's recent publications, it is shown how analytical PPM geared to a highly focused FOAT (based on the anticipated bottlenecks of system reliability and human performance) can be effectively employed to "quantify the unquantifiable", i.e., to predict the PoF and the corresponding lifetime of an E&P product and/or of a mission or an off-normal situation, when the role of the HF is critical [12], or when the reliability of the equipment (both hardware and software) and human performance contribute jointly to the never-zero PoF of the considered effort. Accordingly, three major areas are distinguished and addressed with an objective to quantify what lends itself to more or less convincing quantification: 1) aerospace E&P reliability [13-109]; 2) the role of the HF in some more or less typical aerospace situations [110-126]; 3) some HITL related missions and situations, when the instrumentation's reliability and human performance contribute jointly to the outcome of such missions and situations [127-144]. The analyses use analytical modeling and applied probability theory [145-150]. 
It is also briefly indicated [151-154] how some of the developed models could be used to quantify the outcomes of processes or events even beyond the above areas, such as, e.g., the survivability of species in different habitats [154]. The author would like to emphasize again that the "dots" associated with the addressed examples may or may not lend themselves to simple "connection". It is his intent and hope that an aerospace system or ergonomics engineer, after reading the abstract and the introduction, will go through the review and the cited references and will select the analyses pertinent to his/her area of professional interest.

Review

E&P reliability

Today's practices

Some problems envisioned and questions asked: The following problems and questions arise in connection with today's practices in aerospace system and ergonomics engineering:

• E&P products that underwent HALT [1,2,20,22,54,56], passed the existing qualification tests (QTs) and survived burn-in testing (BIT) [94,98,103] (see Table 1 below) nonetheless often exhibit premature field failures. Are these methodologies and practices, and particularly the accelerated test procedures, adequate [26]?

• Do electronic industries need new approaches to qualify their products, and if they do, what should be done differently [45,76]?

• Could the existing practices be improved to such an extent that, if a product passes the reliability tests, there is a way to assure that it will perform satisfactorily in the field?

• In many applications, such as, e.g., aerospace, military, long-haul communications, medical, etc., high reliability of E&P materials and products is particularly imperative. Could the operational (field) reliability of an electronic product be assured, if it is not predicted, i.e., not quantified [41]?

• And if such quantification is found to be necessary, could that be done on the deterministic, i.e. on a non-probabilistic basis [24,35,40]?

• Should electronic product manufacturers keep shooting for an unpredictable and, perhaps, unachievable very long product lifetime, such as, e.g., twenty years or so, or, considering that a new generation of devices appears on the market every five years and that such long-term predictions are quite shaky, to say the least, should the manufacturers settle for a shorter, but well substantiated, predictable and assured lifetime, with an adequate, although never-zero, probability of failure?

• And how should such a lifetime be related to the acceptable (adequate and, if appropriate, even specified) probability of non-failure for a particular product and application?

• Considering that the principle of superposition does not work in reliability engineering, how to establish the list of the crucial accelerated tests, the adequate, i.e., physically meaningful, stressors and their combinations and levels?

• The best engineering product is, as is known, the best compromise between the requirements for its reliability, measurable cost effectiveness and as-short-as-possible time-to-market [47]; in order to make such an optimization possible, the reliability of the product should also be quantified, but how can that be done?

• The bathtub curve [61], the experimental "reliability passport" of a mass-fabricated product, reflects the inputs of two critical irreversible processes: the statistics-of-failure process, which results in a reduced failure rate with time (this is particularly evident from the infant mortality portion of the curve), and the physics-of-failure (aging, degradation) process, which leads to an increased failure rate with time (this trend is explicitly exhibited by the wear-out portion of the bathtub diagram). Could these two critical processes be separated [52]? The need for that is due to the obvious incentive to minimize the role and the rate of aging. This incentive is especially significant for products like lasers, solder joint interconnections and others, which are characterized by long wear-out portions and for which it is economically infeasible to restrict the product's lifetime to the steady-state situation, when the two irreversible processes in question compensate each other [77].

• A related question has to do with the fact that real-time degradation is a very slow process. Could physically meaningful and cost-effective methodologies for measuring and predicting the degradation (aging) rates and their consequences be developed?

In the review that follows, some of the above problems are addressed with an objective to show how the recently suggested PDfR concept [25,36,46,48,53,62-65,67,68,70,73,86,88,96] can be effectively employed for making a viable electronic device into a reliable and marketable product.

Accelerated testing

The shortening of electronic products' design and development time in today's industrial environment does not allow for time-consuming reliability investigations. To get maximum reliability information in minimum time and at minimum cost is the major goal of an electronic product manufacturer. On the other hand, it is impractical to wait for failures, when the lifetime of a typical present-day electronic product is hundreds of thousands of hours, regardless of whether it could or could not be predicted with sufficient accuracy. Accelerated testing is therefore both a must and a powerful means in E&P manufacturing. Different types of such testing are shown, and their features are briefly indicated, in Table 1.

A typical example of product development testing is shear-off testing, conducted when there is a need to determine the most feasible bonding material and its thickness, to assess its bonding strength, and/or to evaluate the shear modulus of this material. HALT is currently widely employed, in different modifications, with an intent to determine the product's reliability weaknesses, assess reliability limits, ruggedize the product by applying elevated stresses (not necessarily mechanical and not necessarily limited to the anticipated field stresses) that could cause field failures, and provide large (although, actually, unknown) safety margins over expected in-use conditions. HALT often involves step-wise stressing, rapid thermal transitions, and other means that enable one to carry out testing in a time- and cost-effective fashion. HALT is sometimes referred to as a "discovery" test. It is not a qualification test, though, i.e., not a "pass/fail" test. It is qualification testing that is the major means for making a viable electronic device into a reliable and marketable product. While many HALT aspects are different for different manufacturers and are often kept as proprietary information, qualification tests (QTs) and standards are the same for the given industry and product type. Burn-in testing (BIT) is post-manufacturing testing. Mass fabrication, no matter how good the design concepts and/or the fabrication technologies are, generates, in addition to desirable and robust ("strong") products, also some undesirable and unreliable ("weak") devices ("freaks"), which, if shipped to the customer, will most likely fail in the field. BIT is supposed to detect and eliminate such "freaks". As a result, the final bathtub curve (BTC) of a product that underwent burn-in supposedly does not contain an infant mortality portion. 
In today's practice, burn-ins (destructive tests for the "freaks" and non-destructive ones for the "healthy" products) are often conducted within the framework of, and concurrently with, HALT.

But are today's practices, based on the above HALT, adequate? A funny, but quite practical, definition of a sufficiently robust electronic product is that, as some reliability managers put it, "reliability is when the customer comes back, not the product". It is well known, however, that E&P products that underwent HALT, passed the existing QTs and survived burn-ins often exhibit premature operational failures. Many reliability engineers think that one crucial shortcoming of today's reliability assurance practices is that they are not based on an understanding of the underlying reliability physics for the particular product, its time in operation and its operation conditions. But how could one understand the physics of failure without running a highly focused and highly cost-effective FOAT? It is clear also that if such testing is considered, it should be geared to a particular adequate, simple, easy-to-use and physically meaningful predictive model. Predictive modeling has proven to be a highly useful means for understanding the physics of failure and designing the most practical accelerated tests in E&P engineering. It has been recently suggested that FOAT should be considered the experimental basis of a new, fruitful, flexible and physically meaningful approach, probabilistic design for reliability (PDfR) of E&P products. This approach is based on ten major requirements ("commandments") that reflect the rationale behind the PDfR concept.

PDfR and its "ten commandments"

The PDfR concept is an effective means for improving the state of the art in the E&P reliability field by quantifying, on a probabilistic basis, the operational reliability of a material or a product, i.e., by predicting the probability of its inevitable failure under the given loading conditions and after the given service time, and by using this probability as a suitable and physically meaningful criterion of the product's expected performance. The following ten major governing principles ("commandments") reflect the rationale behind the PDfR concept:

1) When reliability is imperative, ability to predict it is a must; reliability cannot be assured, if it is not quantified;

2) Such a quantification should be done on the probabilistic basis; nothing is perfect; the difference between a highly reliable and an insufficiently reliable product is "merely" in the level of their never-zero probability of failure;

3) Reliability evaluations cannot be delayed until the product is made and should start at the design stage; reliability should be taken care of, however, at all the significant stages of the product's life: at the design stage, when reliability is conceived; at the accelerated testing stage; at the production/manufacturing stage; and, if necessary and appropriate, it should be maintained in the field during the product's operation;

4) Product's reliability cannot be low, but need not be higher than necessary either: it has to be adequate for the given product and application, considering its lifetime, environmental conditions and consequences of failure;

5) The best product is the best compromise between the requirements for its reliability, cost effectiveness and time-to-market; obviously, such a compromise cannot be achieved if reliability is not quantified;

6) One cannot design a product with quantified, assured and optimized reliability by limiting the effort to HALT; understanding the underlying physics of failure is crucial, and therefore highly cost-effective and highly focused FOAT should be considered and conducted as a possible and natural extension of HALT;

7) FOAT, unlike HALT, is a "white/transparent box" aimed at understanding the physics of failure and quantifying the E&P product's reliability; it should be geared to a small number of pre-determined, simple, easy-to-use and physically meaningful predictive reliability models (constitutive equations) and should be viewed as the experimental basis and an important constituent part of the PDfR effort;

8) Physically meaningful, easy-to-use and flexible multi-parametric Boltzmann-Arrhenius-Zhurkov (BAZ) equation can be used as a suitable model for the assessment of the lifetime and the corresponding probability-of-failure of an E&P product;

9) Predictive modeling, not limited to FOAT model(s), is a powerful means to carry out, if necessary, various sensitivity analyses (SA) aimed at quantification and optimization of the E&P product reliability;

10) Consideration of the role of the human factor is highly desirable in the PDfR effort: not only "nothing", but also "nobody" is perfect, and the ability to consider and possibly quantify the role of the human factor (HF) in assessing the likelihood of adequate performance of a product is often critical.

FOAT ("transparent box") could be viewed as an extension of HALT ("black box")

A highly focused and highly cost-effective FOAT is the experimental foundation and the "heart" of the PDfR concept. FOAT [148,56,58,62,84,92,93] should be conducted in addition to and, in some cases, even instead of HALT, especially for new products, whose operational reliability is unclear, for which no experience has been accumulated, and for which no best practices or HALT methodologies have yet been developed. Predictions based on FOAT and the subsequent PPM might not be perfect, at least at the beginning, but it is still better to pursue this effort than to turn a blind eye to the fact that there is always a non-zero probability of the product's failure.

Understanding the underlying reliability physics for the product performance is critical. If one sets out to understand the physics of failure in an attempt to create, in accordance with the "principle of practical confidence", a failure-free product, then conducting a FOAT type of an experiment is imperative. FOAT's objective is to confirm the usage of a particular more or less well established predictive reliability model, to confirm (say, after HALT is conducted) the physics of failure, and establish the numerical characteristics (activation energy, time constant, sensitivity factors, etc.) of the particular FOAT model of interest.

FOAT could be viewed as an extension of HALT. While HALT is a "black box", i.e., a methodology which can be perceived in terms of its inputs and outputs without a clear knowledge of the underlying physics and the likelihood of failure, FOAT, on the other hand, is a "transparent box", whose main objective is to confirm the use of a particular reliability model that reflects a specific anticipated failure mode and is aimed at quantifying the probability of failure. The FOAT based approach could be viewed as a quantified and reliability physics oriented HALT.

The FOAT approach should be geared to a particular technology and application, with consideration of the most likely stressors. The major assumption is, of course, that the FOAT model should be valid both in accelerated testing and in actual operation conditions. While HALT does not measure (does not quantify) reliability, FOAT does. HALT can therefore be used for "rough tuning" of a product's reliability, and FOAT could and should be employed when "fine tuning" is needed, i.e., when there is a need to quantify, assure and even specify the operational reliability of a product.

HALT tries to "kill many unknown birds with one (also not very well known) stone". There is a general perception that HALT might be able to quickly precipitate and identify failures of different origins. HALT has demonstrated, however, over the years its ability to improve robustness through a "test-fail-fix" process, in which the applied stresses (stimuli) are somewhat above the specified operating limits. This "somewhat above" is based, however, on intuition rather than on calculation. FOAT and HALT could be carried out separately, or might be partially combined in a particular accelerated test effort. Since the principle of superposition does not work in reliability engineering, both HALT and FOAT use, when appropriate, combined stressing under various stimuli (stressors). It is always necessary to correctly identify the expected failure modes and mechanisms, and to establish the appropriate stress limits of HALTs and FOATs with an objective to prevent "shifts" in the dominant failure mechanisms. There are many ways in which this could be done (see, e.g., [35]).

New products present natural reliability concerns, as well as significant challenges at all the stages of their design, manufacture and use. An appropriate combination of HALT and FOAT efforts could be especially useful for ruggedizing and quantifying reliability of such products.

Deterministic and probabilistic approaches in the design for reliability of electronic products: Design for reliability is, as is known, a set of approaches, methods and best practices that are supposed to be used at the design stage of the electronic product to minimize the risk that the fabricated product might not meet the reliability objectives and customer expectations.

When a deterministic approach is used, the reliability of a product could be based on the belief that a sufficient reliability level will be assured if a high enough safety factor (SF) is used. The deterministic SF is defined as the ratio $C/D$ of the capacity ("strength") $C$ of the product to the demand ("stress") $D$. In the PDfR approach, the safety margin $S = C - D$ is treated as a random variable, and the SF is introduced as the ratio of the mean value $\bar{s}$ of the safety margin to its standard deviation $\hat{s}$, so that the probabilistic safety factor is evaluated as $\eta = \bar{s}/\hat{s}$. When the random time-to-failure (TTF) is of interest, the SF can be found as the ratio of the MTTF to the standard deviation of the TTF. The use of the SF as a measure of the probability of failure (PoF) is more convenient than the direct use of the PoF itself. This is because, for highly reliable and, hence, typical electronic products, the probability of non-failure is expressed by a number that is very close to one, and, for this reason, even significant changes in the product's design, with an appreciable impact on its reliability, might have a minor effect on the level of this probability, at least the way it appears to, and is perceived by, the user. The SF tends to infinity when the probability of non-failure tends to one. The acceptable PoF (and hence the level of the SF) should be chosen depending on the experience, anticipated operation conditions, possible consequences of failure, acceptable risks, the available and trustworthy information about the capacity and the demand, the accuracy with which the capacity and the demand are determined, possible costs and social benefits, information on the variability of materials and structural parameters, fabrication technologies and procedures, etc.
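To make the link between the probabilistic SF and the probability of non-failure concrete, here is a minimal Python sketch. It assumes (an assumption not stated in the text) that the capacity $C$ and the demand $D$ are independent and normally distributed, so that the safety margin $S = C - D$ is normal too and $P(S > 0)$ equals the standard normal distribution function evaluated at the SF. The function name and the input numbers are hypothetical.

```python
import math


def probabilistic_safety_factor(mean_c, std_c, mean_d, std_d):
    """Return (SF, probability of non-failure) for the safety margin S = C - D.

    Hypothetical helper: assumes independent, normally distributed capacity C
    and demand D, so S is normal with mean mean_c - mean_d and standard
    deviation sqrt(std_c**2 + std_d**2).
    """
    s_mean = mean_c - mean_d            # mean value of the safety margin
    s_std = math.hypot(std_c, std_d)    # standard deviation of the safety margin
    sf = s_mean / s_std                 # probabilistic safety factor
    # P(S > 0) = Phi(SF), expressed through the error function
    p_nonfailure = 0.5 * (1.0 + math.erf(sf / math.sqrt(2.0)))
    return sf, p_nonfailure


if __name__ == "__main__":
    # Hypothetical capacity/demand statistics in arbitrary consistent units
    sf, p = probabilistic_safety_factor(100.0, 8.0, 60.0, 6.0)
    print(f"SF = {sf:.2f}, P = {p:.6f}")  # SF = 4.00, P = 0.999968
    # The SF keeps growing while P creeps ever closer to one
    for eta in (1, 2, 3, 4, 5):
        print(eta, 0.5 * (1.0 + math.erf(eta / math.sqrt(2.0))))
```

The printed table illustrates the argument in the text: going from an SF of 4 to an SF of 5 is a clear design signal, while the probability of non-failure changes only in its fifth decimal place.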

Some simple PDfR examples

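Adequate heat sink: Consider a device whose steady-state operation is determined by the Arrhenius equation $\tau = \tau_0 \exp\left(U_0/kT\right)$. The probability of non-failure can be found using the exponential law of reliability as

$$P = \exp\left(-\frac{t}{\tau}\right) = \exp\left[-\frac{t}{\tau_0}\exp\left(-\frac{U_0}{kT}\right)\right].$$

Solving this equation for the absolute temperature $T$, we have [67]:

$$T = \frac{U_0/k}{\ln\left(-\dfrac{t}{\tau_0 \ln P}\right)}.$$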

Addressing, e.g., a failure related to surface charge accumulation, for which the ratio $U_0/k$ of the activation energy to Boltzmann's constant is known, and using the FOAT-predicted time factor $\tau_0$ (in hours) and the customer-required probability of failure $Q = 1 - P$ at the end of the device's service time $t$ (in hours), the above formula yields the highest allowable absolute temperature $T$ of the device. The heat sink should be designed accordingly, and the vendor should be able to deliver such a heat sink. The situation changes for the worse if the temperature of the device changes, especially in a random fashion. This situation can also be predicted by a simple probabilistic analysis, which is, however, beyond the scope of this review (see [72]).
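A small Python sketch of the heat sink calculation follows. It assumes the Arrhenius MTTF $\tau = \tau_0 \exp(U_0/kT)$ and the exponential law $P = \exp(-t/\tau)$, which give $T = (U_0/k)/\ln\left(-t/(\tau_0 \ln P)\right)$, and it checks the result by plugging $T$ back into $P$. The function names and all input values are hypothetical and were chosen only for illustration.

```python
import math


def max_operating_temperature(u0_over_k, tau0_hours, service_hours, pof):
    """Highest absolute temperature T (K) that meets a required PoF at end of service.

    Assumes tau = tau0 * exp(U0 / (k T)) and P = exp(-t / tau), so that
    T = (U0/k) / ln(-t / (tau0 * ln P)) with P = 1 - PoF.
    """
    ln_p = math.log1p(-pof)  # ln(1 - PoF), accurate even for a tiny PoF
    return u0_over_k / math.log(-service_hours / (tau0_hours * ln_p))


def nonfailure_probability(u0_over_k, tau0_hours, t_hours, temperature_k):
    """P(t) = exp(-t / tau) with the Arrhenius MTTF tau = tau0 * exp(U0 / (k T))."""
    tau = tau0_hours * math.exp(u0_over_k / temperature_k)
    return math.exp(-t_hours / tau)


if __name__ == "__main__":
    # Hypothetical inputs: U0/k = 11600 K (about 1 eV), tau0 = 2e-5 h,
    # 40,000 h of service and a required PoF of 1e-5
    T = max_operating_temperature(11600.0, 2e-5, 40_000.0, 1e-5)
    print(f"T = {T:.1f} K ({T - 273.15:.1f} C)")  # about 352 K, roughly 79 C
    # Round trip: at this temperature the predicted PoF matches the requirement
    print(1.0 - nonfailure_probability(11600.0, 2e-5, 40_000.0, T))
```

`math.log1p` is used rather than `math.log(1 - pof)` because the required PoF is typically very small, and `log1p` avoids losing significant digits in that regime.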

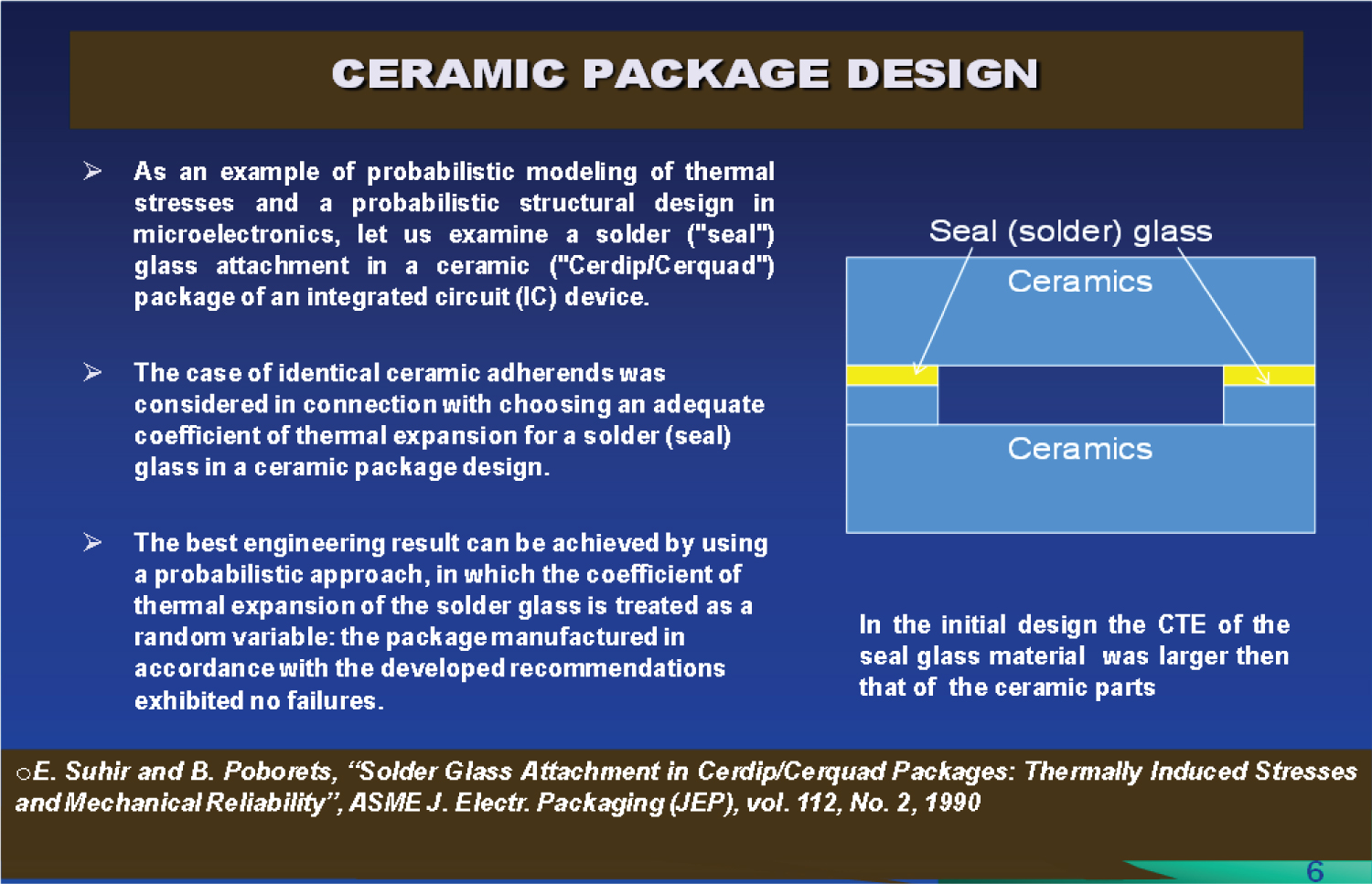

Reliable seal glass: The maximum interfacial shearing stress in the thin solder glass layer (Figure 1) can be computed by the formula [15] $\tau_{\max} = k h_g \sigma_{\max}$. Here $k = \sqrt{\lambda/\kappa}$ is the parameter of the interfacial shearing stress; $\lambda = \frac{1-\nu_c}{E_c h_c} + \frac{1-\nu_g}{E_g h_g}$ is the assembly's axial compliance, calculated as the sum of the axial compliances of its two constituents ($E_c$, $\nu_c$, $h_c$ and $E_g$, $\nu_g$, $h_g$ being the Young's moduli, Poisson's ratios and thicknesses of the ceramics and the glass); $\kappa = \frac{h_c}{3G_c} + \frac{h_g}{3G_g}$ is its interfacial compliance; $G_c$ and $G_g$ are the shear moduli of the ceramics and the glass materials; $\sigma_{\max} = \frac{\Delta\alpha\,\Delta t}{\lambda h_g}$ is the maximum normal stress in the mid-portion of the glass layer; $\Delta t$ is the change in temperature from the soldering temperature to the low (room or testing) temperature; $\Delta\alpha = \alpha_c - \alpha_g$ is the difference in the effective coefficients of thermal expansion (CTEs) of the ceramics and the glass; $\alpha_{c,g} = \frac{1}{t - t_0}\int_{t_0}^{t}\alpha_{c,g}(t')\,dt'$ are these coefficients for the given temperature $t$; $t_0$ is the annealing (zero-stress, set-up) temperature; and $\alpha_{c,g}(t)$ are the temperature-dependent CTEs of the materials in question. In an approximate analysis one could assume that the axial compliance $\lambda$ of the assembly is due to the glass only, so that $\lambda \approx \frac{1-\nu_g}{E_g h_g}$, and therefore the maximum normal stress in the solder glass is $\sigma_{\max} \approx \frac{E_g}{1-\nu_g}\Delta\alpha\,\Delta t$. While the geometric characteristics of the assembly, the change in temperature and the elastic constants of the materials can be determined with high accuracy, this is not the case for the difference in the CTEs of the brittle glass and ceramic materials. In addition, because of the obvious incentive to minimize this difference, the mismatch is characterized by a small difference of close and appreciable numbers. This contributes to the uncertainty of the problem in question and justifies the application of the probabilistic approach.

Treating the CTEs of the two materials as normally distributed random variables, we evaluate the probability $P$ that the thermal interfacial shearing stress is compressive (negative) and, in addition, does not exceed a certain allowable level $[\sigma]$ [15]. Since this stress is proportional to the normal stress in the glass layer, which is, in its turn, proportional to the difference between the CTEs of the ceramics and the glass materials, one wants to make sure that the requirement

$$0 \le \psi = \alpha_c - \alpha_g \le \psi_* = \frac{1-\nu_g}{E_g\,\Delta t}\,[\sigma]$$

takes place with a very high probability. For normally distributed random variables $\alpha_c$ and $\alpha_g$, the variable $\psi$ is also distributed in accordance with the normal law, with the mean value $\bar{\psi} = \bar{\alpha}_c - \bar{\alpha}_g$ and the standard deviation $\sqrt{D_\psi} = \sqrt{D_c + D_g}$, where $\bar{\alpha}_c$ and $\bar{\alpha}_g$ are the mean values of the materials' CTEs, and $D_c$ and $D_g$ are their variances. The expression

$$P = \frac{1}{2}\left[\operatorname{erf}\left(\frac{\gamma}{\sqrt{2}}\right) + \operatorname{erf}\left(\frac{\gamma_*}{\sqrt{2}}\right)\right]$$

defines the probability that the above condition takes place. Here

$$\operatorname{erf}(x) = \frac{2}{\sqrt{\pi}}\int_0^x e^{-z^2}\,dz$$

is the error function, $\gamma = \bar{\psi}/\sqrt{D_\psi}$ is the safety factor (SF) for the CTE difference and $\gamma_* = (\psi_* - \bar{\psi})/\sqrt{D_\psi}$ is the SF for the acceptable level of the allowable stress. Consider, e.g., a solder glass with given elastic constants $E_g$ and $\nu_g$, a sealing (fabrication) temperature of 485 ℃ and a lowest (testing) temperature of -65 ℃ (so that Δt = 550 ℃), with known predicted effective CTEs of the glass and the ceramics at this temperature, known standard deviations of these CTEs, and an (experimentally determined) ultimate compressive strength $\sigma_u$ of the given glass material. With an acceptable SF of, say, 4, we have $[\sigma] = \sigma_u/4$, and the allowable level $\psi_*$ of the CTE-difference parameter follows from the above requirement.

The mean value and the variance of the CTE difference are $\bar{\psi}$ and $D_\psi = D_c + D_g$, respectively. These yield the predicted actual and acceptable SFs $\gamma$ and $\gamma_*$ associated with the thermal mismatch of the materials in question, and the error-function expression for $P$ then gives the probability of non-failure of the seal glass material.

Note that if the standard deviations of the materials' CTEs were smaller, the SFs of importance would be much higher, and the probability of non-failure would be correspondingly closer to one.
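The seal glass evaluation can be sketched in Python as follows. The sketch assumes normally distributed CTEs and the approximate glass-only relation $\sigma = E_g\,\psi\,\Delta t/(1-\nu_g)$. It converts the allowable stress $\sigma_u/\text{SF}$ into an allowable CTE difference $\psi_*$ and then evaluates $P = \frac{1}{2}[\operatorname{erf}(\gamma/\sqrt{2}) + \operatorname{erf}(\gamma_*/\sqrt{2})]$. The function name and every numerical input (moduli in MPa, CTEs in 1/℃) are hypothetical illustration values, not data from [15].

```python
import math


def seal_glass_nonfailure(mean_ac, std_ac, mean_ag, std_ag,
                          e_glass, nu_glass, delta_t, sigma_u, strength_sf):
    """Probability that 0 <= psi = alpha_c - alpha_g <= psi_* for normal CTEs.

    Hypothetical helper. Uses sigma = E_g * psi * delta_t / (1 - nu_g) to turn
    the allowable stress sigma_u / strength_sf into an allowable psi_*.
    Returns (gamma, gamma_star, P).
    """
    allowable_stress = sigma_u / strength_sf
    psi_star = (1.0 - nu_glass) * allowable_stress / (e_glass * delta_t)
    psi_mean = mean_ac - mean_ag                  # mean CTE difference
    psi_std = math.hypot(std_ac, std_ag)          # sqrt(D_c + D_g)
    gamma = psi_mean / psi_std                    # SF for the CTE difference
    gamma_star = (psi_star - psi_mean) / psi_std  # SF for the allowable stress
    p = 0.5 * (math.erf(gamma / math.sqrt(2.0))
               + math.erf(gamma_star / math.sqrt(2.0)))
    return gamma, gamma_star, p


if __name__ == "__main__":
    # Hypothetical inputs; delta_t = 485 - (-65) = 550 degC as in the text
    common = dict(e_glass=66_000.0, nu_glass=0.27, delta_t=550.0,
                  sigma_u=550.0, strength_sf=4.0)
    print(seal_glass_nonfailure(7.2e-6, 0.25e-6, 6.7e-6, 0.25e-6, **common))
    # Smaller CTE scatter gives higher SFs and a P closer to one
    print(seal_glass_nonfailure(7.2e-6, 0.10e-6, 6.7e-6, 0.10e-6, **common))
```

Comparing the two printed lines shows the trend noted in the text: narrowing the CTE scatter raises both SFs, and $P$ moves toward one.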

Extreme response to temperature cycling: Let an electronic device be operated in temperature cycling conditions, and let the random amplitude of the induced thermal stress, when a single cycle is applied, be distributed in accordance with the Rayleigh law, so that the probability density function of the stress amplitude is

$$f(r) = \frac{r}{D_x}\exp\left(-\frac{r^2}{2D_x}\right), \qquad r \ge 0,$$

where $D_x$ is the variance of the underlying normally distributed process. And what is the most likely extreme value of this amplitude for a large number $n$ of cycles? The probability density function and the probability distribution function for the extreme value $Y_n$ of the stress amplitude are expressed as

$$g(y_n) = n f(y_n)\left[F(y_n)\right]^{n-1}$$

and

$$G(y_n) = \left[F(y_n)\right]^{n},$$

respectively (see, e.g., [145]), where $F(r) = 1 - \exp\left(-r^2/2D_x\right)$ is the Rayleigh distribution function. Considering the second formula, the first one results in the following expression for the probability density function:

$$g(y_n) = \frac{n}{\sqrt{D_x}}\,\zeta\, e^{-\zeta^2/2}\left(1 - e^{-\zeta^2/2}\right)^{n-1},$$

where $\zeta = y_n/\sqrt{D_x}$ is the sought dimensionless amplitude of the induced thermal stress. Applying the condition $dg/d\zeta = 0$, the following equation can be obtained:

$$e^{\zeta^2/2} = \frac{n\zeta^2 - 1}{\zeta^2 - 1} = n + \frac{n-1}{\zeta^2 - 1}.$$

If the number $n$ of cycles is significant, the second term in this expression is small in comparison with the first term and can be omitted, so that $e^{\zeta^2/2} \approx n$ and, hence, $\zeta \approx \sqrt{2\ln n}$. As evident from this result, the ratio of the extreme response $y_n$, after $n$ cycles are applied, to the most likely response $\sqrt{D_x}$, when a single cycle is applied, is $\sqrt{2\ln n}$. This ratio is 3.2552 for 200 cycles, 3.7169 for 1000 cycles, and 4.1273 for 5000 cycles. With, say, one cycle per day, these correspond to about 6.7 months, 2.8 years, and 13.7 years.
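The following Python sketch compares the large-$n$ estimate $\zeta \approx \sqrt{2\ln n}$ with a numerical solution of the full stationarity condition $(n\zeta^2 - 1)e^{-\zeta^2/2} = \zeta^2 - 1$ for $\zeta > 1$. Bisection is used only as a simple, dependable root finder; the function names are hypothetical.

```python
import math


def extreme_ratio_asymptotic(n):
    """Large-n estimate zeta ~ sqrt(2 ln n) of the most likely extreme amplitude."""
    return math.sqrt(2.0 * math.log(n))


def extreme_ratio_exact(n, tol=1e-12):
    """Mode of the extreme-value density for n >= 2 Rayleigh cycles, by bisection.

    Solves (n*z**2 - 1) * exp(-z**2 / 2) - (z**2 - 1) = 0 for z > 1. The left-hand
    side is positive just above z = 1 and negative at z = 10 for any practical n.
    """
    def f(z):
        z2 = z * z
        return (n * z2 - 1.0) * math.exp(-z2 / 2.0) - (z2 - 1.0)

    lo, hi = 1.0 + 1e-9, 10.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if f(mid) > 0.0:
            lo = mid  # root lies to the right of mid
        else:
            hi = mid  # root lies to the left of mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    for n in (200, 1000, 5000):
        print(n, round(extreme_ratio_asymptotic(n), 4), round(extreme_ratio_exact(n), 4))
```

The asymptotic column reproduces the ratios quoted in the text (3.2552, 3.7169, 4.1273). The exact roots come out slightly larger, by about one percent or less for these $n$, because the dropped term $(n-1)/(\zeta^2-1)$ is positive.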

BAZ model

Possible way to quantify and assure reliability: The simplest Boltzmann-Arrhenius-Zhurkov (BAZ) equation [13,38,39,50,65,71]

$$\tau = \tau_0 \exp\left(\frac{U_0 - \gamma\sigma}{kT}\right)$$

was suggested in 1957 by the Russian physicist S.N. Zhurkov, in application to experimental fracture mechanics, as a generalization of the Arrhenius equation

$$\tau = \tau_0 \exp\left(\frac{U_0}{kT}\right),$$

introduced in 1889 by the Swedish chemist S. Arrhenius in the kinetic theory of chemical reactions (1903 Nobel Prize in Chemistry). The BAZ and Arrhenius equations consider the role of the ratio of the activation energy $U_0$ (this term was coined by Arrhenius to characterize a material's propensity to get engaged in a chemical reaction) to the thermal energy $kT$, determined as the product of Boltzmann's constant $k$ and the absolute temperature $T$. In these equations, $\tau$ is interpreted as the mean time to failure (MTTF), $\tau_0$ is the experimental time constant, $\sigma$ is the applied stress per unit volume, and $\gamma$ is the sensitivity factor. The Arrhenius equation is formally no different from what is known as Boltzmann's, or Maxwell-Boltzmann's, equation in the kinetic theory of gases. This equation postulates that the absolute temperature of an ideal gas, when it is in thermodynamic equilibrium with the environment, is determined by the average probability of the collisions of the gas particles (atoms or molecules). The chemist Arrhenius was a member of the physicist Boltzmann's team at the University of Graz, Austria, in 1887 and suggested that Boltzmann's equation be used to assess the significance of the energy barrier, the activation energy, that has to be overcome in order to trigger a chemical reaction. 
Although the Arrhenius equation has been criticized over the years on several grounds (it has been argued, particularly, that the activation energy might not be a constant property of a material, but might be time- and/or temperature-dependent), it is still widely used, mostly because of its simplicity, in numerous applied science applications, when it is believed that it is the elevated temperature that is primarily responsible for the duration of the useful lifetime of a material or a device of interest.

The effective activation energy $U = U_0 - \gamma\sigma$ plays in the BAZ equation the same role as the stress-free energy $U_0$ plays in the Arrhenius equation. It has been recently shown [39] that these equations can be obtained as steady-state solutions to the Fokker-Planck equation in the theory of Markovian processes (see, e.g., [145]) and that these solutions represent the worst case scenarios, so that the predictions based on the steady-state BAZ model are reasonably conservative and, hence, advisable in engineering applications.

Zhurkov and his associates used the BAZ equation to determine the fracture toughness of a large number of materials experiencing combined action of elevated temperature and external mechanical loading. While Arrhenius equation, when used to determine the lifetime of a solid, considers only the effect of the elevated temperature on its lifetime, BAZ equation takes into account also the role of the applied mechanical stress. While the elevated temperature affects the long-term reliability of the material (its aging/degradation), the mechanical stress might cause its short-term failure. In addition, in Zhurkov's tests the loading was always a constant mechanical tensile stress, and, because fracture mechanics does not address the initiation of cracks, but only their propagation, the test specimens were always notched ones. It has been recently suggested [53-55] that when the performance of an electronic or a photonic material is considered, any other loading of importance (voltage, current, thermal stress, humidity, vibrations, radiation, light output, etc.) can also be used as an appropriate stressor/stimulus, and, since the superposition principle cannot be employed in reliability engineering, that even a combination of relevant stimuli can be considered, so that a multi-parametric BAZ equation could be employed to evaluate the lifetime of a material or a product.
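A minimal Python sketch of a multi-parametric BAZ evaluation is shown below. It assumes that the contributions of several stressors enter additively in the effective activation energy, $\tau = \tau_0 \exp\left[(U_0 - \sum_i \gamma_i \sigma_i)/kT\right]$. This is one natural way to write the multi-parametric form, not necessarily the exact form used in the cited works. With no stressors it reduces to the Arrhenius equation. The stressor values and sensitivity factors in the usage example are hypothetical, and each product $\gamma_i \sigma_i$ is taken to be in eV.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann's constant in eV/K


def baz_mttf(tau0, u0_ev, temperature_k, stresses=(), sensitivities=()):
    """MTTF from an assumed additive multi-parametric BAZ form.

    tau = tau0 * exp((U0 - sum(gamma_i * sigma_i)) / (k * T)); each product
    gamma_i * sigma_i must be in eV. With no stressors this is Arrhenius.
    """
    if len(stresses) != len(sensitivities):
        raise ValueError("each stressor needs its own sensitivity factor")
    effective_u = u0_ev - sum(g * s for g, s in zip(sensitivities, stresses))
    return tau0 * math.exp(effective_u / (BOLTZMANN_EV_PER_K * temperature_k))


if __name__ == "__main__":
    # Hypothetical: tau0 = 2e-5 h, U0 = 1.0 eV, T = 350 K
    arrhenius = baz_mttf(2e-5, 1.0, 350.0)
    # Two hypothetical stressors (e.g. voltage in V, relative humidity in %)
    # with made-up sensitivity factors in eV per unit of stressor
    combined = baz_mttf(2e-5, 1.0, 350.0, stresses=(5.0, 85.0),
                        sensitivities=(0.02, 0.001))
    print(arrhenius, combined, combined / arrhenius)
```

In a FOAT program, $\tau_0$, $U_0$ and the $\gamma_i$ would be the quantities fitted from the test data; here they are simply inputs.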

The use of the BAZ equation has been suggested as a possible physics-of-failure-oriented kinetic model in connection with the development of the PDfR concept [25,36,46,48,53,62-65,67,68,70,73,86,88,96] for E&P materials, devices, assemblies, packages and systems, to quantify, on a probabilistic basis, the operational lifetime of an E&P product using the results of highly focused and highly cost-effective failure-oriented accelerated testing (FOAT) [22,48,56,58,62]. The multi-parametric BAZ equation has recently been applied to several critical E&P reliability-physics problems. Examples are: an electronic package subjected to the combined action of two or more stressors (say, elevated humidity and voltage) [22]; the three-step concept (TSC) in reliability modeling [50]; static fatigue (delayed fracture) of optical silica fibers [71]; low-cycle fatigue of solder joint interconnections [80]; long-term reliability of IC devices [83,85,86,88,91,96]; and BIT [94,98,102] in E&P manufacturing.

The quantity $\tau$ in Boltzmann's equation and in the BAZ model,

$$\tau = \tau_0 \exp\left(\frac{U_0 - \gamma\sigma}{kT}\right),$$

is viewed as the mean time to failure (MTTF). This suggests that, when the exponential law of reliability, which defines the probability of non-failure as

$$P = \exp\left(-\frac{t}{\tau}\right) = \exp\left[-\frac{t}{\tau_0}\exp\left(-\frac{U_0 - \gamma\sigma}{kT}\right)\right],$$

is used, the MTTF corresponds to the moment of time when the entropy $H(P) = -P\ln P$ of this double-exponential distribution reaches its maximum value. Indeed, from the condition

$$\frac{dH(P)}{dP} = -(\ln P + 1) = 0$$

it follows that the function $H(P)$ reaches its maximum for the probability of non-failure $P = e^{-1}$. In such a situation the above distribution yields $t = \tau$. Comparing this result with the Arrhenius or BAZ equation, one concludes that the MTTF expressed by this equation corresponds to the moment of time when the entropy of the process is the largest and is equal to $H(e^{-1}) = e^{-1}$ as well.
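The entropy argument can be checked numerically. The sketch below is a hypothetical illustration with $\tau = 1$ in arbitrary time units: it scans $t$ on a grid, evaluates $H(P) = -P\ln P$ for $P = \exp(-t/\tau)$, and reports where the entropy peaks, which should be at $t \approx \tau$ with $H \approx e^{-1}$.

```python
import math


def entropy(p):
    """Entropy H(P) = -P ln P of the probability of non-failure P."""
    return -p * math.log(p)


tau = 1.0                                     # MTTF, arbitrary time units
times = [i * 1e-3 for i in range(1, 5001)]    # grid 0.001 ... 5.0
t_peak = max(times, key=lambda t: entropy(math.exp(-t / tau)))
h_peak = entropy(math.exp(-t_peak / tau))

print(f"entropy peaks at t = {t_peak:.3f} (tau = {tau})")
print(f"peak entropy = {h_peak:.6f}, e^-1 = {math.exp(-1):.6f}")
```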

Note that the above formulation of entropy is different from both Boltzmann's and Shannon's formulations. Boltzmann's entropy in thermodynamics is a quantitative measure of disorder, or of the energy in a system that is unavailable to do work. Shannon's entropy in communication theory, $-\sum_i p_i \log p_i$, is the average information content per character, where $p_i$ is the probability of the $i$-th character appearing in the stream of characters of the message. It has recently been demonstrated [154] that the entropy definition used here could also be employed in some human psychology problems, when there is a need to quantify the role of the human capacity factor, particularly the role of trust in, and trustworthiness of, an individual, a concept or a technology.

From the BAZ equation, considering that the probability of failure is $Q = 1 - P = 1 - \exp(-t/\tau)$, we have

$$\frac{dQ}{dt} = \frac{P}{\tau} = \frac{H(P)}{t},$$

where $H(P) = -P\ln P$. This relationship explains the physical meaning of the BAZ equation: the rate of degradation (aging) of the material or of the population of E&P products of interest is proportional to the entropy of the process and inversely proportional to time.
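A quick numerical check of the relation $dQ/dt = H(P)/t$, assuming the exponential law $P = \exp(-t/\tau)$ with a hypothetical $\tau = 2$ (arbitrary units), compares a central finite-difference derivative of $Q$ with $H(P)/t$ at a few instants:

```python
import math


def degradation_rate_check(t, tau, step=1e-6):
    """Return (dQ/dt by central difference, H(P)/t) for P = exp(-t/tau)."""
    def q(s):
        return 1.0 - math.exp(-s / tau)       # probability of failure Q = 1 - P

    dq_dt = (q(t + step) - q(t - step)) / (2.0 * step)
    p = math.exp(-t / tau)
    entropy_over_t = -p * math.log(p) / t     # H(P) / t
    return dq_dt, entropy_over_t


results = {t: degradation_rate_check(t, tau=2.0) for t in (0.5, 1.0, 3.0)}
for t, (rate, h_over_t) in results.items():
    print(f"t = {t}: dQ/dt = {rate:.8f}, H(P)/t = {h_over_t:.8f}")
```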

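It has been suggested (see, e.g., [25]) that, when information (experimental data) about the lifetime of a particular E&P material or device under the given environmental/test conditions is available, the time constant $\tau_0$ in the above distribution could be replaced, with subsequent reliability evaluations in mind, by the quantity $1/(\gamma_C C)$, where $C$ is a suitable criterion of failure (such as, say, elevated leakage current or high electrical resistance in FOAT in E&P engineering) and $\gamma_C$ is the corresponding sensitivity factor. Then the double-exponential distribution for the probability of non-failure can be replaced by the expression

$$P = \exp\left[-\gamma_C C t \exp\left(-\frac{U_0 - \gamma\sigma}{kT}\right)\right],$$

where $t$ is time. In the case of multiple FOAT stressors $\sigma_i$, $i = 1, \dots, n$, with sensitivity factors $\gamma_i$, this expression can be generalized as

$$P = \exp\left[-\gamma_C C t \exp\left(-\frac{U_0 - \sum_{i=1}^{n}\gamma_i\sigma_i}{kT}\right)\right].$$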

It should be emphasized that the sum in this expression does not mean that the superposition principle is used. It is just a convenient way to account for the contributions of different loadings to the outcome of FOAT.
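The generalized multi-stressor expression translates directly into a short function. This is a sketch under the assumption that all energies are in eV and that each stressor is passed as a (sensitivity factor, stress level) pair; the function and parameter names, and the numbers in the usage lines, are hypothetical.

```python
import math

BOLTZMANN_EV = 8.617333262e-5  # eV/K


def prob_non_failure(t, temp_k, u0, gamma_c, criterion, stressors):
    """Multi-parametric BAZ probability of non-failure:
    P = exp[-gamma_C * C * t * exp(-(U0 - sum(gamma_i * sigma_i)) / (k T))].

    `stressors` is an iterable of (gamma_i, sigma_i) pairs.  The sum only
    collects the inputs of the different stressors; it does not imply that
    their effects superpose.
    """
    stress_term = sum(gamma_i * sigma_i for gamma_i, sigma_i in stressors)
    rate = gamma_c * criterion * math.exp(-(u0 - stress_term) / (BOLTZMANN_EV * temp_k))
    return math.exp(-rate * t)


# Hypothetical humidity (H = 0.85) and voltage (V = 600 V) stressors
loads = [(0.05, 0.85), (1e-4, 600.0)]
for hours in (10, 100, 1000):
    p = prob_non_failure(hours, 350.0, 0.45, 300.0, 3.5, loads)
    print(f"t = {hours:5d} h: P = {p:.4f}")
```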

Let us show how the multi-parametric BAZ model could be employed, using as an example a situation in which the product of interest is subjected to the combined action of elevated relative humidity $H$ and elevated voltage $V$, and let us assume that the failure rate of the product is determined by the level of the leakage current, so that the failure criterion is $C = I_*$. The probability of the product's non-failure can then be sought in the form

$$P = \exp\left[-\gamma_I I_* t \exp\left(-\frac{U_0 - \gamma_H H - \gamma_V V}{kT}\right)\right].$$

Here the factors $\gamma_I$, $\gamma_H$ and $\gamma_V$ reflect the sensitivities of the device under test to changes in the corresponding stressors. Although only two stressors are selected here, the relative humidity and the elevated voltage, the model can easily be made multi-parametric, i.e., generalized for as many physically meaningful stimuli as necessary. The sensitivity factors should be determined from FOAT in which the combined action of all the stimuli (stressors) of importance is considered.

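The physical meaning of the above distribution can be seen from the formulas

$$\frac{\partial P}{\partial I_*} = -\frac{H(P)}{I_*}, \qquad \frac{\partial P}{\partial t} = -\frac{H(P)}{t}, \qquad \frac{\partial P}{\partial U_0} = \frac{H(P)}{kT},$$

$$\gamma_H = -\frac{\partial P/\partial H}{\partial P/\partial U_0}, \qquad \gamma_V = -\frac{\partial P/\partial V}{\partial P/\partial U_0},$$

where $H(P) = -P\ln P$ is the entropy of the probability of non-failure. Two conclusions can be drawn from these formulas: 1) the magnitude of the change in the probability of non-failure always increases with an increase in the entropy (uncertainty) of the distribution and decreases with an increase in the leakage current and in time; 2) the last two formulas show the physical meaning of the sensitivity factors $\gamma_H$ and $\gamma_V$: up to sign, these factors are the ratios of the change in the probability of non-failure with respect to the corresponding stimulus to the change of this probability with respect to the stress-free activation energy.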
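These derivative relations can be verified by finite differences. The sketch below uses an arbitrary, hypothetical parameter set chosen so that $P$ is well away from 0 and 1, and compares $-(\partial P/\partial H)/(\partial P/\partial U_0)$ with $\gamma_H$ and $\partial P/\partial t$ with $-H(P)/t$, where $H(P) = -P\ln P$.

```python
import math

K = 8.617333262e-5  # Boltzmann's constant, eV/K

# Hypothetical parameters (not from the text)
params = {"i_star": 3.5, "t": 100.0, "u0": 0.45, "h": 0.85, "v": 600.0,
          "temp_k": 350.0, "g_i": 300.0, "g_h": 0.05, "g_v": 1e-4}


def p_nf(i_star, t, u0, h, v, temp_k, g_i, g_h, g_v):
    """P = exp[-gamma_I I* t exp(-(U0 - gamma_H H - gamma_V V) / (kT))]."""
    x = g_i * i_star * t * math.exp(-(u0 - g_h * h - g_v * v) / (K * temp_k))
    return math.exp(-x)


def partial_derivative(name, step=1e-7):
    """Central-difference partial derivative of P with respect to `name`."""
    up, down = dict(params), dict(params)
    up[name] += step
    down[name] -= step
    return (p_nf(**up) - p_nf(**down)) / (2.0 * step)


p = p_nf(**params)
entropy = -p * math.log(p)
ratio_h = -partial_derivative("h") / partial_derivative("u0")
dp_dt = partial_derivative("t")
print(f"P = {p:.4f}")
print(f"-(dP/dH)/(dP/dU0) = {ratio_h:.6f}  vs gamma_H = {params['g_h']}")
print(f"dP/dt = {dp_dt:.6e}  vs -H(P)/t = {-entropy / params['t']:.6e}")
```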

The governing equation for the probability of non-failure contains four empirical parameters: the stress-free activation energy $U_0$ and three sensitivity factors, namely the leakage-current factor $\gamma_I$, the relative-humidity factor $\gamma_H$ and the elevated-voltage factor $\gamma_V$. Here is how these parameters could be obtained from FOAT data. First, one should run FOAT at two different temperatures $T_1$ and $T_2$, keeping the levels (low or high) of the relative humidity and of the elevated voltage the same in both tests; record the percentages $P_1$ and $P_2$ of non-failed samples (or the percentages $Q_1 = 1 - P_1$ and $Q_2 = 1 - P_2$ of failed samples); and assume a certain criterion of failure (say, that the measured leakage current exceeds a certain level $I_*$). Then the relationships

$$P_{1,2} = \exp\left[-\gamma_I I_* t_{1,2} \exp\left(-\frac{U_0 - \gamma_H H - \gamma_V V}{kT_{1,2}}\right)\right]$$

for the probabilities of non-failure can be obtained. Since the numerators $U_0 - \gamma_H H - \gamma_V V$ in the exponents of these relationships are the same, the following equation must be fulfilled for the sought sensitivity factor $\gamma_I$:

$$T_1 \ln\left(-\frac{\ln P_1}{\gamma_I I_* t_1}\right) = T_2 \ln\left(-\frac{\ln P_2}{\gamma_I I_* t_2}\right).$$

Here $t_1$ and $t_2$ are the times at which the failures were detected. It is expected that more than two series of FOAT, at more than two temperature levels, are conducted, so that the sensitivity factor $\gamma_I$ is predicted with high enough accuracy. At the second step, FOAT at two relative humidity levels $H_1$ and $H_2$ should be conducted at the same temperature and voltage. This leads to the relationship

$$\gamma_H = \frac{kT}{H_1 - H_2}\ln\left(\frac{t_2 \ln P_1}{t_1 \ln P_2}\right).$$

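Similarly, at the third step of testing, by changing the voltage from $V_1$ to $V_2$, the expression

$$\gamma_V = \frac{kT}{V_1 - V_2}\ln\left(\frac{t_2 \ln P_1}{t_1 \ln P_2}\right)$$

for the sensitivity factor $\gamma_V$ can be obtained. The stress-free activation energy can then be computed, for any consistent humidity, voltage, temperature and time, as

$$U_0 = \gamma_H H + \gamma_V V - kT\ln\left(-\frac{\ln P}{\gamma_I I_* t}\right).$$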

The above relationships could also be obtained for the case of zero voltage, i.e., without a high-voltage bias. This will provide additional information on the reliability characteristics of the material and the device.
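The three-step procedure can be sketched in Python and checked by a round trip: synthetic FOAT outcomes are generated from an assumed set of "true" parameters, and the step-by-step relations for $\gamma_I$, $\gamma_H$, $\gamma_V$ and $U_0$ (written out in the docstrings) are then used to recover them. All parameter values, times and temperatures are hypothetical, as are the function names. With the $\gamma_I$ equation in the form $T_1\ln(-\ln P_1/(\gamma_I I_* t_1)) = T_2\ln(-\ln P_2/(\gamma_I I_* t_2))$, the unknown enters only through $\ln\gamma_I$, so the sketch solves it in closed form.

```python
import math

K = 8.617333262e-5  # Boltzmann's constant, eV/K


def p_nf(t, temp_k, h, v, u0, g_i, g_h, g_v, i_star):
    """Humidity-voltage BAZ probability of non-failure."""
    x = g_i * i_star * t * math.exp(-(u0 - g_h * h - g_v * v) / (K * temp_k))
    return math.exp(-x)


def gamma_i_two_temps(p1, t1, temp1, p2, t2, temp2, i_star):
    """Step 1: solve T1 ln(-ln P1/(g I* t1)) = T2 ln(-ln P2/(g I* t2)) for g."""
    a1 = math.log(-math.log(p1) / (i_star * t1))
    a2 = math.log(-math.log(p2) / (i_star * t2))
    return math.exp((temp1 * a1 - temp2 * a2) / (temp1 - temp2))


def gamma_two_levels(p1, t1, s1, p2, t2, s2, temp_k):
    """Steps 2 and 3: gamma = kT/(s1 - s2) * ln(t2 ln P1 / (t1 ln P2))."""
    return K * temp_k / (s1 - s2) * math.log(t2 * math.log(p1) / (t1 * math.log(p2)))


def u0_from_test(p, t, temp_k, h, v, g_i, g_h, g_v, i_star):
    """U0 = gamma_H H + gamma_V V - kT ln(-ln P / (gamma_I I* t))."""
    return g_h * h + g_v * v - K * temp_k * math.log(-math.log(p) / (g_i * i_star * t))


# Hypothetical "true" parameters used only to synthesize test outcomes
true_params = {"u0": 0.45, "g_i": 300.0, "g_h": 0.05, "g_v": 1e-4, "i_star": 3.5}

# Step 1: two temperatures, same H and V
p1 = p_nf(100.0, 330.0, 0.85, 600.0, **true_params)
p2 = p_nf(50.0, 360.0, 0.85, 600.0, **true_params)
g_i = gamma_i_two_temps(p1, 100.0, 330.0, p2, 50.0, 360.0, true_params["i_star"])

# Step 2: two humidity levels, same T and V
q1 = p_nf(100.0, 350.0, 0.85, 600.0, **true_params)
q2 = p_nf(150.0, 350.0, 0.60, 600.0, **true_params)
g_h = gamma_two_levels(q1, 100.0, 0.85, q2, 150.0, 0.60, 350.0)

# Step 3: two voltage levels, same T and H
r1 = p_nf(100.0, 350.0, 0.85, 600.0, **true_params)
r2 = p_nf(100.0, 350.0, 0.85, 300.0, **true_params)
g_v = gamma_two_levels(r1, 100.0, 600.0, r2, 100.0, 300.0, 350.0)

# Stress-free activation energy from any one consistent test point
u0 = u0_from_test(p1, 100.0, 330.0, 0.85, 600.0, g_i, g_h, g_v, true_params["i_star"])
print(f"gamma_I = {g_i:.4g}, gamma_H = {g_h:.4g}, gamma_V = {g_v:.4g}, U0 = {u0:.4g} eV")
```

In practice the recovered values would scatter, because measured $P$ values carry sampling error; that is one reason the text recommends more than two test series per step.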

Let, e.g., the following input information be available from FOAT: 1) after time $t_1$ of testing at temperature $T_1$, voltage $V = 600$ V and relative humidity $H = 0.85$, 10% of the tested modules exceeded the allowable (critical) level $I_*$ of the leakage current and, hence, failed, so that the probability of non-failure is $P_1 = 0.9$; 2) after time $t_2$ of testing at temperature $T_2$, at the same voltage and the same relative humidity, 20% of the tested samples reached or exceeded the critical level of the leakage current and, hence, failed, so that the probability of non-failure is $P_2 = 0.8$. Then the sensitivity factor $\gamma_I$ can be obtained from the equation

$$T_1 \ln\left(-\frac{\ln 0.9}{\gamma_I I_* t_1}\right) = T_2 \ln\left(-\frac{\ln 0.8}{\gamma_I I_* t_2}\right).$$

This equation yields the sensitivity factor $\gamma_I$. A more accurate solution can always be obtained by using Newton's iterative method for solving transcendental equations. This concludes the first step of testing. At the second step, FOAT at two relative humidity levels, $H_1$ and $H_2$, was conducted at the same levels of temperature and voltage. Then the sensitivity factor $\gamma_H$ is

$$\gamma_H = \frac{kT}{H_1 - H_2}\ln\left(\frac{t_2 \ln P_1}{t_1 \ln P_2}\right).$$

Let, e.g., 5% of the tested modules fail after time $t_1$ of testing at relative humidity $H_1$, at the given voltage (say, $V = 600$ V) and temperature $T$, so that $P_1 = 0.95$, and let 10% of the tested modules fail after time $t_2$ of testing at the same temperature and at relative humidity $H_2$, so that $P_2 = 0.9$. Then the above equation, with the Boltzmann constant $k = 8.617 \times 10^{-5}$ eV/K, yields the value of $\gamma_H$. At the third step, FOAT at two different voltage levels $V_1$ and $V_2$ has been carried out for the same temperature-humidity bias, $T$ and $H$, and it has been determined that 10% of the tested devices failed after time $t_1$ of testing ($P_1 = 0.9$) and 20% of the devices failed after time $t_2$ of testing ($P_2 = 0.8$). The voltage sensitivity factor is

$$\gamma_V = \frac{kT}{V_1 - V_2}\ln\left(\frac{t_2 \ln 0.9}{t_1 \ln 0.8}\right).$$

After the sensitivity factors are found, the stress free activation energy can be determined for the given temperature and for any combination of the loadings (stimuli). Calculations indicate that the stress free activation energy in the above numerical example (even with the rather tentative, but still realistic, input data) is about

This result is consistent with existing experimental data for IC devices. Indeed, for semiconductor device failure mechanisms the activation energy ranges from 0.3 to 0.6 eV; for metallization defects and electromigration in Al it is about 0.5 eV; for charge loss it is on the order of 0.6 eV; and for Si junction defects it is 0.8 eV.
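The first-step equation of the numerical example, $f(\gamma_I) = T_1\ln\left(-\frac{\ln P_1}{\gamma_I I_* t_1}\right) - T_2\ln\left(-\frac{\ln P_2}{\gamma_I I_* t_2}\right) = 0$, can be solved iteratively by Newton's method, as suggested. The sketch below uses $P_1 = 0.9$ and $P_2 = 0.8$ from the example; the times, temperatures and critical current ($t_1 = t_2 = 100$ h, $T_1 = 330$ K, $T_2 = 360$ K, $I_* = 3.5$ μA) are hypothetical, chosen only for illustration. In this form the unknown enters only through $\ln\gamma_I$, so a closed-form root also exists, which makes the iteration easy to cross-check.

```python
import math


def gamma_i_newton(p1, t1, temp1, p2, t2, temp2, i_star,
                   guess=1.0, rel_tol=1e-12, max_iter=100):
    """Newton iteration for
    f(g) = T1 ln(-ln P1/(g I* t1)) - T2 ln(-ln P2/(g I* t2)) = 0."""
    c1 = -math.log(p1) / (i_star * t1)
    c2 = -math.log(p2) / (i_star * t2)
    g = guess
    for _ in range(max_iter):
        f = temp1 * math.log(c1 / g) - temp2 * math.log(c2 / g)
        df = (temp2 - temp1) / g          # df/dg
        step = f / df
        g -= step
        if g <= 0.0:
            raise ValueError("iterate left the positive domain; try another guess")
        if abs(step) < rel_tol * g:
            return g
    raise RuntimeError("Newton iteration did not converge")


# P1, P2 from the example; times (h), temperatures (K) and I* (uA) are hypothetical
gamma_i = gamma_i_newton(0.9, 100.0, 330.0, 0.8, 100.0, 360.0, 3.5)
print(f"gamma_I = {gamma_i:.6g} per (uA*h)")
```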

The total cost of reliability could be quantified and even minimized

Let us show [47], using rather elementary reasoning, how the total cost of an IC product associated with its reliability (dependability), on the one hand, and its cost-effectiveness, on the other, could be minimized. The cost of achieving and improving reliability can be estimated by the exponential formula $C_R = C_{R0}\exp[r(R - R_0)]$, where $R$ is the actual level of the MTTF, $R_0$ is the specified MTTF level, $C_{R0}$ is the cost of achieving the level $R_0$ of reliability and $r$ is the cost factor associated with reliability improvements. Similarly, let us assume that the cost of reliability repair can also be assessed by the exponential formula $C_F = C_{F0}\exp[-f(R - R_0)]$, where $C_{F0}$ is the cost of restoring the product's reliability and $f$ is the factor of the reliability restoration (repair) cost. The latter formula reflects the natural assumption that the cost of repair is lower for a product of higher reliability. The total cost $C = C_R + C_F$ has its minimum

$$C_{\min} = C_R\left(1 + \frac{r}{f}\right)$$

when the minimization condition $\frac{dC}{dR} = rC_R - fC_F = 0$, i.e., $rC_R = fC_F$, is fulfilled. Let us further assume that the factor $r$ of the reliability improvement cost is inversely proportional to the MTTF (dependability criterion) and that the factor $f$ of the reliability restoration cost is inversely proportional to the mean time to repair, MTTR (reparability criterion), with the same proportionality coefficient, so that $r/f = \mathrm{MTTR}/\mathrm{MTTF}$. Then the minimum total cost is

$$C_{\min} = \frac{C_R}{K},$$

where the availability

$$K = \frac{\mathrm{MTTF}}{\mathrm{MTTF} + \mathrm{MTTR}}$$

is the probability that the product is sound and available to the user at any time during steady-state operation. In this formula MTTF is the mean time to failure and MTTR is the mean time to repair. The above result for the minimum total cost establishes, in an elementary way, the relationship between the minimum total cost of achieving and maintaining (restoring) an adequate reliability level and the availability criterion. The obtained relationship quantifies the intuitively obvious fact that the total cost of the product depends on both the cost of achieving its reliability and its availability.

The formula

$$\frac{C_F}{C_R} = \frac{r}{f} = \frac{\mathrm{MTTR}}{\mathrm{MTTF}} = \frac{1 - K}{K},$$

which follows from the above derivation, indicates that if the availability index is high, the ratio of the cost of repairs to the cost aimed at improved reliability is low, and when the availability index is low, this ratio is high. This intuitively obvious result is quantified by the obtained simple relationship. The above reasoning can be used to interpret the availability index from the cost-effectiveness point of view: the index $K = C_R/C_{\min}$ reflects, in effect, the ratio of the cost of improving reliability to the minimum total cost of the product associated with its reliability level. This and similar, even elementary, models can be helpful, particularly when there is a need to minimize costs without compromising reliability, i.e., in various optimization analyses.
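The minimization can be reproduced numerically, assuming the exponential cost laws $C_R = C_{R0}e^{r(R-R_0)}$ and $C_F = C_{F0}e^{-f(R-R_0)}$. The sketch below treats $r$ and $f$ as constants while minimizing over $x = R - R_0$, then sets $r = 1/\mathrm{MTTF}$ and $f = 1/\mathrm{MTTR}$ and checks that $C_{\min} = C_R/K$ and $C_F/C_R = (1-K)/K$. All cost and time values, and the function names, are hypothetical.

```python
import math


def optimal_reliability_cost(c_r0, c_f0, r, f):
    """Minimize C(x) = C_R0 exp(r x) + C_F0 exp(-f x), x = R - R0.

    dC/dx = 0 gives r C_R = f C_F, i.e. x* = ln(f C_F0 / (r C_R0)) / (r + f).
    Returns (x*, C_R, C_F, C_min) at the optimum.
    """
    x_opt = math.log(f * c_f0 / (r * c_r0)) / (r + f)
    c_r = c_r0 * math.exp(r * x_opt)      # cost of achieving reliability
    c_f = c_f0 * math.exp(-f * x_opt)     # cost of repair (restoration)
    return x_opt, c_r, c_f, c_r + c_f


def total_cost(x, c_r0, c_f0, r, f):
    """Total cost C(x) for a given deviation x = R - R0."""
    return c_r0 * math.exp(r * x) + c_f0 * math.exp(-f * x)


mttf, mttr = 1000.0, 10.0                 # hours (hypothetical)
availability = mttf / (mttf + mttr)
x_opt, c_r, c_f, c_min = optimal_reliability_cost(100.0, 50.0, 1.0 / mttf, 1.0 / mttr)

print(f"x* = {x_opt:.2f} h, C_min = {c_min:.3f}, C_R/K = {c_r / availability:.3f}")
print(f"C_F/C_R = {c_f / c_r:.4f}, (1-K)/K = {(1 - availability) / availability:.4f}")
```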

Possible next generation of the QTs

The application of FOAT, the PDfR concept and particularly the multi-parametric BAZ model enables a dramatic improvement of the state of the art in E&P product reliability prediction and assurance. Since FOAT cannot do without simple, easy-to-use and physically meaningful predictive modeling, the role of such modeling, both computer-aided and analytical (mathematical), in making the suggested new approach to QT practical and successful is paramount. It is also imperative that the reliability physics underlying the mechanisms and modes of failure be well understood. Such an understanding can be achieved only if a flexible, powerful and effective PDfR effort is implemented. The next-generation QT could be viewed as a "quasi-FOAT" or "mini-FOAT", a sort of "initial stage of FOAT" that more or less adequately replicates the initial non-destructive, yet full-scale, stage of the FOAT conducted and agreed upon when the particular manufacturing technology of importance was developed. The duration and conditions of such a "mini-FOAT" QT could and should be established based on the observed and recorded results of the actual, pre-manufacturing FOAT, and the actual QT should be limited to the stage at which no failures, or a predetermined, acceptably small number of failures, were observed in the actual, full-scale FOAT that was conducted and analyzed.

Various suitable PHM technologies could be tested concurrently as useful "canaries" to make sure that the safe limit is established correctly and is not exceeded. Such an approach to qualifying electronic devices into products will enable the industry to specify, and the manufacturers to assure, a predicted and adequate probability of failure for an E&P product that has passed the QT and is expected to be operated in the field under the given conditions for the given time. The appropriate highly focused and highly cost-effective FOAT should be thoroughly designed, implemented and analyzed, so that the QT of a product of importance is based on trustworthy FOAT data.

Three-step concept in prognostics-and-health monitoring (PHM) engineering

When encountering a particular reliability problem at the design, fabrication, testing or operation stage of a product's life, and considering the use of predictive modeling to assess the seriousness and the likely consequences of a detected failure, one has to choose whether a statistical model, a physics-of-failure-based model, or a suitable combination of these two major modeling tools should be employed to address the problem of interest and to decide how to proceed. A three-step concept [50] is suggested as a possible way to go in such a situation. At the first step of this concept, the classical statistical Bayes formula can be used as a technical diagnostics tool. Its objective is to identify, on a probabilistic basis, the faulty (malfunctioning) device(s) from the obtained signals ("symptoms of faults").
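A minimal sketch of the first (diagnostic) step, assuming the classical Bayes formula with a single observed symptom: given prior probabilities that each of several candidate devices is the faulty one and the conditional probabilities of observing the symptom if it is, the posterior probabilities point to the most likely culprit. The function name and all numbers are hypothetical.

```python
def bayes_posterior(priors, likelihoods):
    """Posterior P(device i faulty | symptom) by Bayes' formula.

    priors[i]      -- prior probability that device i is the faulty one
    likelihoods[i] -- probability of observing the symptom if device i is faulty
    """
    joint = [prior * lik for prior, lik in zip(priors, likelihoods)]
    evidence = sum(joint)                  # total probability of the symptom
    return [j / evidence for j in joint]


# Hypothetical priors and symptom likelihoods for three candidate devices
posterior = bayes_posterior([0.5, 0.3, 0.2], [0.1, 0.6, 0.7])
most_likely = max(range(len(posterior)), key=posterior.__getitem__)
print([round(p, 3) for p in posterior], "-> device", most_likely)
```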

The physics-of-failure-based BAZ model, and particularly its multi-parametric extension, can be employed at the second step to assess the remaining useful life (RUL) of the faulty device(s). If the RUL is still long enough, no action might be needed; if it is not, corrective restoration action becomes necessary. In any event, after the first two steps are carried out, the device is put back into operation (testing), provided that the assessed probability of its continuing failure-free operation is found to be satisfactory. If an operational failure nonetheless occurs, the third step should be undertaken to update the reliability assessment. The statistical beta-distribution, in which the probability of failure is treated as a random variable, is suggested for use at this step.
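For the third step, one common way to use the beta-distribution is as a conjugate prior for the probability of failure: a Beta(α, β) prior combined with $m$ failures in $n$ observed operations gives a Beta(α + m, β + n − m) posterior. The sketch below assumes this standard Bayesian update, which the text does not spell out; the function name, prior parameters and observation counts are hypothetical.

```python
def update_pof(alpha, beta, failures, trials):
    """Beta-binomial conjugate update of the probability of failure (PoF).

    Prior Beta(alpha, beta); after `failures` failures in `trials` operations
    the posterior is Beta(alpha + failures, beta + trials - failures).
    Returns the posterior parameters and the posterior mean PoF.
    """
    if not 0 <= failures <= trials:
        raise ValueError("failures must be between 0 and trials")
    a = alpha + failures
    b = beta + trials - failures
    return a, b, a / (a + b)


# Hypothetical prior with mean PoF 0.01, then one failure in 50 operations
a, b, mean_pof = update_pof(1.0, 99.0, failures=1, trials=50)
print(f"posterior Beta({a:g}, {b:g}), mean PoF = {mean_pof:.4f}")
```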

While various statistical methods and approaches, including the Bayes formula and the beta-distribution, have been well known and widely used in numerous applications for many decades, the BAZ model was introduced into the microelectronics reliability area only several years ago. Its attributes and use are therefore addressed and discussed in some detail. The suggested concept is illustrated by a numerical example geared to today's highly popular prognostics-and-health-monitoring (PHM) effort in actual operation, such as, e.g., an en-route flight mission.

Electron device subjected to temperature cycling

Predicted time-to-failure: Using the BAZ model, the probability of non-failure of a vulnerable material, such as, e.g., a solder joint interconnection experiencing inelastic strains during temperature cycling, can be sought as

$$P = \exp\left[-\gamma_R R t \exp\left(-\frac{U_0 - nW}{kT}\right)\right].$$

Here $U_0$ is the activation energy, which is the characteristic of the solder material's propensity to fracture; $W$ is the damage caused by a single temperature cycle, measured, in accordance with Hall's concept [109], by the hysteresis loop area of a single temperature cycle for the strain of interest; $T$ is the absolute temperature (say, the cycle's mean temperature); $n$ is the number of cycles; $k = 8.617 \times 10^{-5}$ eV/K is Boltzmann's constant; $t$ is time; $R$ is the measured (monitored) electrical resistance at the peripheral joint location; and $\gamma_R$ is the sensitivity factor for the resistance. It can be shown that the MTTF is expressed as

$$\tau = \frac{1}{\gamma_R R}\exp\left(\frac{U_0 - nW}{kT}\right).$$

Mechanical failure associated with temperature cycling takes place when the number of cycles is $n = n_f = U_0/W$. When this condition is met, the temperature in the denominator of the exponent of the above BAZ equation becomes irrelevant, and this equation yields

$$P_f = \exp\left(-\frac{t_f}{\tau_f}\right) = \exp(-\gamma_R R t_f),$$

where $P_f$ is the measured probability of non-failure for the situation when failure occurred because of temperature cycling, $t_f$ is the time at failure and $\tau_f = 1/(\gamma_R R)$ is the MTTF. If, e.g., 20 devices have been temperature cycled and the high resistance considered as an indication of failure was detected in 15 of them, then $P_f = 5/20 = 0.25$. If the number of cycles during such FOAT was $n_f$ and each cycle lasted 20 min = 1200 s, then the time at failure is $t_f = 1200\,n_f$ s, and the sensitivity factor $\gamma_R$ and the MTTF $\tau_f$ can be determined as

$$\gamma_R = -\frac{\ln P_f}{R t_f} = \frac{1.3863}{R t_f}$$

and

$$\tau_f = -\frac{t_f}{\ln P_f} = 0.7213\, t_f.$$

According to Hall's concept [109], the energy of a single cycle should be evaluated by running a specially designed test in which strain gages are used. Let, e.g., this energy (the area of the hysteresis loop) in the above tests be $W$. Then the stress-free activation energy of the solder material is $U_0 = n_f W$.

To assess the number of cycles to failure in actual operating conditions, one could assume that the temperature range in these conditions is, say, half the accelerated test range and that the area of the hysteresis loop is proportional to the temperature range. Then the number of cycles to failure is $n_{op} = \frac{U_0}{W/2} = 2n_f$.

If the duration of one cycle in actual operating conditions is one day, then the time to failure will be $t_{op} = 2n_f$ days, i.e., about $2n_f/365$ years.
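The temperature-cycling calculation chain can be sketched as follows. The 15-of-20 failure count and the 20-min (1200 s) cycle come from the example; the number of cycles $n_f$, the resistance $R$ and the loop energy $W$ (taken in eV so that $U_0$ comes out in eV) are hypothetical placeholders, as are the function names. The field estimate uses the stated assumptions: half the test temperature range, loop area proportional to that range, one cycle per day.

```python
import math


def cycling_foat(n_failed, n_tested, n_cycles, cycle_s, resistance, loop_energy):
    """Quantities from temperature-cycling FOAT at failure, n_f = U0 / W.

    P_f = exp(-gamma_R R t_f)  =>  gamma_R = -ln P_f / (R t_f),
    MTTF = 1 / (gamma_R R) = -t_f / ln P_f,  U0 = n_f W.
    """
    p_f = 1.0 - n_failed / n_tested          # measured probability of non-failure
    t_f = n_cycles * cycle_s                 # time at failure, s
    gamma_r = -math.log(p_f) / (resistance * t_f)
    mttf = -t_f / math.log(p_f)
    u0 = n_cycles * loop_energy              # stress-free activation energy
    return p_f, t_f, gamma_r, mttf, u0


def field_cycles_to_failure(u0, loop_energy_test, range_ratio=0.5):
    """Cycles to failure if the loop area scales with the temperature range."""
    return u0 / (loop_energy_test * range_ratio)


N_CYCLES, W_TEST, R_OHM = 2000, 1e-4, 1.0    # hypothetical n_f, W (eV), R (ohm)
p_f, t_f, gamma_r, mttf, u0 = cycling_foat(15, 20, N_CYCLES, 1200.0, R_OHM, W_TEST)
n_field = field_cycles_to_failure(u0, W_TEST)
print(f"P_f = {p_f}, t_f = {t_f:.3g} s, MTTF = {mttf:.4g} s, U0 = {u0:.3g} eV")
print(f"field life ~ {n_field:.0f} cycles = {n_field / 365:.1f} years at one cycle/day")
```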

Role of the human factor (HF)

PPM concept in HF related situations

Human error contributes to about 80% of vehicular (aerospace, maritime, railroad, automotive) casualties (see, e.g., [110-126]). The ability to understand the nature of such errors and to minimize their likelihood is of obvious and significant importance. While considerable improvements in various vehicular technologies and other HF-related missions and situations can be achieved through better ergonomics, a better work environment and other traditional means that directly affect human behavior and performance, there is also an opportunity for a further reduction in vehicular and other HF-related casualties through a better understanding of the role that various uncertainties play in the designer's and operator's world of work. By employing quantifiable and measurable ways to assess the role of these uncertainties, and by treating the HITL as a part, often the most critical part, of the complex man-instrumentation-equipment-vehicle-environment system, one could dramatically improve human performance and predict, minimize and, when possible and appropriate, even specify the probability of occurrence of a never-completely-avoidable casualty. It is the author's belief that adequate human performance cannot be assured if it is not quantified and, since nobody is perfect, that such quantification should be done on a probabilistic basis. In effect, the only difference between what is perceived as failure-free and as unsatisfactory human performance is the difference in the levels of the never-zero probability of human failure. Whenever feasible, the quantitative PPM concept should complement, in various HF-related situations, the existing human psychology practices, which are, as a rule, qualitative a-posteriori statistical assessments.
A PPM approach based particularly on the DEPDF is a suitable quantitative technique for assessing the probability of human non-failure (HnF) in various aircraft missions and off-normal flight situations. The long-term HCF is considered below vs. the (elevated) short-term MWL that the human has to cope with to successfully withstand an off-normal (emergency) situation.

The famous 2009 US Airways "miracle-on-the-Hudson" successful ditching and the infamous 1998 Swiss Air "UN-shuttle" disaster are chosen to illustrate the usefulness and fruitfulness of the approach. The input data are hypothetical, but not unrealistic, and, although the obtained numbers make physical sense, it is the approach, and not the numbers, that is, in the author's opinion, the main merit of the study. As the co-inventor of calculus, the great German mathematician Gottfried Leibnitz, put it, "there are things in this world far more important than the most splendid discoveries - it is the methods by which they were made." It is shown that it was the exceptionally high HCF of the US Airways crew, and especially that of its captain Sullenberger, that made a reality of what seemed at first glance to be a "miracle". It is shown also that the highly professional and, in general, highly qualified Swiss Air crew exhibited inadequate performance (quantified in our analysis as a relatively low HCF level) in the much less challenging off-normal situation they encountered. The Swiss Air crew made several serious errors and, as a result, crashed the aircraft. In addition to the DEPDF-based approach, we show, using a convolution approach of applied probability, that the probability of safe landing can be evaluated by comparing the (random) operation time (which consists of the decision-making time and the actual landing time) with the "available" anticipated time needed for landing. It is concluded that the developed formalisms, after trustworthy input data are obtained (using, e.g., flight simulators [119] or by applying the Delphi method (see, e.g., [145])), might be applicable even beyond the vehicular domain and can be employed in various HITL situations, when a long-term high HCF is imperative and the ability to quantify it in comparison with the short-term MWL is highly desirable.

In the analysis below we show, as an example, how the double-exponential probability distribution function (DEPDF) could be applied to evaluate the likelihood of human non-failure in an emergency situation affecting vehicular mission success and safety. The famous 2009 "miracle-on-the-Hudson" event and the infamous 1998 "UN-shuttle" disaster [131] are used to illustrate the substance and fruitfulness of the approach. We try to shed "probabilistic light" on these two well-known events. As far as the "miracle-on-the-Hudson" is concerned, we intend to provide quantitative assessments of why such a "miracle" could actually have occurred, and of what had and had not indeed been a "miracle" in this incident: a divine intervention, a perceptible interruption of the laws of nature, or "simply" a wonderful and rare occurrence due to a heroic act of the aircraft crew, and especially of its captain Sullenberger ("Sully"), the lead "miracle worker" in the incident. As to the "UN-shuttle" crash, we are going to demonstrate that it occurred because of the low HCF of the aircraft crew in an off-normal situation that they had encountered and that was, in effect, much less demanding than the "miracle-on-the-Hudson" situation.

MWL vs. HCF: A way to quantify human performance

In the simplest model, a human failure should be attributed to an insufficient human capacity factor (HCF) when the human has to cope with a high cognitive (mental) workload (MWL). Our suggested MWL/HCF models, and their possible modifications and generalizations, can be helpful, after appropriate sensitivity factors are established and sensitivity analyses (SA) are carried out: 1) when developing guidelines for personnel selection and training; 2) when choosing the appropriate simulation conditions; and/or 3) when there is a need to decide whether the existing levels of automation and of the employed equipment (instrumentation) are adequate in off-normal, but not impossible, situations, and, if not, 4) whether additional, and perhaps more advanced and more expensive, equipment or instrumentation should be developed, tested and installed, so that the requirements and constraints associated with a mission or situation of importance are met.

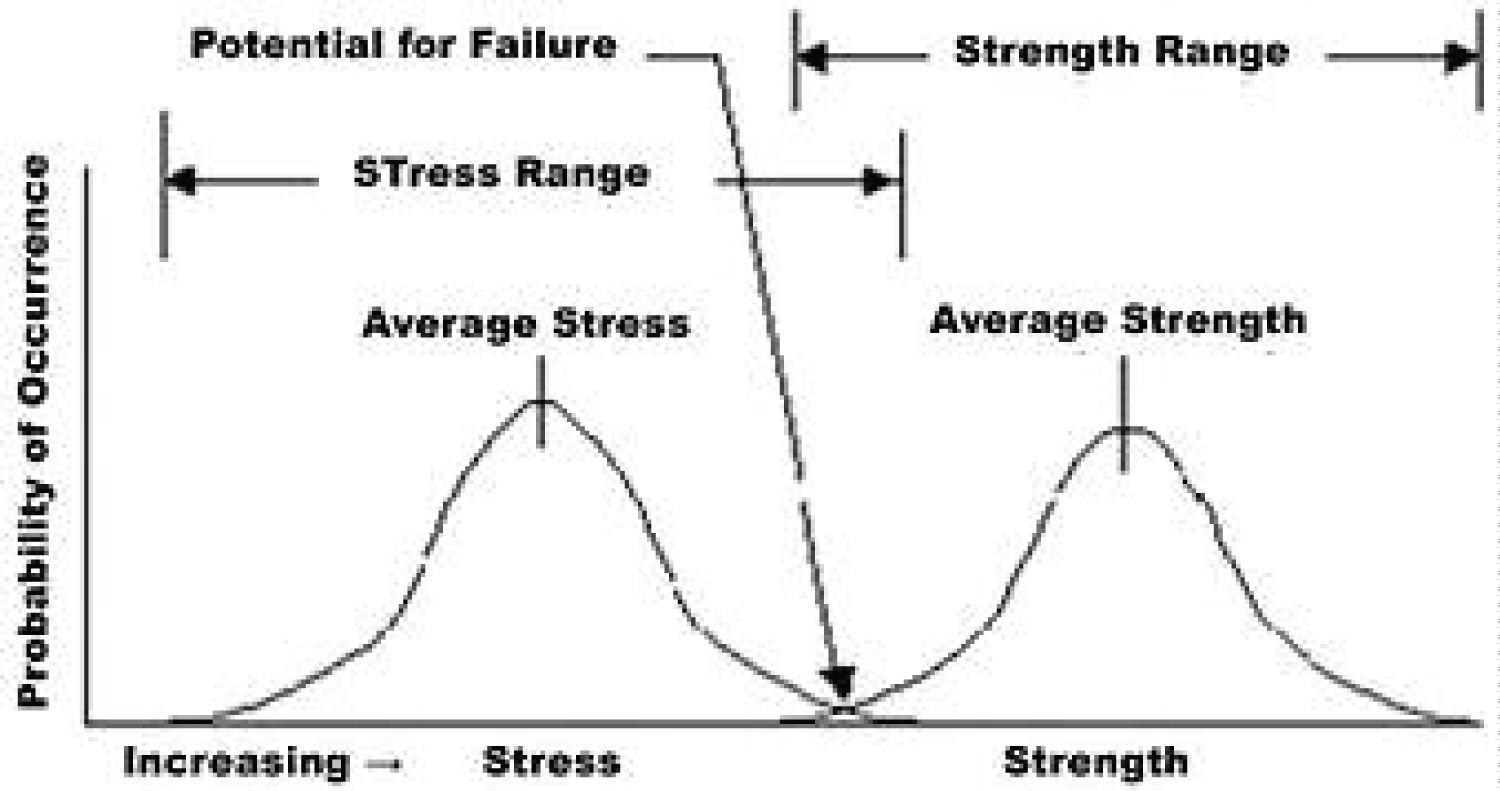

Our MWL/HCF-based approach is, in effect, an attempt to quantify on a probabilistic basis, using probabilistic risk management (PRM) techniques, the role that the human plays in terms of his/her ability (capacity) to cope with mental overload. Using an analogy from the reliability engineering field, and particularly the well-known "stress-strength" interference model (Figure 2), the MWL could be viewed as a certain possible "demand" ("stress"), while the HCF could be viewed as an available or required "capacity" ("strength"). The MWL level depends on the operational conditions and the complexity of the mission, i.e., it has to do with the significance of the general task, while the HCF considers, but might not be limited to, the human's professional experience and qualifications; capabilities and skills; level and specifics of his/her training; performance sustainability; ability to concentrate; mature thinking; ability to operate effectively, in a "tireless" fashion, under pressure and, if needed, for a long period of time (tolerance to stress); team-player attitude; swiftness in reaction, if necessary, etc., i.e., all the critical qualities that would enable him/her to cope with a high MWL. It is noteworthy that the ability to evaluate the "absolute" level of the MWL, important as it might be for numerous existing non-comparative evaluations, is less critical in our approach: it is the comparative levels of the MWL and the HCF, and the comparative assessments and evaluations, that are important.
The author does not intend to come up with an accurate, complete, ready-to-go, "off-the-shelf"-type of a methodology, in which all the i's are dotted and all the t's are crossed, but intends to show how the powerful and flexible PRM methods could be effectively employed to quantify the role of the human factor by comparing, on the probabilistic basis, the actual and/or possible MWL and the available or required HCF levels, so that the adequate and sufficient safety factor is assured.
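The stress-strength interference analogy can be illustrated with the textbook case in which both the demand (MWL) and the capacity (HCF) are independent, normally distributed random variables: the probability that capacity exceeds demand is then $\Phi(\mathrm{SM})$, where $\Phi$ is the standard normal distribution function and the safety margin is $\mathrm{SM} = (\mu_{HCF} - \mu_{MWL})/\sqrt{s_{HCF}^2 + s_{MWL}^2}$. This normal-normal model is a generic illustration assumed here for simplicity, not the DEPDF-based model used in this work; the function name and the numbers, taken as dimensionless relative levels, are hypothetical.

```python
import math


def prob_capacity_exceeds_demand(mean_hcf, sd_hcf, mean_mwl, sd_mwl):
    """P(HCF > MWL) for independent normal HCF ("strength") and MWL ("stress").

    The difference HCF - MWL is normal, so P = Phi(SM) with the safety margin
    SM = (mean_hcf - mean_mwl) / sqrt(sd_hcf**2 + sd_mwl**2).
    """
    safety_margin = (mean_hcf - mean_mwl) / math.hypot(sd_hcf, sd_mwl)
    return 0.5 * (1.0 + math.erf(safety_margin / math.sqrt(2.0)))


# Hypothetical relative levels: the same MWL against two different HCF levels
for mean_hcf in (8.0, 10.0):
    p = prob_capacity_exceeds_demand(mean_hcf, 1.0, 7.0, 1.5)
    print(f"mean HCF = {mean_hcf}: P(HCF > MWL) = {p:.3f}")
```

As the text stresses, only the relative levels of HCF and MWL enter: shifting both means by the same amount leaves the probability unchanged.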

In this section the famous "miracle-on-the-Hudson" event is used as a suitable example to illustrate the concept in question. We believe that the approach taken, with appropriate modifications and generalizations, is applicable to many HITL situations, not necessarily in the vehicular domain, in which a human encounters an uncertain environment and/or a hazardous situation and/or interacts with never-perfect hardware and software. The author realizes that his approach might not be easily accepted by some traditional human psychologists, who might feel that the problem is too complex to lend itself to any type of formalized quantification. With this in mind, we suggest possible next steps (future work) that could be conducted using, when necessary, flight simulators to correlate the suggested probabilistic models with existing practice. Testing on a flight simulator is analogous to highly accelerated life testing (HALT) and particularly to failure-oriented accelerated testing (FOAT) in electronics and photonics reliability engineering.

The famous "Miracle-on-the-Hudson" event is chosen in this section to illustrate the possible application of the MWL-HCF bias in HITL-related missions and situations. It is important to emphasize that this is merely an illustration of how these two major aspects of a HITL-related situation could be treated, and not an attempt to show, in a rather tentative fashion, why Capt. Sullenberger was indeed successful in an extraordinary situation in which other navigators might or might not have been.

HCF vs. MWL approach

"The ten commandments" of the HCF vs. MWL approach: Here are the major principles ("ten commandments") of our PRM-based approach in the HF related tasks:

1. HCF is viewed in this approach as an appropriate quantitative measure (not necessarily and not always probabilistic though) of the human ability to cope with an elevated short term MWL;

2. It is the relative levels of the MWL and HCF (whether deterministic or random) that determine the probability of human non-failure in a particular HITL situation;

3. Such a probability should not be low, but need not be higher than necessary either: it has to be adequate for the particular anticipated application and situation;

4. When adequate human performance is imperative, ability to quantify it is highly desirable, especially if one intends to optimize and assure adequate HITL performance;

5. One cannot assure adequate human performance just by conducting today's routine human-psychology-based efforts (which might provide appreciable improvements, but do not quantify human behavior and performance; in addition, these efforts might be unnecessarily costly), and/or just by following the existing "best practices", which are not aimed at a particular situation or application; the events of interest are certainly rare events, and "best practices" might or might not be applicable;

6. MWLs and HCFs should consider, to an extent possible, the most likely anticipated situations; obviously, the MWLs are and HCFs should be different for a jet fighter pilot, for a pilot of a commercial aircraft, or for a helicopter pilot, and should be assessed and specified differently;

7. PRM is an effective means for improving the state-of-the-art in the HITL field: nobody and nothing is perfect, and the difference between a failed human performance and a successful one is "merely" in the level of the probability of non-failure;

8. Failure oriented accelerated testing (FOAT) on a flight simulator is viewed as an important constituent part of the PRM concept in various HITL situations: it is aimed at better understanding of the factors underlying possible failures; it might be complemented by the Delphi (experts' opinion) effort;

9. Extensive predictive modeling (PM) is another important constituent of the PRM based effort, and, in combination with highly focused and highly cost effective FOAT, is a powerful and effective means to quantify and perhaps nearly eliminate human failures;

10. Consistent, comprehensive and psychologically meaningful PRM assessments can lead to the most feasible HITL qualification (certification) methodologies, practices and specifications.

Mental workload (MWL): Our HCF vs. MWL approach considers elevated (off-normal) random relative HCF and MWL levels with respect to the ordinary (normal, pre-established) deterministic HCF and MWL values. These values could and should be established on the basis of existing human psychology practices. The interrelated concepts of situation awareness and MWL ("demand") are central to today's aviation psychology. Cognitive (mental) overload has been recognized as a significant cause of error in aviation. The MWL is directly affected by the challenges that a navigator faces when controlling the vehicle in a complex, heterogeneous, multitask, and often uncertain and harsh environment. Operating in such an environment involves several different and interrelated kinds of situation awareness: spatial awareness for instrument displays; system awareness, which keeps the pilot informed about actions taken by automated systems; and task awareness, which has to do with attention and task management. Time lags between critical variables require predictions and actions in an uncertain world. The MWL depends on the operational conditions and on the complexity of the mission, and therefore has to do with the significance of the long- or short-term task. The long-term MWL is illustrated in Figure 3.

Task management is directly related to the level of the MWL, since the competing "demands" of the tasks for attention might exceed the operator's resources, i.e., his/her "capacity" to adequately cope with the "demands" imposed by the MWL.

Measuring the MWL has become a key method of improving aviation safety. There is an extensive body of published work in the psychological literature devoted to the measurement of the MWL in aviation, both military and commercial. A pilot's MWL can be measured using subjective ratings and/or objective measures. During FOAT (simulation tests), after the expected failure is defined, subjective ratings can be collected, e.g., as periodic inputs to a data collection device that prompts the pilot every few minutes to enter a number between, say, 1 and 10 to estimate his/her MWL. Objective MWL measures, such as heart rate variability, are also available. Another possible approach uses post-flight paper questionnaires. It is easier to measure the MWL on a flight simulator than in actual flight conditions. In a real aircraft, one would probably be restricted to post-flight subjective (questionnaire) measurements, since one would not want to interfere with the pilot's work.

Given the multidimensional nature of MWL, no single measurement technique can be expected to account for all of its important aspects. In modern military aircraft, the complexity of information, combined with time stress, creates difficulties for the pilot under combat conditions, and the first step to mitigate this problem is to measure and manage the MWL. Current research efforts in measuring MWL use psycho-physiological techniques, such as electroencephalographic, cardiac, ocular, and respiration measures, in an attempt to identify and predict MWL levels. Measurement of cardiac activity has been a useful physiological technique in the assessment of MWL, using both tonic variations in heart rate and the processed cardiac signal.

Human capacity factor (HCF): HCF includes, but might not be limited to, the following major qualities that would enable a professional to successfully cope with an elevated, off-normal MWL: age; personality type; state of health and fitness; psychological suitability for a particular task (relevant capabilities and skills, and performance sustainability/consistency/predictability); professional experience and qualifications; education, both special and general; level, quality and timeliness of training; leadership ability; independent thinking and independent acting, when necessary; mature (realistic) thinking; ability to concentrate; ability to anticipate; adequate level of self-control and ability to act in cold blood in hazardous and even life-threatening situations; ability to operate effectively under pressure, and particularly under time pressure; ability to make well-substantiated decisions in a short period of time; tolerance to stress (ability to operate effectively, when necessary, in a tireless fashion, for a long period of time, including resistance to drowsiness); team-player attitude, when necessary; swiftness in reaction, when necessary; adequate trust (in humans, technologies, equipment); ability to maintain the optimal level of physiological arousal.

These and other qualities are certainly of different importance in different HITL situations. It is also clear that different individuals possess these qualities in different degrees. Long-term HCF could be time-dependent. To come up with a suitable figure of merit (FOM) for the HCF, one could rank the above and perhaps other qualities on a scale from, say, one to four, and calculate the average FOM for each individual and particular task and/or mission or situation (see, e.g., Table 2, Table 3 and Table 4 below).
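The averaging step can be sketched as follows. This is a minimal illustration, assuming an unweighted arithmetic mean over qualities ranked on the one-to-four scale; the function name `hcf_figure_of_merit` and the sample rankings are hypothetical and are not taken from Tables 2 to 4.

```python
from statistics import mean


def hcf_figure_of_merit(scores, low=1, high=4):
    """Average FOM of HCF qualities, each ranked on a low..high scale (hypothetical helper)."""
    if not scores:
        raise ValueError("at least one quality must be ranked")
    for quality, score in scores.items():
        # Reject rankings outside the agreed scale.
        if not low <= score <= high:
            raise ValueError(f"score for {quality!r} must be in [{low}, {high}]")
    return mean(scores.values())


# Hypothetical rankings for one individual and one task (illustrative only).
pilot_scores = {
    "professional experience and qualifications": 4,
    "level, quality and timeliness of training": 3,
    "ability to operate effectively under time pressure": 4,
    "tolerance to stress": 3,
    "team-player attitude": 2,
}
print(f"HCF figure of merit: {hcf_figure_of_merit(pilot_scores):.2f}")
```

For these illustrative rankings the average is 3.2; the same function can be applied per individual and per task or mission to compare candidates on a common scale.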

Application of the double-exponential probability distribution function (DEPDF): Different PRM approaches can be used in the analysis and optimization of the interaction of the MWL and HCF. When the MWL and HCF characteristics are treated as deterministic, a sufficiently high safety factor can be used. When both MWL and HCF are random variables, the safety factor $SF = \overline{SM}/s_{SM}$ can be determined as the ratio of the mean value $\overline{SM}$ of the random safety margin to its standard deviation $s_{SM}$.
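For the random case, the safety factor can be computed as in the sketch below. It assumes, for illustration only, that the HCF and MWL are independent, normally distributed and expressed in the same (hypothetical) units, and that the safety margin is defined as $SM = HCF - MWL$. Under these assumptions the standard deviation of $SM$ is the root-sum-square of the two standard deviations, and the probability that the demand exceeds the capacity is $\Phi(-SF)$. The function names and numbers are hypothetical.

```python
import math


def safety_factor(hcf_mean, hcf_std, mwl_mean, mwl_std):
    """SF = mean(SM) / std(SM) for SM = HCF - MWL, assuming independent HCF and MWL."""
    sm_mean = hcf_mean - mwl_mean
    # Root-sum-square of the standard deviations (independence assumed).
    sm_std = math.hypot(hcf_std, mwl_std)
    return sm_mean / sm_std


def demand_exceeds_capacity(sf):
    """P(SM < 0) = Phi(-SF), valid only if SM is normally distributed."""
    return 0.5 * math.erfc(sf / math.sqrt(2.0))


sf = safety_factor(hcf_mean=10.0, hcf_std=1.5, mwl_mean=6.0, mwl_std=2.0)
print(f"SF = {sf:.2f}, P(MWL > HCF) = {demand_exceeds_capacity(sf):.4f}")
```

With these hypothetical inputs, $SF = 4/2.5 = 1.6$ and the probability that the MWL exceeds the HCF is about 0.055.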

When the capacity-demand ("strength-stress") interference model is used (Figure 2), the HCF can be viewed as the capacity (strength) and the MWL as the demand (stress), and their overlap area could be considered as the potential (probability) of possible human failure. The capacity and demand distributions can be steady-state or transient, i.e., their mean values can move towards each other as time progresses, and/or the MWL and HCF curves can spread over larger areas. Yet another PRM approach is to use a single distribution that accounts for the roles of the HCF and MWL when these (random) characteristics deviate from (are higher than) their (deterministic) most likely (regular) values. It is this approach that is used in the analysis below. The function

$$P(F,G) = P_0 \exp\left[\left(1 - \frac{G^2}{G_0^2}\right)\exp\left(1 - \frac{F^2}{F_0^2}\right)\right], \qquad F \ge F_0, \quad G \ge G_0,$$

which is a DEPDF, can be used to characterize the likelihood of human non-failure in performing his/her duties when operating a vehicle. Here $P(F,G)$ is the probability of non-failure of the human performance as a function of the off-normal mental workload (MWL) $G$ and the outstanding human capacity factor (HCF) $F$, and $P_0$ is the probability of non-failure of the human performance for the specified (normal) MWL $G = G_0$ and the specified (ordinary) HCF $F = F_0$. The specified (most likely, nominal, normal) MWL and HCF can be established by conducting testing and measurements on a flight simulator. The calculated probability ratios

$$\bar P = \frac{P(F,G)}{P_0}$$

for different MWL ratios $G/G_0$ and HCF ratios $F/F_0$ are shown in Table 5. The following conclusions can be drawn from the table data:

1) At the normal (specified, most likely) MWL level ($G = G_0$) and/or at an extraordinarily (exceptionally) high HCF level ($F \gg F_0$), the probability of human non-failure is close to 100%;

2) The probabilities of human non-failure in off-normal situations are always lower than the probabilities of non-failure in normal (specified) conditions;

3) When the MWL is extraordinarily high, the human will definitely fail, no matter how high his/her HCF is;

4) When the HCF is high, even a significant MWL has a small effect on the probability of non-failure, unless the MWL is exceptionally high. For high HCFs, an increase in the MWL has a much smaller effect on the probability of failure than for relatively low HCFs;

5) The probability of human non-failure decreases with an increase in the MWL, especially at low MWL levels, and increases with an increase in the HCF, especially at low HCF levels. These intuitively more or less obvious conclusions are quantified by the Table 5 data.
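A table of the same kind as Table 5 can be generated with a few lines of code. The sketch assumes that the DEPDF has the double-exponential form $\bar P = P(F,G)/P_0 = \exp[(1 - G^2/G_0^2)\exp(1 - F^2/F_0^2)]$ with $F \ge F_0$ and $G \ge G_0$; the grid of ratios is arbitrary, and the printed numbers are not claimed to reproduce the published Table 5.

```python
import math


def depdf_ratio(f_ratio, g_ratio):
    """P(F,G)/P0 = exp[(1 - (G/G0)^2) * exp(1 - (F/F0)^2)] for F/F0 >= 1, G/G0 >= 1."""
    if f_ratio < 1.0 or g_ratio < 1.0:
        raise ValueError("the DEPDF is defined for F >= F0 and G >= G0")
    return math.exp((1.0 - g_ratio**2) * math.exp(1.0 - f_ratio**2))


hcf_ratios = [1.0, 1.5, 2.0, 2.5, 3.0]   # rows: F/F0
mwl_ratios = [1.0, 2.0, 3.0, 5.0, 10.0]  # columns: G/G0

print("F/F0 \\ G/G0" + "".join(f"{g:>10.1f}" for g in mwl_ratios))
for f in hcf_ratios:
    print(f"{f:>11.1f}" + "".join(f"{depdf_ratio(f, g):>10.4f}" for g in mwl_ratios))
```

Reading down a column, the probability ratio grows with the HCF ratio; reading along a row, it falls with the MWL ratio; and the first column ($G = G_0$) is identically 1, in line with conclusions 1) to 5).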

These data also show that an increase in the HCF ratio $F/F_0$ above 3.0 ("three is a charm" in this case) has only a minor effect on the probability of non-failure. This means, in particular, that the navigator (pilot) does not have to be trained for an unrealistically high MWL, i.e., does not have to be trained by a factor higher than 3.0 compared to a navigator of ordinary capacity (skills, qualification). In other words, a pilot does not have to be a superman to successfully cope with a high MWL, but still has to be trained in such a way that, when there is a need, he/she would be able to cope with an MWL 3.0 times higher than the normal level, and his/her HCF should be 3.0 times higher than what is expected of the same person in ordinary (normal) conditions. Of course, some outstanding individuals (like, e.g., Captain Sullenberger) might have an HCF that corresponds to MWL ratios significantly higher than 3.0.
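The diminishing return beyond an HCF ratio of three can be seen by stepping $F/F_0$ at a fixed MWL ratio $G/G_0 = 3$. The sketch again assumes the double-exponential form $\bar P = \exp[(1 - G^2/G_0^2)\exp(1 - F^2/F_0^2)]$ and is only illustrative.

```python
import math


def depdf_ratio(f_ratio, g_ratio):
    """Assumed DEPDF ratio P/P0 for F/F0 >= 1 and G/G0 >= 1."""
    return math.exp((1.0 - g_ratio**2) * math.exp(1.0 - f_ratio**2))


g_ratio = 3.0  # MWL three times the normal level
previous = None
for f_ratio in (1.0, 2.0, 3.0, 4.0):
    p = depdf_ratio(f_ratio, g_ratio)
    # Report the gain in P/P0 relative to the previous HCF step.
    gain = "" if previous is None else f"  (gain {p - previous:+.4f})"
    print(f"F/F0 = {f_ratio:.0f}: P/P0 = {p:.4f}{gain}")
    previous = p
```

Under this assumed form, going from $F/F_0 = 2$ to 3 raises $P/P_0$ by about 0.33, whereas going from 3 to 4 adds less than 0.003.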

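Let us determine the physics underlying the introduced DEPDF. The MWL derivative of this distribution is

$$\frac{d\bar P}{dG} = -\frac{2G}{G^2 - G_0^2}\,H(\bar P), \qquad \bar P = \frac{P(F,G)}{P_0}, \quad G > G_0,$$

where $H(\bar P) = -\bar P \ln \bar P$ is the entropy of the distribution. When the MWL is significant ($G \gg G_0$), this formula yields

$$\frac{d\bar P}{dG} \approx -\frac{2H(\bar P)}{G}.$$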
This result explains the physical meaning of the distribution in question: the change in the probability of human non-failure (provided that the probability of non-failure in normal conditions is simply 100%, i.e., $P_0 = 1$) with the change in the MWL is, for large MWL levels, proportional to the uncertainty level defined by the entropy of the distribution and inversely proportional to the MWL level. The right-hand side of the obtained formula can be viewed as a kind of coefficient of variation (COV), where the role of the uncertainty level in the numerator is played by the entropy (rather than by the standard deviation, which, as is known, is also, in a way, a measure of uncertainty) and the role of the stress (loading) level in the denominator is played by the MWL (rather than by the mean value of the random characteristic of interest). One could also find

$$\frac{d\bar P}{dF} = \frac{2F}{F_0^2}\,H(\bar P).$$

When the random HCF is equal to its nominal (low-level) value $F = F_0$, this formula yields

$$\frac{d\bar P}{dF} = \frac{2H(\bar P)}{F_0}.$$
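Both derivative formulas can be checked numerically with central differences. The sketch assumes the double-exponential form $\bar P = \exp[(1 - G^2/G_0^2)\exp(1 - F^2/F_0^2)]$ with $H(\bar P) = -\bar P\ln\bar P$, and compares $d\bar P/dG = -2G\,H(\bar P)/(G^2 - G_0^2)$, its large-MWL asymptote $-2H(\bar P)/G$, and $d\bar P/dF = 2H(\bar P)/F_0$ at $F = F_0$ against finite differences. The nominal values $F_0$, $G_0$ and the evaluation points are hypothetical.

```python
import math


def p_bar(f, g, f0, g0):
    """Assumed DEPDF ratio P/P0 = exp[(1 - g^2/g0^2) exp(1 - f^2/f0^2)]."""
    return math.exp((1.0 - (g / g0) ** 2) * math.exp(1.0 - (f / f0) ** 2))


def entropy(p):
    """H(p) = -p ln p."""
    return -p * math.log(p)


def central_difference(func, x, h=1e-6):
    """Second-order numerical derivative of func at x."""
    return (func(x + h) - func(x - h)) / (2.0 * h)


f0, g0 = 2.0, 1.5  # hypothetical nominal HCF and MWL (arbitrary units)

# MWL derivative at a hypothetical off-normal state with G >> G0.
f, g = 2.5 * f0, 8.0 * g0
p = p_bar(f, g, f0, g0)
dg_numeric = central_difference(lambda x: p_bar(f, x, f0, g0), g)
dg_exact = -2.0 * g / (g**2 - g0**2) * entropy(p)
dg_asymptotic = -2.0 * entropy(p) / g

# HCF derivative at the nominal level F = F0 (the expression is analytic,
# so the difference stencil may step slightly below F0).
g_hcf = 1.5 * g0
p_nominal = p_bar(f0, g_hcf, f0, g0)
df_numeric = central_difference(lambda x: p_bar(x, g_hcf, f0, g0), f0)
df_formula = 2.0 * entropy(p_nominal) / f0

print(f"dP/dG: numeric {dg_numeric:.6e}, exact {dg_exact:.6e}, asymptotic {dg_asymptotic:.6e}")
print(f"dP/dF at F0: numeric {df_numeric:.6e}, formula {df_formula:.6e}")
```

For the chosen values the asymptotic MWL derivative differs from the exact one by the factor $(G^2 - G_0^2)/G^2 = 63/64 \approx 0.98$, so the large-MWL approximation is already adequate at $G = 8G_0$.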
The formula for $d\bar P/dF$ at $F = F_0$ can also be used to interpret the physics underlying the introduced DEPDF: the change in the probability of human non-failure with the change in the HCF at its nominal (normal) level is proportional to the entropy of this distribution and inversely proportional to the nominal HCF. Solving the equation for the probability with respect to the HCF ratio, we obtain

$$\frac{F}{F_0} = \sqrt{1 - \ln\left(\frac{-\ln \bar P}{G^2/G_0^2 - 1}\right)}, \qquad G > G_0.$$

This relationship is tabulated in Table 6. The following conclusions can be drawn from the computed data:

1) The HCF level needed to cope with an elevated MWL increases rather slowly with an increase in the probability of non-failure, especially for high MWL levels, unless this probability is very low (below 0.1) or very high (above 0.9);

2) In the region $0.1 \le \bar P \le 0.9$, the required HCF level increases with an increase in the MWL level, but this increase is rather moderate, especially for high MWL levels;

3) Even for significant MWLs that exceed the normal MWL by orders of magnitude, the HCF does not have to be very much higher than that of a person of ordinary HCF level. When the MWL ratio is as high as 100, an HCF ratio of about 4 is sufficient to assure a probability of non-failure as high as 0.999.
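Solving the assumed DEPDF $\bar P = \exp[(1 - G^2/G_0^2)\exp(1 - F^2/F_0^2)]$ for the HCF ratio gives $F/F_0 = \sqrt{1 - \ln[-\ln\bar P/(G^2/G_0^2 - 1)]}$, which the sketch below evaluates. The function name `required_hcf_ratio` is hypothetical; when the target probability is already met at $F = F_0$, the function returns 1, since the DEPDF is defined only for $F \ge F_0$.

```python
import math


def depdf_ratio(f_ratio, g_ratio):
    """Assumed DEPDF ratio P/P0 for F/F0 >= 1 and G/G0 >= 1."""
    return math.exp((1.0 - g_ratio**2) * math.exp(1.0 - f_ratio**2))


def required_hcf_ratio(p_bar, g_ratio):
    """HCF ratio F/F0 needed to reach P/P0 = p_bar at MWL ratio G/G0 = g_ratio."""
    if not 0.0 < p_bar < 1.0:
        raise ValueError("p_bar must lie strictly between 0 and 1")
    if g_ratio <= 1.0:
        raise ValueError("g_ratio must exceed 1 (off-normal MWL)")
    inner = -math.log(p_bar) / (g_ratio**2 - 1.0)
    if inner >= 1.0:
        # Even an ordinary HCF (F = F0) already meets the target.
        return 1.0
    return math.sqrt(1.0 - math.log(inner))


for g_ratio in (2.0, 10.0, 100.0):
    row = [required_hcf_ratio(p, g_ratio) for p in (0.9, 0.99, 0.999)]
    print(f"G/G0 = {g_ratio:6.1f}: " + ", ".join(f"{r:.3f}" for r in row))

r = required_hcf_ratio(0.999, 100.0)
print(f"round trip: P/P0 = {depdf_ratio(r, 100.0):.6f}")
```

Under this assumed form, $G/G_0 = 100$ and $\bar P = 0.999$ give $F/F_0 \approx 4.14$, i.e., roughly the factor of 4 quoted in conclusion 3), and substituting the result back into the DEPDF recovers the target probability.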

Approach based on the convolution technique: Operation time vs. available time

The above time-independent DEPDF-based approach enables one to compare, on a probabilistic basis, the relative roles of the MWL and HCF in a particular off-normal HF-related situation. The role of time (e.g., swiftness in reaction) is accounted for indirectly, through the HCF level. In the analysis that follows, we assess the likelihood of safe landing by considering the roles of different times directly, i.e., by comparing the operation time, which consists of the decision-making time and the actual landing time, with the "available" landing time (i.e., the time from the moment when an emergency was determined to the moment of landing). In particular, we address the ability of the pilot to anticipate and to make a substantiated and valid decision in a short period of time (as Captain Sullenberger has put it, "We are going to be in the Hudson"). It is assumed, for the sake of simplicity, that both the decision-making and the landing times can be approximated by the Rayleigh law, while the available time, considering the glider conditions of the aircraft in the case of the "miracle-on-the-Hudson" flight, follows the normal law with a high ratio of the mean value to the standard deviation. Safe landing could be expected if the probability that it occurs within the "available" landing time is sufficiently high. The formalism of such a model is similar to the one used in the helicopter-landing-ship (HLS) problem [127]. If the (random) sum, $T = t + \theta$, of the (random) decision-making time, $t$, and the (random) time, $\theta$, needed to actually land the aircraft is lower, with a high enough probability, than the (random) duration, $L$, of the available time, then safe landing becomes possible (in the HLS problem, $L$ was the random duration of the lull in the sea condition).
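The probability that the operation time $T = t + \theta$ is shorter than the available time $L$ can also be estimated by Monte Carlo sampling, used here as a convenient cross-check rather than as the convolution technique itself. The sketch assumes Rayleigh laws parameterized by their most likely (modal) values $t_0$ and $\theta_0$, a normal law for $L$, and mutual independence of the three times; the function names and all numerical inputs are hypothetical and do not represent data from the "miracle-on-the-Hudson" flight.

```python
import math
import random


def rayleigh_sample(rng, mode):
    """Inverse-transform sample of a Rayleigh variable with the given modal value."""
    # 1 - U lies in (0, 1], so the logarithm is always defined.
    return mode * math.sqrt(-2.0 * math.log(1.0 - rng.random()))


def safe_landing_probability(t0, theta0, l_mean, l_std, n=100_000, seed=1):
    """Monte Carlo estimate of P(t + theta < L) under the stated assumptions."""
    rng = random.Random(seed)
    successes = 0
    for _ in range(n):
        operation_time = rayleigh_sample(rng, t0) + rayleigh_sample(rng, theta0)
        # Normal law for L; negative draws are negligible when l_mean >> l_std.
        available_time = rng.gauss(l_mean, l_std)
        if operation_time < available_time:
            successes += 1
    return successes / n


# Hypothetical inputs in seconds (illustrative only, not flight data).
p = safe_landing_probability(t0=30.0, theta0=90.0, l_mean=200.0, l_std=20.0)
print(f"Estimated probability of safe landing: {p:.3f}")
```

Setting $\theta_0 = 0$ and a zero standard deviation of $L$ reduces the estimate to the Rayleigh cumulative distribution $1 - \exp[-L^2/(2t_0^2)]$, which is a simple way to validate the sampler before using it for the full problem.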

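In the analysis that follows, the Rayleigh law

$$f_t(t) = \frac{t}{t_0^2}\exp\left(-\frac{t^2}{2t_0^2}\right), \qquad f_\theta(\theta) = \frac{\theta}{\theta_0^2}\exp\left(-\frac{\theta^2}{2\theta_0^2}\right),$$

where $t_0$ and $\theta_0$ are the most likely (modal) values of the decision-making and landing times, is used as a suitable approximation for the random times $t$ and $\theta$ of decision making and actual landing, and the normal law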