The Outcome of an Engineering Undertaking of Importance Must be Quantified to Assure its Success and Safety: Review

Abstract

The outcome of a crucial engineering undertaking must be quantified at the design/planning stage to assure its success and safety, and since the probability of an operational failure is, in effect, never zero, such a quantification should be done on a probabilistic basis. Some recently published work on the probabilistic predictive modeling (PPM) and probabilistic design for reliability (PDfR) of aerospace electronic and photonic (E&P) products, including human-in-the-loop (HITL) problems and challenges, is briefly reviewed. The effort was lately "brought down to earth" to model possible collisions in automated driving (AD). In addition, some problems and tasks beyond the E&P and vehicular engineering field are also addressed, with an objective to show how the developed methods and approaches can be effectively and fruitfully employed whenever there is a need to quantify the reliability of an engineering technology with consideration of human performance.

Accordingly, the following nine problems are addressed in this review, with an objective to show how the outcome of a critical engineering endeavor can be predicted using the PPM approach and the PDfR concept: 1) Accelerated testing in E&P engineering: significance, attributes and challenges; 2) Failure-oriented accelerated testing (FOAT), its objective and role; 3) PPM approach and PDfR concept, their roles and applications; 4) Kinetic multi-parametric Boltzmann-Arrhenius-Zhurkov (BAZ) equation as the "heart" of the PDfR concept; 5) Burn-in-testing (BIT) of E&P products with an attempt to shed light on the basic "to BIT or not to BIT" question; 6) Adequate trust is an important constituent of the human-capacity-factor (HCF) affecting the outcome of a mission or an extraordinary situation; 7) PPM of an emergency-stopping situation in automated driving (AD) or on a railroad (RR); 8) Quantifying the astronaut's/pilot's/driver's/machinist's state of health (SoH) and its effect on his/her performance; 9) Survivability of species in different habitats. The objective of the latter effort is to demonstrate that the developed PPM approaches and methodologies, and particularly those using the multiparametric BAZ equation, could be effectively employed well beyond the vehicular engineering area.

The general concepts are illustrated by numerical examples. All the considered PPM problems were treated using analytical ("mathematical") modeling. The attributes of such modeling, the background of the multiparametric BAZ equation and the ten major principles ("the ten commandments") of the PDfR concept are addressed in the appendices.

Acronyms

AD: Automated Driving; ASD: Anticipated Sight Distance; BAZ: Boltzmann-Arrhenius-Zhurkov (equation); BGA: Ball Grid Array; BIT: Burn-in Testing; BTC: Bathtub Curve; CTE: Coefficient of Thermal Expansion; DEPDF: Double-Exponential Probability Distribution Function; DfR: Design for Reliability; E&P: Electronic and Photonic; FOAT: Failure-Oriented-Accelerated-Testing; FoM: Figures of Merit; HALT: Highly-Accelerated-Life-Testing; HCF: Human Capacity Factor; HE: Human Error; HF: Human Factor; HITL: Human-in-the-Loop; IMP: Infant Mortality Portion (of the BTC); MTTF: Mean-Time-to-Failure; MWL: Mental Workload; NIH: "Not invented here"; PAM: Probabilistic Analytical Modeling; PDF: Probability Distribution Function; PDfR: Probabilistic Design for Reliability; PHM: Prognostics and Health Monitoring; PPM: Probabilistic Predictive Modeling; PRA: Probabilistic Risk Analysis; QT: Qualification Testing; RR: Railroad; RUL: Remaining Useful Life; SAE: Society of Automotive Engineers; SF: Safety Factor; SJI: Solder Joint Interconnections; SoH: State of Health; SFR: Statistical Failure Rate; TTF: Time-to-Failure

Introduction

Quo vadis?

St. Paul

Progress in vehicular safety is achieved today mostly through various, predominantly experimental and a posteriori statistical, ways to improve the hardware and software of the instrumentation and equipment, implement better ergonomics, and introduce and advance other more or less well-established efforts of experimental reliability engineering and traditional human psychology that directly affect the product's reliability and human performance. There exists, however, a significant potential for the reduction of accidents and casualties in aerospace, maritime, automotive and railroad engineering through a better understanding of the role that various uncertainties play in the planner's and operator's worlds of work, when never failure-free navigation equipment and instrumentation, the never one-hundred-percent predictable response of the object of control (an air- or spacecraft, a car, a train, or an ocean-going vessel), an uncertain-and-often-harsh environment and never-perfect human performance contribute jointly to the outcome of a vehicular mission or an extraordinary situation. By employing quantifiable and measurable ways of assessing the role and significance of critical uncertainties, and by treating the HITL as a part, often the most crucial part, of a complex man-instrumentation-vehicle-environment-navigation system and its critical interfaces, one could dramatically improve the state of the art in assuring the operational safety of a vehicle and its passengers and crew. This can be done by predicting, quantifying and, if necessary and possible, even specifying an adequate (typically high enough, but different for different vehicles, missions and circumstances) probability of success and safety of a mission or an off-normal situation [1-19].

Nothing and nobody is perfect, and the difference between a highly reliable technology, object, product, performance or mission and an insufficiently reliable one is "merely" in the levels of their never-zero probability of failure. Application of the PPM approach and the PDfR concept [20-31] provides a natural and effective means for the reduction of vehicular casualties. This approach, as has been indicated, can also be applied beyond the vehicular field, in devices whose operational reliability is critical, such as, e.g., military, long-haul communications systems or medical devices [32]. When success and safety of a critical undertaking are imperative, the ability to predict and quantify its outcome is paramount. The application of the PDfR concept can dramatically improve the state of the art in reliability and quality engineering by turning the art of creating reliable products and assuring adequate human performance into a well-substantiated and "reliable" science. Tversky and Kahneman [33] (Kahneman received the 2002 Nobel Memorial Prize in Economics) were, perhaps, the first to indicate the importance of considering the role of uncertainties in decision making and, particularly, of analyzing the role of cognitive biases that affect decision making in life and work. Since, however, these investigators, although outstanding, were traditional human psychologists, no quantitative, not to mention probabilistic, assessments were suggested.

It should be pointed out that while the traditional statistical human-factor-oriented approaches are based mostly on experimentation followed by statistical analyses, an important feature of the PDfR concept is that it is based upon, and starts with, a physically meaningful and flexible predictive model (such as the BAZ one) geared to the appropriate FOAT [34-37]. Statistics and/or experimentation can be applied afterwards, to establish the important numerical characteristics of the selected model (such as, say, the mean value and the standard deviation in a normal distribution) and/or to confirm the suitability of a particular model for the application of interest. The highly focused and highly cost-effective FOAT, the "heart" of the PDfR concept, is aimed, first of all, at understanding and/or confirming the anticipated physics of failure (see Table 1 below). The traditional, about forty-year-old, highly accelerated life testing (HALT), although it sheds important light on the reliability of the E&P product of interest (bad things would not last for forty years, would they?), does not quantify reliability and, because of that, can hardly improve our understanding of the device's and/or package's physics of failure. FOAT, geared to a physically meaningful PDfR model, can be used as an appropriate extension and modification of HALT. An important attribute of the PPM/PDfR/FOAT-based approach is that if the predicted probability of non-failure, based on the applied PDfR methodology and FOAT effort, is, for whatever reason, not acceptable, then an appropriate sensitivity analysis (SA) using the already developed and available algorithms and calculation procedures can be effectively conducted to improve the situation without resorting to additional expensive and time-consuming testing.

Such a cost-effective and insightful approach is applicable, with the appropriate modifications and generalizations, if necessary, to numerous situations, not necessarily in the vehicular domain, in which a human-in-control encounters an uncertain environment or a hazardous situation. The suggested quantification-based HITL approach is also applicable when there is an incentive to quantify a human's qualifications and/or when there is a need to assess, and possibly improve, human performance and the human's possible role in a particular engagement.

An important additional consideration in favor of quantifying reliability has to do with the always desirable optimization. The best engineering product is, in effect, as is known, the best compromise between the requirements for its reliability, cost effectiveness and time-to-market (to completion). The latter two requirements are always quantified. No effective optimization could be achieved, of course, if reliability is not quantified as well. In HITL situations, such an optimization should be done considering the role of the human factor.

In the review that follows, some important problems and tasks associated with assuring the success and safety of vehicular and other engineering undertakings are addressed, with an objective to show what could and should be done differently when high reliability is imperative and should be quantified to assure its adequate level and cost effectiveness. A simple example of how to optimize reliability [38] indicates that optimization of reliability can be achieved by optimizing the product's availability, i.e., the probability that the product is sound, and therefore available to the user, when needed. When encountering a particular reliability problem at the design, fabrication, testing, or operation stage of the product's life, and considering the use of predictive modeling to assess the seriousness and the likely consequences of its detected failure, one has to choose whether a statistical, or a physics-of-failure-based, or a suitable combination of these two major modeling tools should be employed to address the problem of interest and to decide on how to proceed.

A three-step concept (TSC) is suggested as a possible way to go in such a situation [39,40]. The classical statistical Bayes formula can be used at the first step as a technical diagnostics tool, with the objective to identify, on a probabilistic basis, the faulty (malfunctioning) device(s) from the obtained signals ("symptoms of faults"). The multi-parametric BAZ model can be employed at the TSC's second step to assess the remaining useful life (RUL) of the faulty device(s). If the assessed RUL is still long enough, no action might be needed, but if it is not, corrective restoration action becomes necessary. In any event, after the first two steps are carried out, the device is put back into operation, provided that the assessed probability of its continuing failure-free operation is found to be satisfactory. If failure nonetheless occurs, the third step should be undertaken to update the reliability estimate. The statistical beta-distribution, in which the probability of failure itself is treated as a random variable, is suggested for use at this step. The suggested concept is illustrated by a numerical example geared to the use of the prognostics-and-health-monitoring (PHM) effort in actual operation, such as, e.g., an en-route flight mission.
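The first and third TSC steps can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the procedure of [39,40]: the prior fault probabilities, symptom likelihoods and mission counts are hypothetical, and the step-3 update assumes the standard conjugate Beta-binomial scheme (a Beta(a, b) prior on the probability of failure updated by the observed failures). The BAZ-based RUL assessment of step 2 is not shown.

```python
def bayes_posterior(priors, likelihoods):
    """TSC step 1 (sketch): Bayes formula for the probability that each candidate
    device is the faulty one, given the observed symptom."""
    joint = [p * l for p, l in zip(priors, likelihoods)]
    total = sum(joint)  # total probability of observing the symptom
    if total == 0.0:
        raise ValueError("the observed symptom has zero probability under this model")
    return [j / total for j in joint]


def beta_update(a, b, failures, trials):
    """TSC step 3 (assumed conjugate scheme): a Beta(a, b) prior on the probability
    of failure becomes Beta(a + failures, b + trials - failures)."""
    if not 0 <= failures <= trials:
        raise ValueError("failures must lie between 0 and trials")
    return a + failures, b + trials - failures


def beta_mean(a, b):
    """Mean of Beta(a, b), i.e. the updated expected probability of failure."""
    return a / (a + b)


# Hypothetical inputs, for illustration only
priors = [0.5, 0.3, 0.2]       # prior probabilities that device A, B or C is at fault
likelihoods = [0.1, 0.6, 0.3]  # probability of the observed symptom if that device is at fault
posterior = bayes_posterior(priors, likelihoods)
print([round(p, 3) for p in posterior])  # [0.172, 0.621, 0.207]

a, b = beta_update(1.0, 99.0, failures=1, trials=20)  # prior mean 0.01, one failure in 20 missions
print(round(beta_mean(a, b), 4))  # 0.0167
```

Although device A has the largest prior, the symptom is far more likely under a fault in device B, which therefore becomes the most probable culprit.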

The major principles of an analytical modeling approach, the background and the attributes of the BAZ equation [41-44] and the major principles of the PDfR concept are summarized in Appendix A, Appendix B and Appendix C (the latter - in the form of "the ten commandments"), respectively.

Review

Accelerated testing in electronics and photonics: Significance, attributes and challenges

"Golden rule of an experiment: The duration of an experiment should not exceed the lifetime of the experimentalist".

Unknown experimental physicist

Accelerated testing is both a must and a powerful tool in E&P manufacturing. This is because getting maximum reliability information in minimum time and at minimum cost is the major goal of an E&P manufacturer, but also because it is impractical to wait for failures when the lifetime of a typical present-day E&P product manufactured using the existing "best practices" is hundreds of thousands of hours, regardless of whether or not this lifetime is predicted with sufficient accuracy. Different types of accelerated tests in today's E&P engineering are summarized in Table 1.

A typical example of product development testing (PDT) is shear-off testing, conducted when there is a need to determine the most feasible bonding material and its thickness, and/or to assess its bonding strength, and/or to evaluate the shear modulus of the material. HALT is currently widely employed, in different modifications, with an intent to determine the product's reliability weaknesses, assess its reliability limits, and ruggedize the product by applying elevated stresses (not necessarily mechanical and not necessarily limited to the anticipated field stresses) that could cause field failures, and to provide supposedly large (although, actually, unknown) safety margins over the expected in-use conditions. HALT often involves step-wise stressing, rapid thermal transitions, and other means that enable testing to be carried out in a time- and cost-effective fashion. HALT is a "discovery" test. It is not a qualification test (QT), though, i.e., not a "pass/fail" test. It is the QT that is the major means for making a viable E&P device or package into a justifiably marketable product. While many HALT aspects are different for different manufacturers and often kept as proprietary information, QTs and standards are the same for the given industry and product type. Burn-in testing (BIT) is a post-manufacturing test. Mass fabrication, no matter how good the design effort and the fabrication technologies are, generates, in addition to desirable and relatively robust ("strong") products, also some undesirable and unreliable ("weak") devices ("freaks"), which, if shipped to the customer, will most likely fail in the field. BIT is supposed to detect and eliminate such "freaks". As a result, the final bathtub curve (BTC) of a product that underwent BIT is not expected to contain the infant mortality portion (IMP).
In today's practice BIT, a destructive test for the "freaks" and a non-destructive one for the healthy devices, is often run within the framework of, and concurrently with, HALT.

Are today's practices based on the above accelerated testing adequate? A funny, but quite practical, definition of a sufficiently robust E&P product is that "reliability it is when the customer comes back, not the product". It is well known, however, that E&P products that underwent HALT, passed the existing QTs and survived BIT often fail prematurely in the field. So, what could and should be done differently?

Failure-oriented-accelerated-testing (FOAT), its objective and role

"Say not, "I have found the truth," but rather, "I have found a truth."

Kahlil Gibran, Lebanese artist, poet and writer

One crucial shortcoming of today's E&P reliability assurance practices is that they are seldom based on a good understanding of the underlying reliability physics of the particular E&P product and, most importantly, although they claim the product's lifetime, they do not offer a trustworthy effort to quantify it. A possible way to go is to design and conduct FOAT aimed, first of all, at understanding and confirming the anticipated physics of failure, but also at using the FOAT data to predict the operational reliability of the product (last column in Table 1). To do that, FOAT should be geared to an adequate, simple, easy-to-use and physically meaningful predictive model. The BAZ model (see Appendix B and section 3 below) can be employed in this capacity.

Predictive modeling has proven for many years to be a highly useful and highly time- and cost-effective means for understanding the physics of failure in reliability engineering, as well as for designing the most effective accelerated tests. It has been recently suggested that a highly focused (on the most vulnerable material and/or structural element of the design, such as, e.g., solder joint interconnections) and, to an extent possible, highly cost-effective FOAT be considered as the experimental basis, the "heart", of the new fruitful, flexible and physically meaningful design-for-reliability concept, PDfR (see next section for details). FOAT should be conducted in addition to, and, in some cases, even instead of, HALT, especially when developing new technologies and new products, whose operational reliability is, as a rule, unclear, for which no experience has been accumulated yet, and for which neither best practices nor suitable HALT methodologies have been developed. Quantitative estimates based on FOAT and subsequent PPM might not be perfect, at least at the beginning, but it is still better to pursue this effort than to turn a blind eye to the never-zero probability of the product's failure and to the fact that the reliability of an E&P product cannot be assured if this probability is not assessed and made adequate for the given product. If one sets out to understand the physics of failure in order to create, in accordance with the "principle of practical confidence", a failure-free product, conducting FOAT is imperative to confirm the usage of a particular predictive model, such as the BAZ equation, to confirm the physics of failure, and to establish the numerical characteristics (activation energy, time constant, sensitivity factors, etc.) of the selected model.

FOAT could be viewed as an extension of HALT, but while HALT is a "black box", i.e., a methodology that can be perceived in terms of its inputs and outputs without clear knowledge of the underlying physics and the likelihood of failure, FOAT is a "transparent box", whose objective is to confirm the use of a particular reliability model. While HALT does not measure (does not quantify) reliability, FOAT does. The major assumption is, of course, that this model is valid for both accelerated and actual operation conditions. HALT, which tries to "kill many unknown birds with one (also not very well known) stone", has, however, demonstrated over the years its ability to improve robustness through a "test-fail-fix" process, in which the applied stresses (stimuli) are somewhat above the specified operating limits. This "somewhat above" is based, however, on intuition rather than on calculation.

There is a general, and, to a great extent, justified, perception that HALT is able to precipitate and identify failures of different origins. HALT can therefore be used for "rough tuning" of a product's reliability, and FOAT could be employed when "fine tuning" is needed, i.e., when there is a need to quantify, assure and even specify the operational reliability of a product. The FOAT-based approach could be viewed as a quantified and reliability-physics-oriented HALT. The FOAT approach should be geared to a particular technology and application, with consideration of the most likely stressors. FOAT and HALT could be carried out separately, or might be partially combined in a particular AT effort. New products present natural reliability concerns, as well as significant challenges at all the stages of their design, manufacture and use. An appropriate combination of HALT and FOAT efforts could be especially useful for ruggedizing and quantifying the reliability of such products. It is always necessary to correctly identify the expected failure modes and mechanisms, and to establish the appropriate stress limits of HALTs and FOATs, with an objective to prevent "shifts" in the dominant failure mechanisms. There are many ways in which this could be done. E.g., the test specimens could be mechanically pre-stressed, so that the temperature cycling could be carried out in a narrower range of temperatures [45]. But a better way seems to be the replacement of temperature cycling with a more cost-effective, less time-consuming and, most importantly, more physically meaningful accelerated test, such as a low-temperature/random-vibrations bias (see section 4.3).

PPM approach and PDfR concept, their roles and applications

"A pinch of probability is worth a pound of perhaps."

James G. Thurber, American writer and cartoonist

Design for reliability (DfR) is, as is known, a set of approaches, methods and best practices that are supposed to be used at the design stage of a product to minimize the risk that the fabricated product might not meet the reliability objectives and customer expectations. When a deterministic approach is used, the safety factor (SF) is defined as the ratio of the capacity ("strength") C of the product to the demand ("stress") D. When the PDfR approach is considered, the SF can be introduced as the ratio of the mean value $\bar{\Psi}$ of the safety margin SM = Ψ = C - D to its standard deviation $\hat{s}_{\Psi}$, i.e., $SF = \bar{\Psi}/\hat{s}_{\Psi}$. In this analysis, having in mind the application of the BAZ equation, the probability P of non-failure is used as the suitable measure of the product's reliability. Here are several simple practical PDfR examples.
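If, as an additional assumption made here only for illustration, C and D are independent and normally distributed, the safety margin Ψ is normal as well, and the probability of non-failure is P = P(Ψ > 0) = Φ(SF), where Φ is the standard normal distribution function. A minimal Python sketch with hypothetical capacity and demand statistics:

```python
import math
from statistics import NormalDist


def probabilistic_safety_factor(c_mean, c_std, d_mean, d_std):
    """Return (SF, P) for independent, normally distributed capacity C and demand D.

    SF is the mean of the safety margin Psi = C - D divided by its standard
    deviation; P = P(Psi > 0) is the probability of non-failure."""
    psi_mean = c_mean - d_mean
    psi_std = math.hypot(c_std, d_std)  # sqrt(c_std**2 + d_std**2) for independent C, D
    sf = psi_mean / psi_std
    return sf, NormalDist().cdf(sf)


# Hypothetical capacity and demand statistics (same arbitrary stress units)
sf, p = probabilistic_safety_factor(c_mean=100.0, c_std=8.0, d_mean=70.0, d_std=6.0)
print(f"SF = {sf:.2f}, P = {p:.5f}")  # SF = 3.00, P = 0.99865
```

A deterministic SF of 100/70 ≈ 1.43 says nothing about the likelihood of failure, whereas the probabilistic SF of 3 translates directly into a probability of non-failure.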

Reliable seal glass bond in a ceramic package design: AT&T ceramic packages fabricated at its Allentown (former "Western Electric") facility in the mid-nineties experienced numerous failures during accelerated tests. It was determined that this happened because the seal/solder glass that bonded two ceramic parts had a higher coefficient of thermal expansion (CTE) than the ceramic lid and substrate, and therefore, when the packages were cooled down from the high manufacturing temperature of about 800-900 ℃ to room temperature, all the packages cracked. To design a reliable seal we had not only to replace the existing seal glass with a glass material that would have a lower CTE than the ceramics, but, in addition to that, we had to make sure that the interfacial shearing stresses at the ceramics/glass interfaces subjected to compression at low temperatures would be low enough not to crack the seal glass material. Treating the CTEs of the brittle ceramic and brittle glass materials as normally distributed random variables, the following PDfR methodology was developed and applied. No failures were observed in the packages designed and manufactured based on the developed methodology. Here is how a reliable seal glass material was selected for a ceramic IC package using this PDfR approach [46].

The maximum interfacial shearing stress in a thin solder glass layer in a ceramic package design can be computed as $\tau_{max} = k h_g \sigma_{max}$. Here $k = \sqrt{\lambda/\kappa}$ is the parameter of the interfacial shearing stress, $\lambda$ is the axial compliance of the assembly, $\kappa$ is its interfacial compliance, $G_c$ and $G_g$ are the shear moduli of the ceramics and glass materials, $h_g$ is the thickness of the glass layer, $\sigma_{max} = \frac{\Delta\alpha\,\Delta t}{\lambda h_g}$ is the maximum normal stress in the mid-portion of the glass layer, ∆t is the change in temperature from the soldering temperature to the low (room or testing) temperature, $\Delta\alpha = \alpha_c - \alpha_g$ is the difference in the effective CTEs of the ceramics and the glass, $\alpha_{c,g} = \frac{1}{\Delta t}\int_t^{t_0}\alpha_{c,g}(t')\,dt'$ are these coefficients for the given temperature t, t0 is the annealing (zero stress, setup) temperature, and αc,g(t) are the temperature-dependent CTEs of the materials in question. In an approximate analysis one could assume that the axial compliance λ of the assembly is due to the glass only, so that $\lambda \approx \frac{1-\nu_g}{E_g h_g}$, and therefore the maximum normal stress in the solder glass can be evaluated as $\sigma_{max} \approx \frac{E_g}{1-\nu_g}\,\Delta\alpha\,\Delta t$. While the geometric characteristics of the assembly, the change in temperature and the elastic constants of the materials can be determined with high accuracy, this is not the case for the difference in the CTEs of the brittle materials of the glass and the ceramics. In addition, because of the obvious incentive to minimize this difference, such a mismatch is characterized by a small difference of close and appreciable numbers. This contributes to the uncertainty of the problem and makes PPM necessary. Treating the CTEs of the two materials as normally distributed random variables, we evaluate the probability P that the thermal interfacial shearing stress is compressive (negative) and, in addition, does not exceed a certain allowable level [9]. This stress is proportional to the normal stress in the glass layer, which is, in its turn, proportional to the difference between the CTEs of the ceramics and the glass materials. One wants to make sure that the requirement

$$0 \le \Psi = \alpha_c - \alpha_g \le \Psi_* = \frac{(1-\nu_g)\,\sigma_*}{E_g\,\Delta t},$$

where $\sigma_*$ is the allowable normal stress in the glass, takes place with a high probability. For normally distributed and independent random variables αc and αg, the variable Ψ is also normally distributed, with the mean value $\bar{\Psi} = \bar{\alpha}_c - \bar{\alpha}_g$ and the standard deviation $\sqrt{D_\Psi} = \sqrt{D_c + D_g}$, where $\bar{\alpha}_c$ and $\bar{\alpha}_g$ are the mean values of the materials' CTEs, and $D_c$ and $D_g$ are their variances. The probability that the above condition for the Ψ value takes place is

$$P = \frac{1}{2}\left[\Phi(\gamma_* - \gamma) + \Phi(\gamma)\right],$$

where

$$\Phi(x) = \frac{2}{\sqrt{\pi}}\int_0^x e^{-z^2}\,dz$$

is the error (Laplace) function, $\gamma = \bar{\Psi}/\sqrt{2D_\Psi}$ is the SF for the CTE difference and $\gamma_* = \Psi_*/\sqrt{2D_\Psi}$ is the SF for the acceptable level of the allowable stress.
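The probability P = ½[erf(γ* - γ) + erf(γ)], with Ψ* = (1 - νg)σ*/(Eg Δt), is straightforward to evaluate. The Python sketch below uses the solder-glass constants quoted in the example that follows (Eg = 0.66 × 10^6 kg/cm², νg = 0.27, Δt = 550 ℃); the mean CTEs, their standard deviations and the allowable stress σ* are hypothetical placeholders, not the values used in [46].

```python
import math


def seal_glass_reliability(alpha_c, alpha_g, std_c, std_g, sigma_allow, e_g, nu_g, delta_t):
    """P(0 <= Psi <= Psi_*) for Psi = alpha_c - alpha_g, with independent normal CTEs."""
    psi_mean = alpha_c - alpha_g                              # mean CTE mismatch
    d_psi = std_c ** 2 + std_g ** 2                           # variance D_Psi = D_c + D_g
    psi_star = (1.0 - nu_g) * sigma_allow / (e_g * delta_t)   # allowable mismatch Psi_*
    scale = math.sqrt(2.0 * d_psi)
    gamma = psi_mean / scale          # SF for the CTE difference
    gamma_star = psi_star / scale     # SF for the allowable stress level
    return 0.5 * (math.erf(gamma_star - gamma) + math.erf(gamma))


# E_g (kg/cm^2), nu_g and delta_t (deg C) as in the text; the other inputs are hypothetical
p = seal_glass_reliability(alpha_c=7.0e-6, alpha_g=6.7e-6, std_c=0.1e-6, std_g=0.1e-6,
                           sigma_allow=500.0, e_g=0.66e6, nu_g=0.27, delta_t=550.0)
print(f"P = {p:.4f}")
```

With these placeholder inputs the upper (stress) limit is almost never violated, so P is governed mainly by the chance that the glass CTE exceeds the ceramic CTE, i.e. by the term erf(γ).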

If, e.g., the elastic constants of the solder glass are $E_g = 0.66 \times 10^6$ kg/cm² and $\nu_g = 0.27$, the sealing (fabrication) temperature is 485 ℃, and the lowest (testing) temperature is -65 ℃ (so that ∆t = 550 ℃), the computed effective CTEs at this temperature are

and its calculated mean value

Note that if the standard deviations of the materials CTEs were only

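Application of extreme value distribution: An E&P device is operated in temperature cycling conditions. Let us assume that the random amplitude of the induced stress, when a single cycle of the random amplitude is applied, is distributed in accordance with the Rayleigh law, so that the probability density function of the random amplitude of the induced thermal stress is

$$f(x) = \frac{x}{D_x}\exp\left(-\frac{x^2}{2D_x}\right), \qquad x \ge 0.$$

Here D_x is the variance of the (normally distributed) process whose amplitudes follow this law, so that $\sqrt{D_x}$ is the most likely amplitude in a single cycle. Let us assess the most likely extreme value of the stress amplitude for a large number n of cycles.

The probability distribution density function g(y_n) and the probability distribution function G(y_n) for the extreme value Y_n of the stress amplitude are expressed as follows [28,47]:

$$g(y_n) = \frac{dG(y_n)}{dy_n}$$

and

$$G(y_n) = [F(y_n)]^n, \qquad F(y_n) = \int_0^{y_n} f(x)\,dx = 1 - \exp\left(-\frac{y_n^2}{2D_x}\right),$$

respectively. Introducing the expression for the function G(y_n) into the expression for the function g(y_n), the following formula can be obtained for the probability density distribution function g(y_n):

$$g(y_n) = n f(y_n)[F(y_n)]^{n-1} = \frac{n}{\sqrt{D_x}}\,\zeta_n e^{-\zeta_n^2/2}\left(1 - e^{-\zeta_n^2/2}\right)^{n-1},$$

where $\zeta_n = y_n/\sqrt{D_x}$ is the dimensionless extreme amplitude. Its most likely value follows from the condition $dg/d\zeta_n = 0$, which yields

$$\frac{(n-1)\,e^{-\zeta_n^2/2}}{1 - e^{-\zeta_n^2/2}} = 1 - \frac{1}{\zeta_n^2}.$$

If the number n is large, the second term in the right-hand part of this equation is small compared to the first term and can be omitted. Then we obtain:

$$\zeta_n = \frac{y_n}{\sqrt{D_x}} = \sqrt{2\ln n}.$$

As evident from this result, the ratio of the most likely extreme response y_n, after n cycles are applied, to the most likely response $\sqrt{D_x}$, when a single cycle is applied, is $\sqrt{2\ln n}$.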
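The large-n estimate can be checked numerically. The Python sketch below, an illustration that is not part of the cited derivation, finds the mode of g(y_n) = n f(y_n)[F(y_n)]^(n-1) for the Rayleigh parent law, in terms of ζ_n = y_n/√D_x, by bisection on d(ln g)/dζ_n = 0, and compares it with √(2 ln n). For n = 1 the root is ζ = 1, the mode of the Rayleigh law itself.

```python
import math


def dlng_dzeta(zeta, n):
    """d(ln g)/d(zeta) for the largest of n Rayleigh amplitudes, zeta = y_n / sqrt(D_x)."""
    x = 0.5 * zeta * zeta
    # (n - 1) * zeta * e^{-x} / (1 - e^{-x}); expm1 keeps 1 - e^{-x} accurate for small x
    tail = (n - 1) * zeta * math.exp(-x) / (-math.expm1(-x))
    return 1.0 / zeta - zeta + tail


def most_likely_extreme(n, lo=0.5, hi=20.0, tol=1e-12):
    """Mode of g(zeta) by bisection: the derivative is positive at lo and negative at hi."""
    f_lo = dlng_dzeta(lo, n)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = dlng_dzeta(mid, n)
        if (f_mid > 0.0) == (f_lo > 0.0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


for n in (10, 100, 1000, 10**6):
    exact = most_likely_extreme(n)
    approx = math.sqrt(2.0 * math.log(n))
    print(f"n = {n:>7}: mode {exact:.4f}, sqrt(2 ln n) {approx:.4f}")
```

Already at n = 100 the estimate √(2 ln n) is within about 1.5% of the numerically exact mode, and the agreement improves as n grows.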

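Adequate heat sink: Consider a heat sink whose steady-state operation is determined by the Arrhenius equation (B-2) [28] (Appendix B), so that its mean time to failure is $\tau = \tau_0\exp\left(\frac{U_0}{kT}\right)$, where τ0 is the time constant. The probability of non-failure can then be found using the exponential law of reliability as

$$P = \exp\left(-\frac{t}{\tau}\right) = \exp\left[-\frac{t}{\tau_0}\exp\left(-\frac{U_0}{kT}\right)\right].$$

Solving this equation for the absolute temperature T, we have:

$$T = \frac{U_0/k}{\ln\left(-\dfrac{t}{\tau_0\ln P}\right)}.$$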
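A short Python sketch of this calculation, assuming the exponential law with an Arrhenius-type mean time to failure, P = exp[-(t/τ0) exp(-U0/(kT))]. The inputs (U0/k = 9000 K, τ0 = 10^-5 h, t = 10^4 h, P = 0.9999) are hypothetical placeholders chosen only to show the mechanics; they are not the surface-charge data of the example that follows. Since P decreases with T, the computed T is the highest absolute temperature at which the required P is still met.

```python
import math


def arrhenius_non_failure(t, tau0, u0_over_k, temp):
    """P = exp[-(t/tau0) exp(-U0/(kT))]: exponential law with an Arrhenius mean time to failure."""
    return math.exp(-(t / tau0) * math.exp(-u0_over_k / temp))


def allowable_temperature(p, t, tau0, u0_over_k):
    """Solve the expression above for T, given the required probability of non-failure p."""
    arg = t / (-tau0 * math.log(p))
    if arg <= 1.0:
        # exp(-t/tau0) >= p: the requirement is met even as T grows without bound
        raise ValueError("requirement is satisfied at any temperature")
    return u0_over_k / math.log(arg)


# Hypothetical inputs (U0/k in K, tau0 and t in hours)
t_max = allowable_temperature(p=0.9999, t=1.0e4, tau0=1.0e-5, u0_over_k=9000.0)
print(f"T <= {t_max:.1f} K ({t_max - 273.15:.1f} deg C)")
```

Substituting the computed T back into the expression for P reproduces the required probability, which is a convenient check on the inversion.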

Addressing, e.g., a failure caused by the surface charge accumulation, for which the ratio of the activation energy to the Boltzmann's constant is

Kinetic Multi-Parametric BAZ Equation as the "Heart" of the PDfR Concept

"Everyone knows that we live in the era of engineering, however, he rarely realizes that literally all our engineering is based on mathematics and physics"

-Bartel Leendert van der Waerden, Dutch mathematician

Electronic package subjected to the combined action of two stressors

The rationale behind the BAZ equation is described in Appendix B. Let us consider, for the sake of simplicity, the action of just two stressors [49,50]: elevated humidity H and elevated voltage V. If the level I* of the leakage current is accepted as the suitable criterion of material/structural failure, then the equation (B-2) can be written as

$$P = \exp\left[-\gamma_I I_* t\exp\left(-\frac{U_0 - \gamma_H H - \gamma_V V}{kT}\right)\right].$$

This equation contains four unknowns: The stress-free activation energy U0, the leakage current sensitivity factor γI, the relative humidity sensitivity factor γH and the elevated voltage sensitivity factor γV. These unknowns can be determined experimentally, by conducting a three-step FOAT.

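At the first step one should conduct the test at two temperatures, T1 and T2, while keeping the levels of the relative humidity H and the elevated voltage V unchanged. Assuming a certain level I* of the monitored/measured leakage current as the physically meaningful criterion of failure, recording during the FOAT the percentages P1 and P2 of non-failed samples, and using the above equation for the probability of non-failure, we obtain two equations for the probabilities of non-failure:

$$P_1 = \exp\left[-\gamma_I I_* t_1\exp\left(-\frac{U_0 - \gamma_H H - \gamma_V V}{kT_1}\right)\right], \qquad P_2 = \exp\left[-\gamma_I I_* t_2\exp\left(-\frac{U_0 - \gamma_H H - \gamma_V V}{kT_2}\right)\right],$$

where t1 and t2 are the testing times and T1 and T2 are the temperatures at which the failures were observed. Since the numerators $U_0 - \gamma_H H - \gamma_V V$ in these equations are the same, the following transcendental equation must be fulfilled:

$$\frac{\ln\left(-\dfrac{\ln P_1}{\gamma_I I_* t_1}\right)}{\ln\left(-\dfrac{\ln P_2}{\gamma_I I_* t_2}\right)} = \frac{T_2}{T_1}.$$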

This equation enables determining the leakage current sensitivity factor γI. At the second step, testing at two humidity levels, H1 and H2, should be conducted for the same temperature and voltage. This enables one to determine the relative humidity sensitivity factor γH. Similarly, the voltage sensitivity factor γV can be determined when testing is conducted at the third step at two voltage levels V1 and V2. The stress-free activation energy U0 can then be evaluated from the above expression for the probability P of non-failure for any consistent combination of the relative humidity, voltage, temperature and time as

$$U_0 = \gamma_H H + \gamma_V V - kT\ln\left(-\frac{\ln P}{\gamma_I I_* t}\right).$$

If, e.g., after t1 = 35 h of accelerated testing at the temperature T1 = 60 ℃ = 333 K, voltage V = 600 V and relative humidity H = 0.85, 10% of the specimens reached the critical level I* = 3.5 μA of the leakage current and, hence, failed, then the corresponding probability of non-failure is P1 = 0.9; and if after t2 = 70 h of testing at the temperature T2 = 85 ℃ = 358 K at the same relative humidity and voltage levels 20% of the tested samples failed, so that the probability of non-failure is P2 = 0.8, then the factor γI can be found from the above transcendental equation with these data.

Its solution is $\gamma_I = 4926\ \mathrm{h^{-1}\,(\mu A)^{-1}}$, so that $\gamma_I I_* = 17{,}241\ \mathrm{h^{-1}}$. Tests at the second step are conducted at two relative humidity levels H1 and H2 while keeping the temperature and the voltage unchanged. Then the factor γH can be found as:

$$\gamma_H = \frac{kT}{H_2 - H_1}\ln\left(\frac{t_1\ln P_2}{t_2\ln P_1}\right).$$

If, e.g., 5% of the tested specimens failed after t1 = 40 h of testing at the relative humidity H1 = 0.5, the voltage V = 600 V and the temperature T = 60 ℃ = 333 K (P1 = 0.95), and 10% of the specimens failed (P2 = 0.9) after t2 = 55 h of testing at this temperature, but at the relative humidity H2 = 0.85, then the above expression yields γH = 0.03292 eV. At the third step, when testing at two voltage levels V1 = 600 V and V2 = 1000 V is carried out for the same temperature-humidity bias at T = 85 ℃ = 358 K and H = 0.85, and 10% of the specimens failed after t1 = 40 h (P1 = 0.9), and 20% of the specimens failed after t2 = 80 h of testing (P2 = 0.8), then the factor γV for the applied voltage and the predicted stress-free activation energy U0 are as follows:

$$\gamma_V = \frac{kT}{V_2 - V_1}\ln\left(\frac{t_1\ln P_2}{t_2\ln P_1}\right)$$

and

$$U_0 = \gamma_H H + \gamma_V V - kT\ln\left(-\frac{\ln P}{\gamma_I I_* t}\right).$$

Not surprisingly, the third term in this equation plays the dominant role. It is noteworthy, however, that external loading may also have an effect on the "stress-free" activation energy. The author intends to investigate such a possibility in future work.
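The second- and third-step evaluations, and the resulting U0, can be reproduced with a few lines of Python. The sketch assumes γ = kT/(s2 - s1) ln[(t1 ln P2)/(t2 ln P1)] for two levels s1, s2 of one stressor and U0 = γH H + γV V - kT ln[-ln P/(γI I* t)], takes k = 8.617333 × 10^-5 eV/K and the value γI I* = 17,241 h^-1 reported above, and evaluates U0 at the first third-step test point (T = 358 K, H = 0.85, V = 600 V, t = 40 h, P = 0.9); that choice of test point is arbitrary, since any consistent combination may be used.

```python
import math

K_B = 8.617333e-5  # Boltzmann's constant, eV/K


def sensitivity_factor(p1, t1, p2, t2, s1, s2, temp_k):
    """gamma = kT/(s2 - s1) * ln[(t1 ln P2)/(t2 ln P1)] for two levels s1, s2 of one stressor."""
    return K_B * temp_k / (s2 - s1) * math.log((t1 * math.log(p2)) / (t2 * math.log(p1)))


def stress_free_activation_energy(p, t, temp_k, humidity, voltage, gi_istar, g_h, g_v):
    """U0 = gamma_H*H + gamma_V*V - kT ln[-ln P / (gamma_I I* t)]."""
    return g_h * humidity + g_v * voltage - K_B * temp_k * math.log(-math.log(p) / (gi_istar * t))


# Second step: H1 = 0.5 and H2 = 0.85 at T = 333 K, V = 600 V
gamma_h = sensitivity_factor(0.95, 40.0, 0.90, 55.0, 0.50, 0.85, 333.0)
# Third step: V1 = 600 V and V2 = 1000 V at T = 358 K, H = 0.85
gamma_v = sensitivity_factor(0.90, 40.0, 0.80, 80.0, 600.0, 1000.0, 358.0)
u0 = stress_free_activation_energy(0.90, 40.0, 358.0, 0.85, 600.0, 17241.0, gamma_h, gamma_v)
print(f"gamma_H = {gamma_h:.5f} eV, gamma_V = {gamma_v:.3e} eV/V, U0 = {u0:.3f} eV")
```

The computed γH agrees with the 0.03292 eV quoted above to within rounding, γV comes out at about 4.4 × 10^-6 eV/V, and U0 is roughly 0.5 eV, with the logarithmic (third) term contributing about 0.48 eV of it.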

The activation energy in the above numerical example (with rather tentative, but still realistic, input data) is about U0 = 0.5 eV. This result is consistent with existing reference information (Bell Labs data), which indicates that for failure mechanisms typical of semiconductor devices the stress-free activation energy ranges from 0.3 eV to 0.6 eV; for metallization defects and electromigration in Al it is about 0.5 eV, for charge loss it is on the order of 0.6 eV, and for Si junction defects it is 0.8 eV. Other activation energy values used in E&P reliability engineering assessments are of the same order of magnitude (see also http://nomtbf.com/2012/08/where-does-0-7ev-come-from). With the above information, the following expression for the probability of non-failure can be obtained:

If, e.g., t = 10 h, H = 0.20, V = 220 V, and the operation temperature is T = 70 ℃ = 343 K, then the probability of non-failure at these conditions is

Clearly, the TTF is not an independent characteristic of the lifetime of a product: it depends on the predicted or specified probability of non-failure. If this probability is high, the lifetime of the product is short; conversely, if the probability of non-failure is low, the corresponding lifetime is long.
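The inverse relation between the specified probability of non-failure and the TTF can be illustrated with a short sketch. It assumes, as for the BAZ-type expressions in this review, that at fixed conditions P(t) = exp(-t/τ) with τ = exp(U/kT)/g, where g lumps the sensitivity factor and the failure criterion (here γI I*) and U is the effective energy. τ is then the moment at which the entropy -P ln P is largest (P = 1/e), which is how the MTTF is treated in this review. Names and parameter values are illustrative.

```python
import math

K_B = 8.617333262e-5  # Boltzmann constant, eV/K


def mttf(g, u_eff, temp):
    """tau = exp(U/(k T)) / g, so that P(t) = exp(-t / tau).

    g: product of the sensitivity factor and the failure criterion (1/h);
    u_eff: effective (stress-reduced) activation energy, eV; temp: K.
    """
    return math.exp(u_eff / (K_B * temp)) / g


def prob_non_failure(t, tau):
    return math.exp(-t / tau)


def entropy(t, tau):
    """H = -P ln P = (t/tau) exp(-t/tau); largest at t = tau, where P = 1/e."""
    p = prob_non_failure(t, tau)
    return -p * math.log(p)


def ttf(p_required, tau):
    """Time at which P drops to the specified level: TTF = tau * (-ln P)."""
    return tau * -math.log(p_required)
```

For instance, ttf(0.99, tau) is about 0.01τ, while ttf(0.5, tau) is about 0.69τ: demanding a higher probability of non-failure shortens the admissible lifetime.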

Predicted lifetime of SJIs: Application of Hall's concept

Using the BAZ model (see Appendix B), the probability of non-failure of the SJI experiencing inelastic strains during temperature cycling [48-53] can be sought as

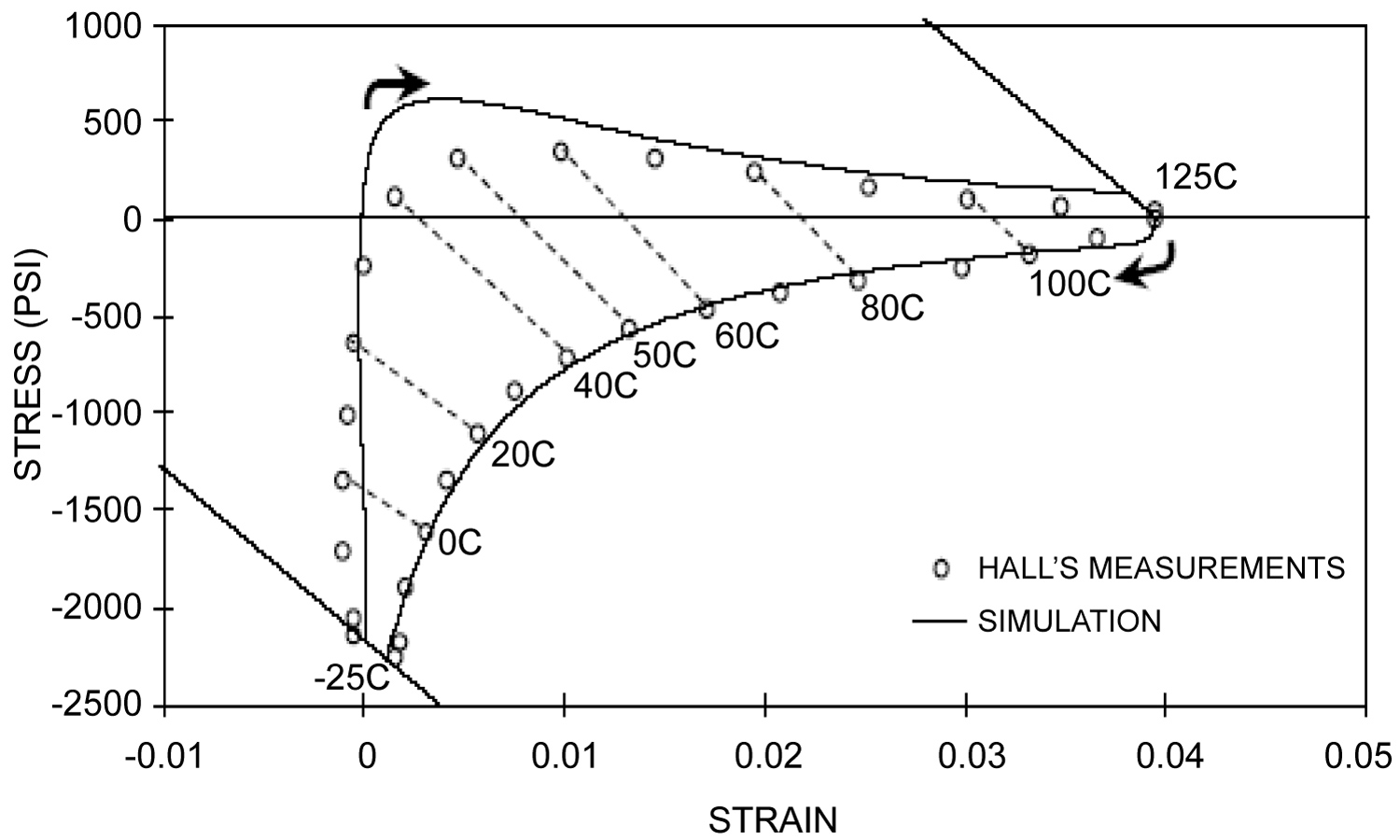

Here U0 is the activation energy, which characterizes the propensity of the solder material to fracture; W is the damage caused in the solder material by a single temperature cycle, measured, in accordance with Hall's concept [50-53], by the hysteresis loop area of a single temperature cycle for the strain of interest; T is the absolute temperature (say, the mean temperature of the cycle); n is the number of cycles; k is Boltzmann's constant; t is time; R (Ω) is the measured (monitored) electrical resistance at the joint location; and γ is the sensitivity factor for the measured electrical resistance.

The above equation for the probability of non-failure makes physical sense. Indeed, this probability is "one" at the initial moment of time, when the electrical resistance of the solder joint structure is next-to-zero. This probability decreases with time because of material aging and structural degradation, and not necessarily only because of temperature cycling leading to crack initiation and propagation. The probability of non-failure is lower for higher electrical resistance (a resistance as high as, say, 450 Ω can be viewed as an indication of an irreversible mechanical failure of the joint). Materials with a higher activation energy U0 are characterized by a higher fracture toughness and have a higher probability of non-failure. An increase in the number n of cycles leads to a lower effective energy U = U0 - nW, and so does an increase in the energy W of a single cycle (Figure 1).
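The qualitative properties listed above can be checked numerically. This is a minimal sketch, assuming that the expression above has the form P = exp[-γRt exp(-(U0 - nW)/kT)], which is consistent with the variable list and with the effective energy U = U0 - nW; the function name, units and parameter values are illustrative.

```python
import math

K_B = 8.617333262e-5  # Boltzmann constant, eV/K


def p_sji(t, n_cycles, resistance, u0, w_cycle, temp, gamma):
    """Assumed BAZ form for a solder joint under temperature cycling:
    P = exp[-gamma R t exp(-(U0 - n W)/(k T))].

    t: time (h); n_cycles: number of applied cycles; resistance: monitored
    R (ohm); u0: activation energy (eV); w_cycle: hysteresis-loop energy W
    of one cycle (eV); temp: absolute (e.g., mean cycle) temperature (K);
    gamma: sensitivity factor for the resistance, 1/(ohm*h).
    """
    u_eff = u0 - n_cycles * w_cycle  # accumulated damage lowers the barrier
    return math.exp(-gamma * resistance * t * math.exp(-u_eff / (K_B * temp)))
```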

It can be shown (see Appendix B) that the entropy of the above probability distribution reaches its maximum at the MTTF, expressed as:

Mechanical failure caused by temperature cycling takes place when the number n of cycles is

Here

and the predicted MTTF is

According to Hall's concept [51-54], the energy of a single cycle should be evaluated by running a special test in which appropriate strain gages are used. Suppose, e.g., that in these tests the area of the hysteresis loop of a single cycle was W = 2.5 × 10⁻⁴ eV and that failure occurred after nf = 2000 cycles. Then the stress-free activation energy of the solder material is U0 = nfW = 2000 × 2.5 × 10⁻⁴ = 0.5 eV. To assess the number of cycles to failure in actual operating conditions, one could assume that the temperature range in these conditions is, say, half the accelerated test range, and that the area W of the hysteresis loop is proportional to the temperature range. Then the number of cycles to failure is

If the duration of one cycle is one day, then the predicted TTF is tf = 2000 days = 5.48 years.
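The arithmetic of this Hall's-concept estimate can be written out as a few helpers. The sketch assumes, as in the text, that failure occurs when the effective energy U0 - nW drops to zero, so that U0 = nfW, and that W scales linearly with the temperature range; the helper names are hypothetical.

```python
def activation_energy_from_test(n_f_test, w_test):
    """U0 = n_f * W: the effective energy U0 - n W vanishes at failure."""
    return n_f_test * w_test


def cycles_to_failure(u0, w_cycle):
    """n_f = U0 / W for a given hysteresis-loop energy W per cycle (eV)."""
    return u0 / w_cycle


def operational_cycles(u0, w_test, range_ratio):
    """Cycles to failure in service, assuming W scales linearly with the
    temperature range: W_op = W_test * (dT_op / dT_test)."""
    return cycles_to_failure(u0, w_test * range_ratio)


def time_to_failure(n_f, cycle_duration):
    """TTF = n_f * (duration of one cycle), in the units of cycle_duration."""
    return n_f * cycle_duration


u0 = activation_energy_from_test(2000, 2.5e-4)  # accelerated-test data
```

For example, the last line gives U0 ≈ 0.5 eV, as quoted above.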

Accelerated testing based on temperature cycling should be replaced

It is well known that it is the combination of low temperatures and repetitive dynamic loading that dramatically accelerates the propagation of fatigue cracks, whether elastic or inelastic. A modification of the BAZ model was suggested [48,49] for evaluating the lifetime of SJIs experiencing inelastic strains. The experimental basis of the approach is FOAT. The test specimens were subjected to the combined action of low temperatures (not elevated temperatures, as in the classical Arrhenius model) and random vibrations with a given input energy spectrum of the "white noise" type. The methodology suggested and employed in [48,49] is viewed as a possible, effective and attractive alternative to temperature cycling, which is a costly, time- and labor-consuming and often even misleading accelerated testing approach. This is because the temperature range in accelerated temperature cycling has to be substantially wider than what the material will most likely encounter in actual use conditions, while the properties of E&P materials are, as is known, temperature sensitive.

As long as inelastic deformations take place, it is assumed that it is these deformations (which typically occur at the peripheral portions of the soldered assembly, where the interfacial stresses are the highest) that determine the fatigue lifetime of the solder material, and therefore the state of stress in the elastic mid-portion of the assembly does not have to be accounted for. The roles of the size and stiffness of this mid-portion have to be considered, however, when determining whether inelastic zones exist at all at the peripheral portions of the soldered assemblies and, if they do, what their size is. Although the detailed numerical example has been carried out for a ball-grid-array (BGA) design, the approach is also applicable to the column-grid-array (CGA) and quad-flat-no-lead (QFN) designs that are highly popular today and, in effect, to any packaging design. It is noteworthy in this connection that it is much easier to avoid inelastic strains in CGA and QFN structures than in the actually tested BGA design.

Random vibrations were considered in the developed methodology as white noise with a given ratio of the squared acceleration amplitude to the vibration frequency. Testing was carried out for two PCBs with surface-mounted packages, at the same level (with a mean value of 50 g) of three-dimensional random vibrations. One board was subjected to the low temperature of -20 ℃ and the other to -100 ℃. Preliminary calculations indicated that the solder joints at -20 ℃ would still perform within the elastic range, while the solder joints at -100 ℃ would experience inelastic strains. No failures were detected in the joints of the board tested at -20 ℃, while the joints of the board tested at -100 ℃ failed after several hours of testing.

Predicted "static fatigue" lifetime of an optical silica fiber

The BAZ equation can be effectively employed as an attractive replacement for the purely empirical power-law relationship widely used today for assessing the "static fatigue" (delayed fracture) lifetime of optical silica fibers [41]. The literature dedicated to the delayed fracture of ceramic and silica materials, mostly experimental, is enormous. In the analysis below, the combined action of tensile loading and an elevated temperature is considered.
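The two-step evaluation used in this section can be sketched as follows. This is a minimal sketch, assuming the BAZ form P = exp[-γt t exp(-(U0 - γσσ)/kT)] for a fiber under combined tensile stress σ and temperature T: step 1 (one stress, two temperatures) yields γt, step 2 (one temperature, two stresses) yields γσ, and any test point then gives U0/kT. Function names are hypothetical; units follow the example below (h, kg/mm², K, eV).

```python
import math

K_B = 8.617333262e-5  # Boltzmann constant, eV/K


def _n(p):
    """n = -ln P for a measured probability of non-failure."""
    return -math.log(p)


def gamma_t_from_temperatures(t1, p1, temp1, t2, p2, temp2):
    """Step 1: same stress, two temperatures -> time sensitivity factor (1/h)."""
    a = math.log(_n(p1) / t1)
    b = math.log(_n(p2) / t2)
    return math.exp((temp2 * b - temp1 * a) / (temp2 - temp1))


def gamma_sigma_from_stresses(t1, p1, s1, t2, p2, s2, temp):
    """Step 2: same temperature, two stresses -> eV per (kg/mm^2); gamma_t cancels."""
    return K_B * temp * math.log(_n(p2) * t1 / (_n(p1) * t2)) / (s2 - s1)


def u0_over_kt(gamma_t, gamma_s, sigma, t, p, temp):
    """U0/(kT) = gamma_sigma*sigma/(kT) - ln(n / (gamma_t t))."""
    return gamma_s * sigma / (K_B * temp) - math.log(_n(p) / (gamma_t * t))


def fiber_ttf(p_required, gamma_t, gamma_s, u0, sigma, temp):
    """TTF = (-ln P / gamma_t) * exp((U0 - gamma_sigma*sigma)/(kT))."""
    return -math.log(p_required) / gamma_t * math.exp((u0 - gamma_s * sigma) / (K_B * temp))
```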

Suppose, e.g., that the following input information was obtained at the first FOAT step for a polyimide-coated fiber intended for elevated-temperature operation: 1) after t1 = 10 h of testing at the temperature T1 = 300 ℃ = 573 K under the stress σ = 420 kg/mm², 10% of the tested specimens failed, so that the probability of non-failure is P1 = 0.9; 2) after t2 = 8.0 h of testing at the temperature T2 = 350 ℃ = 623 K under the same stress, 25% of the tested samples failed, so that the probability of non-failure is P2 = 0.75. Forming the equation for the probability of non-failure in accordance with the BAZ equation and introducing the notations

can be obtained for the time sensitivity factor γt. With the above input data we obtain:

With the temperature ratio

At the second step, testing was conducted at the stresses σ1 = 420 kg/mm² and σ2 = 320 kg/mm² at T = 350 ℃ = 623 K, and it was confirmed that 10% of the samples tested under the stress σ1 = 420 kg/mm² failed after t1 = 10.0 h of testing, so that P1 = 0.9. The percentage of failed samples tested at the stress level σ2 = 320 kg/mm² was 5% after t2 = 24 h of testing, so that P2 = 0.95. Then the ratio

After the sensitivity factors γt and γσ for the time and for the stress are determined, the expression for the ratio of the stress-free activation energy to the thermal energy can be found from the BAZ formula for the probability of non-failure as

If, e.g., the stress σ = σ2 = 320 kg/mm² is applied for t = 24 h and the acceptable probability of non-failure is, say, P = 0.99, then

This result indicates that, as expected, the activation energy U0 is determined primarily by the properties of the silica material (second term), but is also affected, to a lesser extent, by the level of the applied stress. The fatigue lifetime, i.e., the TTF, can be determined for the acceptable (specified) probability of non-failure as

This formula indicates that when the probability of non-failure is low, the expected lifetime (RUL) could be significant. If, e.g., the applied temperature and stress are T = 325 ℃ = 598 K and σ = 5.0 kg/mm², and the acceptable (specified) probability of non-failure is P = 0.8, then the predicted TTF is

If, however, the acceptable probability of non-failure is considerably higher, say, P = 0.99, then the fiber's lifetime is much shorter, only

When

BIT of E&P Products: To BIT or Not to BIT, That's the Question

"We see that the theory of probability is at heart only common sense reduced to calculations: it makes us appreciate with exactitude what reasonable minds feel by a sort of instincts, often without being able to account for it."

Pierre-Simon, Marquis de Laplace, French mathematician and astronomer

BIT [54-58] is an accepted practice in E&P manufacturing for detecting and eliminating early failures ("freaks") in newly fabricated electronic products prior to shipping the "healthy" ones that survived BIT to the customer(s). BIT can be based on temperature cycling, elevated temperatures, voltage, current, humidity, random vibrations, etc., and/or, since the principle of superposition does not work in reliability engineering, on an appropriate combination of these stressors. BIT is a costly undertaking: early failures are avoided and the infant mortality portion (IMP) of the bathtub curve (BTC) is supposedly eliminated at the expense of a reduced yield. What is even worse, the elevated BIT stressors might not only eliminate "freaks" but could also cause permanent damage to the main population of "healthy" products. This kind of testing should therefore be well understood, thoroughly planned and carefully executed. It is unclear, however, whether BIT is always needed ("to BIT or not to BIT: that's the question"), or to what extent the current practices are adequate and effective.

HALT, which is currently employed as a BIT vehicle, is, as has been indicated above, a "black box" that tries "to kill many birds with one stone." HALT is therefore unable to provide trustworthy information on what this testing does. It even remains unclear what is actually happening during, and as a result of, HALT-based BIT, and how to effectively eliminate "freaks" while minimizing the testing time, reducing the BIT cost and avoiding damage to sound devices. When HALT is relied upon to do the BIT job, it is not even easy to determine whether the failure rate decreases with time. There is, therefore, an obvious incentive to develop ways in which the BIT process could be better understood, trustworthily quantified, effectively monitored and possibly even optimized.

Accordingly, in this section some important BIT aspects are addressed for a packaged E&P product comprised of numerous mass-produced components. We intend to shed some quantitative light on the BIT process and, since nothing is perfect (as has been indicated, the difference between a highly reliable process or product and an insufficiently reliable one is "merely" in the levels of their never-zero probabilities of failure), such a quantification should be done on a probabilistic basis. In particular, we intend to come up with a suitable criterion to answer the fundamental "to BIT or not to BIT" question and, if BIT is decided upon, to find a way to quantify its outcome using our physically meaningful and flexible BAZ model.

In the analysis below, the role and significance of the following important factors that affect the testing time and the stress level are addressed: the random statistical failure rate (SFR) of the mass-produced components that the product of interest is comprised of; the way to assess, from highly focused and highly cost-effective FOAT, the activation energy of the "freak" population of the manufacturing technology of interest; the role of the applied stressor(s); and, most importantly, the probabilities of the "freak" failures as functions of the duration and level of the BIT loading, together with a way to assess these probabilities using the BAZ equation and, as will be shown, the variance of the random SFR of the constituent components. It is shown that the time derivative of the BTC failure rate at the initial moment of time (at the beginning of the IMP of the BTC) can be used as a suitable criterion of whether or not BIT of a packaged IC device should be conducted. It is also shown that this criterion is, in effect, the variance of the random SFR of the mass-produced components that the manufacturer of the given product received from numerous vendors, whose commitments to the reliability of their components are unknown; the random SFR of these components might therefore vary significantly, from zero to infinity. Based on the developed general formula for the non-random SFR of a product comprised of such components, the solution for the case of a normally distributed random SFR of the constituent components has been obtained. This information enables answering the "to BIT or not to BIT" question in electronics manufacturing. If BIT is decided upon, the BAZ model can be employed to assess its required duration and level.
All these factors, namely the random SFR of the mass-produced components, the FOAT-based activation energy of the "freak" population, the applied stressor(s) and, most importantly, the dependence of the "freak" failure probabilities on the BIT duration, should be considered when there is an intent to quantify and, eventually, to optimize the BIT procedure. The fundamental "to BIT or not to BIT" question is addressed using two mutually complementary and independent analyses: 1) the analysis of the configuration of the IMP of a BTC obtained for a more or less well-established manufacturing technology of interest; and 2) the analysis of the role of the random SFR of the mass-produced components that the product of interest is comprised of.
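The statement that the initial slope of the failure rate is governed by the variance of the random SFR can be checked numerically. The sketch relies on a general property of a population of components with random, constant failure rates λ: the population failure rate is the survivor-weighted mean λ(t) = E[λe^(-λt)]/E[e^(-λt)], whose time derivative equals minus the survivor-weighted variance, so that λ'(0) = -D. It uses a discretized normal SFR distribution truncated at λ = 0 (an assumption made here to keep the rates non-negative) and does not reproduce the closed-form solution for the normal distribution mentioned in the text; the function names are hypothetical.

```python
import math


def normal_weights(mean, sd, n=2001, sigmas=8.0):
    """Discretized normal pdf of the random SFR, truncated to rates >= 0.
    Returns (rates, normalized weights)."""
    hi = mean + sigmas * sd
    lo = max(0.0, mean - sigmas * sd)
    step = (hi - lo) / (n - 1)
    rates = [lo + i * step for i in range(n)]
    w = [math.exp(-0.5 * ((r - mean) / sd) ** 2) for r in rates]
    total = sum(w)
    return rates, [x / total for x in w]


def population_failure_rate(t, rates, weights):
    """Non-random SFR of the population: E[L exp(-L t)] / E[exp(-L t)]."""
    num = sum(w * r * math.exp(-r * t) for r, w in zip(rates, weights))
    den = sum(w * math.exp(-r * t) for r, w in zip(rates, weights))
    return num / den


def variance(rates, weights):
    m = sum(w * r for r, w in zip(rates, weights))
    return sum(w * (r - m) ** 2 for r, w in zip(rates, weights))


def initial_slope(rates, weights, dt=1e-5):
    """Central-difference estimate of the failure-rate derivative at t = 0."""
    return (population_failure_rate(dt, rates, weights)
            - population_failure_rate(-dt, rates, weights)) / (2 * dt)
```

A near-zero variance gives a near-zero initial slope, i.e., a flat IMP, which corresponds to the "not to BIT" answer.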

The desirable steady-state portion of the BTC commences at the BIT's end as a result of the interaction of two major irreversible time-dependent processes: the "favorable" statistical process that results in a decreasing failure rate with time, and the "unfavorable" physics-of-failure-related process resulting in an increasing failure rate. The first process dominates at the IMP of the BTC and is considered here. The IMP of a typical BTC, the "reliability passport" of a mass-produced electronic product using a more or less well-established manufacturing technology, can be approximated as

Here λ0 is the BTC's steady-state ordinate, λ1 is its initial (highest) value at the beginning of the IMP, t1 is the IMP duration, and the exponent n1 is

If this derivative is zero or next-to-zero, the IMP of the BTC is parallel to the time axis (so that there is, in effect, no IMP at all), no BIT is needed to eliminate this portion, and "not to burn-in" is the answer to the basic question: the initial value λ1 of the BTC does not differ from its steady-state value λ0. What is less obvious is that the same result takes place for

This seems to be the case in which BIT is most needed. It has been found that the expression for the non-random time-dependent SFR

can be obtained from the probability density distribution function f(λ) of the random SFR λ of the components obtained from the vendors. When this rate is normally distributed,

i.e., the above formula yields:

The "time function" φ[τ(t)] depends on the dimensionless "physical" (effective) time

It can be shown that the derivative φ'(τ) at the initial moment of time (t = 0) is equal to -1.0, so that

As to the use of the kinetic BAZ model in the problem in question, this model suggests a simple, easy-to-use, highly flexible and physically meaningful way to evaluate the probability of failure of a material or a device after a given time in testing or operation at a given temperature and under a given stress or stressors. Using this model, the probability of non-failure during the BIT can be sought as

Here D is the variance of the random SFR of the mass-produced components, I is the measured/monitored signal (such as, e.g., the leakage current, whose agreed-upon high value I* is considered an indication of failure, or an elevated electrical resistance, particularly suitable for solder joint interconnections), t is time, σ is the "external" stressor, U0 is the activation energy (unlike in the original BAZ model, this energy may or may not be affected by the level of the external stressor), T is the absolute temperature, γσ is the stress sensitivity factor for the applied stress and γt is the time/variance sensitivity factor. The above distribution makes physical sense. Indeed, the probability P of non-failure decreases with an increase in the variance D, in the time t, in the level I* of the leakage current at failure and in the temperature T, and increases with an increase in the activation energy U0. As has been shown, the entropy of the probability of non-failure reaches its maximum at the moment of time

accepted in the BAZ model as the MTTF. There are three unknowns in this expression: the product ρ = γtD of the time/variance sensitivity factor γt and the variance D, the stress sensitivity factor γσ, and the activation energy U0. As has been demonstrated in previous examples, these unknowns can be determined from a two-step FOAT. At the first step, testing should be carried out at two temperatures, T1 and T2, but for the same effective activation energy U = U0 - γσσ. Then the relationships

for the measured probabilities of non-failure can be obtained. Here t1,2 are the corresponding times at which the failures were detected, and I* is the agreed-upon leakage current at failure. Since the numerator U = U0 - γσσ in these relationships is kept the same in the conducted tests, the product ρ = γtD can be found as

where the notations

If, however, the external stress is not a thermal stress, then the temperatures in the second-step tests should preferably be kept the same. Then the ρ value does not affect the factor γσ, which can be found as

where T is the testing temperature. Finally, after the product ρ and the factor γσ are determined, the activation energy U0 can be found as

The TTF can then be determined as TTF = MTTF × (-ln P), where the MTTF has been defined above.
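The two-step FOAT procedure for the BIT model can be sketched as follows. This is a minimal sketch, assuming that the distribution above has the form P = exp[-ρI*t exp(-(U0 - γσσ)/kT)] with ρ = γtD, and that the MTTF is the moment at which P = 1/e (where the entropy -P ln P peaks). As noted in the text, FOAT identifies only the product ρ, not γt and D separately. Function names are hypothetical.

```python
import math

K_B = 8.617333262e-5  # Boltzmann constant, eV/K


def _n(p):
    """n = -ln P for a recorded probability of non-failure."""
    return -math.log(p)


def rho_from_temperatures(t1, p1, temp1, t2, p2, temp2, i_star):
    """Step 1: same stress, two temperatures -> rho = gamma_t * D."""
    a = math.log(_n(p1) / t1)
    b = math.log(_n(p2) / t2)
    return math.exp((temp2 * b - temp1 * a) / (temp2 - temp1)) / i_star


def gamma_sigma(t1, p1, s1, t2, p2, s2, temp):
    """Step 2: same temperature, two stress levels; rho cancels out."""
    return K_B * temp * math.log(_n(p2) * t1 / (_n(p1) * t2)) / (s2 - s1)


def u0_stress_free(rho, i_star, g_s, sigma, t, p, temp):
    """U0 = gamma_sigma*sigma - k T ln(n / (rho I* t))."""
    return g_s * sigma - K_B * temp * math.log(_n(p) / (rho * i_star * t))


def mttf(rho, i_star, u0, g_s, sigma, temp):
    """Moment at which P = 1/e, i.e., the maximum of -P ln P."""
    return math.exp((u0 - g_s * sigma) / (K_B * temp)) / (rho * i_star)


def ttf(p_required, mttf_value):
    """TTF = MTTF * (-ln P)."""
    return mttf_value * -math.log(p_required)
```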

Suppose, e.g., that the following data were obtained at the first step of FOAT: 1) after t1 = 14 h of testing at the temperature T1 = 60 ℃ = 333 K, 90% of the tested devices reached the critical leakage current I* = 3.5 μA and, hence, failed, so that the recorded probability of non-failure is P1 = 0.1; the applied stress is the elevated voltage σ1 = 380 V; 2) after t2 = 28 h of testing at the temperature T2 = 85 ℃ = 358 K, 95% of the samples failed, so that the recorded probability of non-failure is P2 = 0.05; the applied stress is still the elevated voltage σ1 = 380 V. Then the parameters

and

With the temperature ratio

At the second step of FOAT, one can use, without conducting additional testing, the information from the first step (the duration and outcome of the test at T1 = 333 K). Suppose that the second step of testing has shown that after t2 = 36 h of testing at the same temperature T = 60 ℃ = 333 K, 98% of the tested samples failed, so that the recorded probability of non-failure is P2 = 0.02. If the stress σ2 is the elevated voltage σ2 = 220 V, then the parameter n2 becomes

and the sensitivity factor γσ for the applied stress is

The zero-stress activation energies calculated for the above parameters n1 and n2 and the stresses σ1 and σ2 are

As a check on the calculations, the zero-stress activation energy can also be found as

Not surprisingly, these values are considerably lower than the activation energies of "healthy" products: many manufacturers use, as a "rule of thumb," 0.7 eV as a tentative value of the activation energy of healthy electronic products. Accordingly, when the BIT process is monitored and the activation energy U0 is continuously calculated from the number of failed devices, the BIT process should be terminated when the calculations, based on the observed and recorded FOAT data, indicate that the stress-free activation energy U0 starts to increase. The MTTF can be computed as

The TTF, however, depends on the probability of non-failure. Its values, calculated as TTF = MTTF × (-ln P), are shown in Table 2.

Clearly, the probabilities of non-failure for successful BITs should be low enough. It is also clear that the BIT process should be terminated when the calculated probabilities of non-failure and the activation energy U0 start to increase rapidly. Although our BIT analyses do not suggest a straightforward and complete way to optimize BIT, they nonetheless shed useful light on the significance of some important factors that affect the need for BIT and, if BIT is decided upon, its required time and stress level for a packaged product comprised of mass-produced components.
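The termination rule can be sketched as a simple monitoring loop. This is a minimal sketch under stated assumptions: ρ, γσ and I* are already known from FOAT, each recorded surviving fraction is converted into an estimate of U0 using the assumed BAZ form P = exp[-ρI*t exp(-(U0 - γσσ)/kT)], and BIT is flagged for termination at the first interval where that estimate rises by more than a tolerance tol. The names and the tolerance are hypothetical, and noisy failure counts would likely call for some smoothing in practice.

```python
import math

K_B = 8.617333262e-5  # Boltzmann constant, eV/K


def u0_estimate(rho, i_star, g_s, sigma, temp, t, p):
    """Stress-free activation energy (eV) implied by the surviving fraction p
    at cumulative BIT time t, under the assumed BAZ form."""
    return g_s * sigma - K_B * temp * math.log(-math.log(p) / (rho * i_star * t))


def stop_index(u0_series, tol=0.0):
    """Index of the first interval at which the U0 estimate exceeds the
    previous one by more than tol; None if it never does."""
    for i in range(1, len(u0_series)):
        if u0_series[i] - u0_series[i - 1] > tol:
            return i
    return None


def monitor_bit(records, rho, i_star, g_s, sigma, temp, tol=0.0):
    """records: (t, P) pairs (cumulative time, surviving fraction).
    Returns (index at which BIT would be stopped, list of U0 estimates)."""
    series = [u0_estimate(rho, i_star, g_s, sigma, temp, t, p) for t, p in records]
    return stop_index(series, tol), series
```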

Adequate Trust is an Important HCF Constituent

"If a man will begin with certainties he will end with doubts; but if he will be content to begin with doubts, he shall end in certainties".

Francis Bacon, English philosopher and statesman, ‘The Advancement of Learning’

From Shakespeare's "love all, trust a few" and "don't trust the person who has broken faith once" to today's Lady Gaga's "trust is like a mirror, you can fix it if it's broken, but you can still see the crack in that mother f*cker's reflection," the importance of human-human trust has been addressed by numerous writers, politicians and psychologists in connection with the role of the human factor in making a particular engineering undertaking successful and safe [59-66]. The 19th-century South Dakota politician and clergyman Frank Craine seems to have been the first to point out the importance of adequate trust in human relationships. Here are a couple of his quotes: "You may be deceived if you trust too much, but you will live in torment unless you trust enough"; "We're never so vulnerable than when we trust someone - but paradoxically, if we cannot trust, neither can we find love or joy"; "Great companies that build an enduring brand have an emotional relationship with customers that has no barrier. And that emotional relationship is on the most important characteristic, which is trust". Hoff and Bashir [61] considered the role of trust in automation. Madhavan and Wiegmann [62] drew attention to the importance of trust in engineering and, particularly, to the similarities and differences between human-human and human-automation trust. Rosenfeld and Kraus [63] addressed human decision making and its consequences, with consideration of the role of trust. Chatzi, Wayne, Bates and Murray [64] provided a comprehensive review of trust considerations in aviation maintenance practice. The analysis in this section [65] is, in a way, an extension and a generalization of the recent publication by Kaindl and Svetinovic [66], and addresses some important aspects of the human-in-the-loop (HITL) problem for safety-critical missions and extraordinary situations, as well as in engineering technologies.
It is argued that the role and significance of trust can and should be quantified when preparing such missions. The author is convinced that otherwise the concept of adequate trust simply cannot be effectively addressed and included in an engineering technology, design methodology or human activity when there is a need to assure a successful and safe outcome of a particular engineering undertaking or an aerospace or military mission. Since nobody and nothing is perfect, and the probability of failure is never zero, such a quantification should be done on a probabilistic basis. Adequate trust is an important human quality and a critical constituent of the human capacity factor (HCF) [67-70]. When evaluating the outcome of a HITL-related mission or an off-normal situation, the role of the HCF should always be considered and even quantified vs. the level of the mental workload (MWL). While the notion of the MWL is well established in aerospace and other areas of human psychology and is reasonably well understood and investigated (see, e.g., [71-89]), the HCF was introduced, and its importance emphasized, by the author of this paper only several years ago. The rationale behind this introduction is that it is not the absolute MWL level, but the relative levels of the MWL and HCF that determine, in addition to other critical factors, the probability of human non-failure in a particular off-normal situation of interest. The majority of pilots with an ordinary HCF would most likely have failed in the "miracle-on-the-Hudson" situation, while "Sully," with his extraordinarily high anticipated HCF, did not.

HCF includes, but might not be limited to, the following human qualities that enable a professional to cope successfully, when necessary, with an elevated off-normal MWL: age, fitness, health; personality type; psychological suitability for a particular task; professional experience, qualifications and intelligence; education, both special and general; relevant capabilities and skills; level, quality and timeliness of training; performance sustainability (consistency, predictability); independent thinking and independent acting, when necessary; ability to concentrate; ability to anticipate; ability to withstand fatigue in general and, when driving a car, drowsiness (this ability might be considerably different depending on whether it is "old-fashioned" manual or automated driving (AD) [90]); self-control and ability to "act in cold blood" in hazardous and even life-threatening situations; mature (realistic) thinking; ability to operate effectively under time pressure; ability to operate effectively, when necessary, in a tireless fashion for a long period of time (tolerance to stress); ability to make well-substantiated decisions in a short period of time; team-player attitude, when necessary; ability and willingness to follow orders, when necessary; swiftness in reaction, when necessary; adequate trust; and ability to maintain the optimal level of physiological arousal. These and other qualities are certainly of different importance in different HITL situations.

HCF could be time-dependent.

It is clear that different individuals possess these qualities in different degrees. Captain Chesley Sullenberger ("Sully"), the hero of the famous miracle-on-the-Hudson event, did indeed possess an outstanding HCF. As a matter of fact, the "miracle" was not that he managed to ditch the aircraft successfully in an extraordinary situation, but that an individual like Captain Sullenberger, and not a pilot with an ordinary HCF, turned out to be at the controls in such a situation. As far as the quality of adequate trust is concerned, Captain Sullenberger certainly "avoided over-trust" in the ability of the first officer, who was flying the aircraft when it took off from LaGuardia Airport, to cope successfully with the situation when the aircraft struck a flock of Canada geese and lost engine power. Captain Sullenberger took over the controls, while the first officer began going through the emergency procedures checklist in an attempt to find information on how to restart the engines and what to do, with the help of the air traffic controllers at LaGuardia and Teterboro airports, to bring the aircraft to one of these airports and, hopefully, land it there safely. What is even more important is that Captain Sullenberger also effectively and successfully "avoided under-trust" in his own skills, abilities and extensive experience that would enable him to cope successfully with the situation: 57-year-old Captain Sully was a former fighter pilot, a safety expert, a professional development instructor and a glider pilot. That was the rare case when "team work" (such as, say, sharing his "wisdom" and intent with the flight controllers at LaGuardia and Teterboro) was not the right thing to pursue until the very moment of ditching. Captain Sully trusted that the aircraft structure would be able to withstand the slam of the water during ditching and, in addition, would allow slow enough flooding after ditching.
It turned out that the crew did not activate the "ditch switch" during the incident, but Capt. Sullenberger later noted that it probably would not have been effective anyway, since the water impact tore holes in the plane's fuselage that were much larger than the openings sealed by the switch. Captain Sully trusted the aircraft safety equipment, which was carried in excess of that mandated for the flight. He also trusted the outstanding cooperation and excellent cockpit resource management of the flight crew, who trusted their captain and exhibited outstanding team work (this is where such work was needed, useful and successful) during the landing and the rescue operation. The aircraft came down in an area where Capt. "Sully" could expect, and actually did rely upon, a fast response and effective help from the various ferry operators located near the USS Intrepid ship/museum, as well as timely and effective help from the rescue teams. The environmental conditions, and particularly the visibility, were excellent and were an important factor contributing to the survivability of the accident. All these trust-related factors played an important role in Captain Sullenberger's ability to ditch the aircraft successfully and save lives. As is known, the crew was later awarded the Master's Medal of the Guild of Air Pilots and Air Navigators for successful "emergency ditching and evacuation, with the loss of no lives… a heroic and unique aviation achievement… the most successful ditching in aviation history." National Transportation Safety Board (NTSB) Member Kitty Higgins, the principal spokesperson for the on-scene investigation, said at a press conference the day after the accident that it "has to go down [as] the most successful ditching in aviation history… These people knew what they were supposed to do and they did it and as a result, nobody lost their life".
The flight crew, and first of all Captain Sullenberger, were widely praised for their actions during the incident, notably by New York City Mayor Michael Bloomberg and New York State Governor David Paterson, who opined, "We had a Miracle on 34th Street. I believe now we have had a Miracle on the Hudson." Outgoing U.S. President George W. Bush said he was "inspired by the skill and heroism of the flight crew", and also praised the emergency responders and volunteers. Then President-elect Barack Obama said that everyone was proud of Sullenberger's "heroic and graceful job in landing the damaged aircraft", and thanked the A320's crew.

The double-exponential probability density function (DEPDF) [70] for the random HCF has been revisited in the adequate-trust problem addressed here, with the intent to show that the entropy of this distribution, when applied to the trustee, can be viewed as an appropriate quantitative characteristic of a human's propensity to make a decision influenced by under-trust or over-trust. The entropy of the DEPDF for human non-failure sheds quantitative light on why under-trust and over-trust should be avoided. A suitable modification of the DEPDF for human non-failure, whether of the performer (decision maker) or of the trustee, could be assumed in the following simple form:

$$P = \exp\left[-\gamma t \exp\left(-\frac{F^2}{G^2}\right)\right]$$

where P is the probability of non-failure, t is time, F is the HCF, G is the MWL, and γ is the sensitivity factor for time.

The expression for the probability of non-failure P makes physical sense. Indeed, the probability P of human non-failure when fulfilling a certain task decreases with an increase in time and increases with an increase in the ratio of the HCF to the mental workload (MWL). At the initial moment of time (t = 0) the probability of non-failure is P = 1, and it decreases exponentially with time, especially for low F/G ratios. For very large HCF-to-MWL ratios, the probability P of non-failure remains high even for not-very-short operation times. Depending on the particular task and application, the above expression could be applied either to the performer (the decision maker) or to the trustee. The trustee could be a human, a technology, a concept, an existing best practice, etc.
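
A minimal Python sketch of the probability of non-failure, assuming the DEPDF has the form P = exp[-γt exp(-F²/G²)]. The function name `p_nonfailure` and the sensitivity factor γ = 0.1 1/h used in the demo are hypothetical and serve only to illustrate the trends just described: P = 1 at t = 0, decay with time, and growth with the F/G ratio.

```python
import math


def p_nonfailure(f: float, g: float, t: float, gamma: float) -> float:
    """Probability of human non-failure, assuming P = exp[-gamma * t * exp(-(F/G)**2)].

    f     -- human capacity factor (HCF), F
    g     -- mental workload (MWL), G; must be positive
    t     -- operation time (same time unit as 1/gamma)
    gamma -- sensitivity factor for time (hypothetical value in the demo)
    """
    if g <= 0:
        raise ValueError("MWL G must be positive")
    return math.exp(-gamma * t * math.exp(-(f / g) ** 2))


if __name__ == "__main__":
    gamma = 0.1  # hypothetical sensitivity factor, 1/h
    for ratio in (0.5, 1.0, 2.0, 3.0):  # F/G ratios
        row = [p_nonfailure(ratio, 1.0, t, gamma) for t in (0.0, 1.0, 10.0, 100.0)]
        print(f"F/G = {ratio:3.1f}: " + "  ".join(f"{p:.4f}" for p in row))
```

Because only the ratio F/G enters the assumed form, G can be set to 1 and F read directly as the HCF-to-MWL ratio.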

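The ergonomics underlying the above distribution can be seen from its time derivative

$$\frac{dP}{dt} = -\frac{H(P)}{t}, \qquad H(P) = -P\ln P,$$

where H(P) is the entropy of the distribution.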
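
The link between the time derivative of P and the entropy H(P) = -P ln P can be checked numerically. The sketch below, again assuming P = exp[-γt exp(-F²/G²)] and using hypothetical values of F, G, t and γ, compares a central finite-difference estimate of dP/dt with -H(P)/t. It also tabulates H(P), which vanishes as P approaches 0 or 1 and reaches its maximum value 1/e at P = 1/e.

```python
import math


def p_nonfailure(f, g, t, gamma):
    # Assumed DEPDF form: P = exp[-gamma * t * exp(-(F/G)**2)]
    return math.exp(-gamma * t * math.exp(-(f / g) ** 2))


def entropy(p):
    # H(P) = -P ln P on [0, 1], with H(0) = 0 by continuity
    return 0.0 if p == 0.0 else -p * math.log(p)


def derivative_check(f, g, t, gamma, h=1e-6):
    """Return (finite-difference dP/dt, -H(P)/t) at a time t > h."""
    numeric = (p_nonfailure(f, g, t + h, gamma) - p_nonfailure(f, g, t - h, gamma)) / (2.0 * h)
    analytic = -entropy(p_nonfailure(f, g, t, gamma)) / t
    return numeric, analytic


if __name__ == "__main__":
    print(derivative_check(f=1.0, g=1.0, t=5.0, gamma=0.2))  # hypothetical inputs
    for p in (0.01, 0.1, 1.0 / math.e, 0.9, 0.99):
        print(f"P = {p:.4f}  H(P) = {entropy(p):.4f}")
```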

The role of the human factor (HF) in various, mostly aerospace, missions and situations has been addressed in numerous publications (see, e.g., [68-89]). When PPM analyses are conducted with the intent to assess the probability of non-failure, considering the role of the HCF vs. the MWL, a DEPDF-based model is a suitable one. This model is similar to the BAZ model, which also leads to a double-exponential relationship, but, unlike the BAZ model, it does not contain temperature as an important parameter affecting the TTF. As in the BAZ model, the necessary parameters of the DEPDF model can be obtained for the given HCF and MWL from appropriately designed and conducted FOAT.

Let us show how this could be done, using the role of the HF in aviation as an example. A flight simulator could be employed as an appropriate FOAT vehicle to quantify, on a probabilistic basis, the required level of the HCF with respect to the expected MWL when fulfilling a particular mission. When designing and conducting FOAT aimed at the evaluation of the sensitivity parameter γ in the distribution for the probability of non-failure, a certain MWL factor I (electro-cardiac activity, respiration, skin-based measures, blood pressure, ocular measurements, brain measures, etc.) should be monitored and measured continuously until its agreed-upon high value I*, viewed as an indication of human failure, is reached. Then the above DEPDF distribution for the probability of non-failure could be written as

When a group of more or less equally and highly qualified individuals is brought together, one can proceed from the fact that the HCF is a characteristic that remains more or less unchanged for these individuals during the relatively short time of the FOAT. The MWL, on the other hand, is a short-term characteristic that can be tailored in many ways, depending on the anticipated MWL conditions. From the above expression we have:

Where

The HCF of the individuals that underwent the accelerated testing can be determined as:

Let, e.g., the same group of individuals be tested at two different MWL levels, G1 and G2, until failure (whatever its definition and nature might be), and let the MWL ratio be

and the following values for the sensitivity factor and the required HCF-to-MWL ratio can be obtained:

The calculated required HCF-to-MWL ratios F/G for different probabilities of non-failure and for different times are shown in Table 3.
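
The procedure can be illustrated with a hedged sketch. It assumes the DEPDF form P = exp[-γt exp(-F²/G²)] (the MWL factor I is not modeled here) and, as our own simplification, that reaching the failure criterion corresponds to the same probability level P* at both MWL levels. Equating γt1 exp(-F²/G1²) = γt2 exp(-F²/G2²) gives (F/G1)²(1 - G1²/G2²) = ln(t1/t2); γ then follows from P*, and the required ratio for a target P over time t follows from F/G = sqrt(ln[γt/(-ln P)]). The FOAT inputs (G2/G1 = 2, failure times of 2.0 h and 1.0 h, P* = 0.5) and the function names are hypothetical; they are not the data behind Table 3.

```python
import math


def fit_two_level_foat(g_ratio, t1, t2, p_star):
    """Return ((F/G1)**2, gamma) from two hypothetical FOAT runs on the same group.

    g_ratio -- G2/G1 (> 1); t1, t2 -- times to failure at G1 and G2 (t2 < t1);
    p_star  -- probability level assumed to correspond to the failure criterion.
    """
    if g_ratio <= 1.0 or not 0.0 < t2 < t1 or not 0.0 < p_star < 1.0:
        raise ValueError("expected G2 > G1, 0 < t2 < t1 and 0 < P* < 1")
    # Same F at both levels: t1*exp(-x1) = t2*exp(-x1/g_ratio**2), with x1 = (F/G1)**2
    x1 = math.log(t1 / t2) * g_ratio**2 / (g_ratio**2 - 1.0)
    gamma = -math.log(p_star) / (t1 * math.exp(-x1))
    return x1, gamma


def required_ratio(p, t, gamma):
    """Smallest F/G for which P >= p over time t (0.0 if any ratio suffices)."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie strictly between 0 and 1")
    arg = gamma * t / -math.log(p)
    return math.sqrt(math.log(arg)) if arg > 1.0 else 0.0


if __name__ == "__main__":
    x1, gamma = fit_two_level_foat(g_ratio=2.0, t1=2.0, t2=1.0, p_star=0.5)
    print(f"F/G1 = {math.sqrt(x1):.3f}, gamma = {gamma:.3f} 1/h")
    for p in (0.9, 0.99, 0.999):
        ratios = [required_ratio(p, t, gamma) for t in (1.0, 10.0, 100.0)]
        print(f"P = {p}: " + "  ".join(f"{r:.3f}" for r in ratios))
```

The printed grid has the same layout as a table of required ratios (rows: target probability, columns: operation time), and the ratio grows with both, in line with the qualitative conclusion that the HCF should considerably exceed the MWL for long operation times.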

As is evident from the calculated data, the level of the HCF in this example should considerably exceed the level of the MWL, so that a sufficiently high probability of human non-failure is achieved, especially for long operation times. It is concluded that trust is an important HCF quality and should be included in the list of such qualities for a particular "human-in-the-loop" task. The HCF should be evaluated vs. the MWL when there is a need to assure a successful and safe outcome of a particular aerospace or military mission, or when considering the role of the HF in a non-vehicular engineering system. The DEPDF for the random HCF is revisited, and it is shown, in particular, that its entropy can be viewed as an appropriate quantitative characteristic of a human's propensity to an under-trust or over-trust judgment and, consequently, to erroneous decision making or a performance error.

PPM of an Emergency-Stopping Situation in AD or on a RR

"Education is man's going forward from cocksure ignorance to thoughtful uncertainty."

Kenneth G. Johnson, American high-school English teacher

Automotive engineering is entering a new frontier: the AD era [91-98]. Level 3 of driving automation (conditional automation), as defined by SAE [96], considers a vehicle controlled autonomously by the system, but only under "specific conditions". These conditions include speed control, steering, and braking, as well as monitoring the environment. When, however, such conditions are no longer met, and monitoring of the environment detects an unexpected or uncontrollable situation, the system is supposed to hand over control to the human operator. The new AD frontier requires, on the one hand, the development of advanced navigation equipment and instrumentation and, first of all, of an effective and reliable AD system itself, including numerous cameras, radars, LiDARs ("optical radars") and other electro-optic means with fast and effective processing capabilities. On the other hand, special qualifications and attitudes are required of the key HITL "component" of the system: the driver. It is he/she who is ultimately responsible for the safety of the vehicle and its passengers, and who should effectively interact with the system on a permanent basis. It is imperative that the driver of an AD vehicle receive special training before operating such a vehicle, and this requirement should be reflected in his/her driver's license.

While one has to admit that at present "we do not even know what we do not know" [91] about the challenges and pitfalls associated with the use of AD systems, we do know that the HITL role will hardly change in the foreseeable future, even when more advanced AD equipment is developed and installed. What is also clear is that the safe outcome of an off-normal AD-related situation cannot be assured if it is not quantified, and that, because of various inevitable and unpredictable intervening uncertainties, such a quantification should be done on a probabilistic basis. In effect, the difference between a highly reliable and an insufficiently reliable performance of a system or a human is "merely" the difference in the never-zero probabilities of their failure. Accordingly, PPM is employed in this analysis to predict the likelihood of a possible collision when the system and/or the driver (the significance of this important distinction has still to be determined and decided upon [98]) suddenly detect a steadfast obstacle, and when the only way to avoid a collision is to decelerate the vehicle using the brakes. We would like to emphasize that PPM should always be considered as a complement to computer simulations in various HITL and AD related problems. These two modeling approaches are usually based on different assumptions and use different evaluation techniques, and if the results obtained using them are in reasonably good agreement, there is reason to believe that the obtained data are sufficiently accurate and trustworthy.

It has been demonstrated, mostly in application to the aerospace domain, how PPM could be effectively employed when the reliability of the equipment (instrumentation), both its hardware and software, and human performance contribute jointly to the outcome of a vehicular mission or an extraordinary situation. One of the developed models, the convolution model, is brought here "down to earth", i.e., extended, with appropriate modifications, to the AD situation, when there is a need to avoid a collision. The automotive environment might be much less forgiving than the aircraft environment: while slight deviations in aircraft altitude, speed, or human actions are often tolerable without immediate consequences, a motor vehicle is likely to have much tighter control requirements for avoiding a collision. We would like to point out also that the driver of an AD vehicle should possess special "professional" qualities associated with his/her need to interact with an AD system. These qualities should be much higher and more specific than those of today's amateur driver.

The pre-deceleration time (which includes the decision-making time, the pre-braking time and, to some extent, also the brake-adjusting time) and the corresponding distance σ0, when compared with the deceleration time and distance σ1, characterize the role of the HCF in the extraordinary situation in question. Indeed, if this factor is large (the driver reacts fast and effectively), the ratio σ0/σ1 is small.

When a deterministic approach is used to quantify the role of the major factors affecting the safety of the outcome of a possible collision situation, when an obstacle is suddenly detected in front of the moving vehicle, the role of the HF could be quantified by the ratio S0/S1 of the pre-deceleration distance to the deceleration distance.

The pre-deceleration time, during which the vehicle keeps its constant initial speed, includes: 1) the decision-making time, i.e., the time that the system and/or the driver need to decide whether the driver has to intervene and take over control of the vehicle; 2) the pre-braking time that the driver needs to make his/her decision to apply the brakes; and 3) the brake-adjusting time needed to adjust the brakes when interacting with the vehicle's anti-lock (anti-skid) braking system. Although both the human and the vehicle performance affect this third period of time and the corresponding distance, it can be conservatively assumed that the brake-adjusting time is simply part of the pre-deceleration time. Thus, two major critical periods could be distinguished in an approximate PPM of a possible collision situation:

1) The pre-deceleration time, counted from the moment when the steadfast obstacle is detected until the moment when the vehicle starts to decelerate. This time depends on the driver's experience, age, fatigue and other relevant items of his/her HCF. It could be assumed that during this time the vehicle keeps moving at its initial speed V0, and that it is this time that characterizes the performance of the driver. If, e.g., the vehicle's initial speed is V0 = 10 m/s and the pre-deceleration time is T0 = 3.0 s, then the corresponding distance is S0 = V0T0 = 30 m.
2) The deceleration time, which, for a uniform deceleration from the initial speed V0 to a full stop, can be evaluated as T1 = 2S1/V0, where S1 is the deceleration distance; for S1 = 25 m, this gives T1 = 5.0 s.

The total stopping distance is therefore S = S0 + S1 = 55 m, so that the contributions of the two main constituents of this distance are comparable in this example. Note that, as it follows from the formula

In reality, none of the above times and corresponding distances is known, or could ever be evaluated, with sufficient certainty, and there is therefore an obvious incentive to employ a probabilistic approach to assess the likelihood of an accident. To some extent, our predictive model is similar to the convolution model applied in the helicopter-landing-ship situation [85], where, however, random times, and not random distances, were considered. If the probability

It is natural to assume that the random times T0 and T1, corresponding to the distances S0 and S1, are distributed in accordance with the Rayleigh law. Indeed, neither of these times can be zero, but neither can be very long either. In addition, in an emergency situation, short times are more likely than long ones, and because of that, their probability density functions should be heavily skewed toward short times. The Rayleigh distribution possesses these physically important properties and is accepted in our analysis. The probability PS that the sum s = s0 + s1 of the random variables S0 and S1 exceeds a certain level Ŝ is expressed by the distribution (A-1) in Appendix A, and the computed probabilities PS of collision are shown in Table 4. The calculated data indicate, in particular, that the probability of collision for the input data used in the above deterministic example, where the pre-deceleration distance was σ0 = S0 = 30 m, the deceleration distance was σ1 = S1 = 25 m, and the dimensionless parameters were

As is evident from Table 4, the probability of collision is considerably lower for larger available distances Ŝ. The calculated data clearly indicate that the available distance plays the major role in avoiding collision, while the HF is less important. It is noteworthy in this connection that the Rayleigh distribution is an extremely conservative one. Less conservative and, perhaps, more realistic data could be obtained by using, say, a Weibull distribution for the random times and distances.
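
The convolution result can be complemented by a computer simulation, in the spirit of the recommendation above to use PPM and simulation side by side. The sketch below is a Monte Carlo estimate under our own assumptions: T0 and T1 are independent Rayleigh variables, the pre-deceleration distance is S0 = V0T0 (constant speed), and the deceleration distance is S1 = V0T1/2 (uniform deceleration to rest). With V0 = 10 m/s and time modes of 3.0 s and 5.0 s, the distance modes are 30 m and 25 m, as in the deterministic example. The function names, sample size, seed and Ŝ values are hypothetical, and the printed estimates are not the Table 4 values.

```python
import math
import random


def rayleigh_sample(rng, mode):
    """One Rayleigh variate with the given mode (scale), via the inverse CDF."""
    u = rng.random()  # u in [0, 1), so 1 - u lies in (0, 1] and the log is finite
    return mode * math.sqrt(-2.0 * math.log(1.0 - u))


def simulate_stopping_distances(v0, t0_mode, t1_mode, n=100_000, seed=1):
    """Sample total stopping distances S = V0*T0 + V0*T1/2 (assumed kinematics)."""
    rng = random.Random(seed)
    return [
        v0 * rayleigh_sample(rng, t0_mode) + 0.5 * v0 * rayleigh_sample(rng, t1_mode)
        for _ in range(n)
    ]


def exceedance(samples, s_hat):
    """Fraction of sampled stopping distances exceeding the available distance s_hat."""
    return sum(s > s_hat for s in samples) / len(samples)


if __name__ == "__main__":
    V0, T0_MODE, T1_MODE = 10.0, 3.0, 5.0  # m/s, s, s
    print("deterministic S =", V0 * T0_MODE + 0.5 * V0 * T1_MODE, "m")
    samples = simulate_stopping_distances(V0, T0_MODE, T1_MODE)
    for s_hat in (55.0, 80.0, 110.0, 150.0):
        print(f"S_hat = {s_hat:5.1f} m  P_S ~ {exceedance(samples, s_hat):.4f}")
```

Replacing `rayleigh_sample` with a Weibull sampler (for instance, `rng.weibullvariate(scale, shape)` from the standard library) is one way to explore the less conservative alternative mentioned above.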

Note that the decrease in the probabilities of collision (which is, in our approach, the probability PS that the available distance Ŝ to the obstacle is exceeded) for high

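The Table 4 data are based on the convolution equation

$$P_S = 1 - \int_0^{\hat{S}} f(s_0)\left[\int_0^{\hat{S}-s_0} f(s_1)\,ds_1\right]ds_0$$

for the probability P_S of collision. The PDFs

$$f(s_{0,1}) = \frac{s_{0,1}}{\sigma_{0,1}^2}\exp\left(-\frac{s_{0,1}^2}{2\sigma_{0,1}^2}\right)$$

are the (Rayleigh) PDFs of the random variables S0 and S1, σ0,1 are the modes (most likely values) of these variables, and

$$\bar{s}_{0,1} = \sqrt{\frac{\pi}{2}}\,\sigma_{0,1}, \qquad \sqrt{D_{0,1}} = \sqrt{\frac{4-\pi}{2}}\,\sigma_{0,1}$$

are their means and standard deviations, respectively. For these PDFs, the convolution can be evaluated in closed form in terms of dimensionless parameters of the convolution of the two PDFs f(s0,1) and of the Laplace function (probability integral)

$$\Phi(x) = \frac{2}{\sqrt{\pi}}\int_0^x e^{-t^2}\,dt.$$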
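
The convolution equation for P_S can also be evaluated numerically, without the closed form. The sketch below assumes Rayleigh PDFs with modes σ0 = 30 m and σ1 = 25 m, as in the example above, uses the Rayleigh CDF to reduce the double integral to P_S = exp(-Ŝ²/2σ0²) + ∫0^Ŝ f(s0) exp[-(Ŝ - s0)²/2σ1²] ds0, and applies a composite Simpson rule. The function names and grid size are our own choices, and the sketch does not reproduce the closed-form expression (A-1) used for Table 4. Swapping σ0 and σ1 must leave P_S unchanged, which provides a convenient consistency check.

```python
import math


def rayleigh_pdf(s, mode):
    """Rayleigh PDF (s / sigma^2) exp(-s^2 / (2 sigma^2)); sigma is the mode."""
    if s < 0.0:
        return 0.0
    return s / mode**2 * math.exp(-s * s / (2.0 * mode**2))


def simpson(func, a, b, n=2000):
    """Composite Simpson's rule for func on [a, b] with an even number n of panels."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = func(a) + func(b)
    for i in range(1, n):
        total += (4.0 if i % 2 else 2.0) * func(a + i * h)
    return total * h / 3.0


def collision_probability(s_hat, sigma0, sigma1):
    """P_S = P(S0 + S1 > s_hat) for independent Rayleigh S0, S1 with modes sigma0, sigma1."""
    if s_hat <= 0.0:
        return 1.0
    # 1 - F0(s_hat): S0 alone already exceeds s_hat
    tail0 = math.exp(-s_hat**2 / (2.0 * sigma0**2))

    def integrand(s0):
        # f0(s0) * P(S1 > s_hat - s0)
        return rayleigh_pdf(s0, sigma0) * math.exp(-((s_hat - s0) ** 2) / (2.0 * sigma1**2))

    return tail0 + simpson(integrand, 0.0, s_hat)


if __name__ == "__main__":
    for s_hat in (55.0, 80.0, 110.0, 150.0):
        print(f"S_hat = {s_hat:5.1f} m  P_S = {collision_probability(s_hat, 30.0, 25.0):.4f}")
```

A Monte Carlo simulation of the same Rayleigh model offers an independent cross-check of these numbers, since the two approaches rest on different evaluation techniques.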