Pilot Test of an Evidence-Based Clinical Intervention to Reduce Rural Preterm Births using Mobile Technology and Community Health Worker Support

Abstract

Preterm birth is a priority health problem in the U.S. One in ten babies in the U.S. is born before 37 weeks' gestation, which can result in lengthy hospitalizations and sometimes lifelong health problems. Obtaining adequate prenatal care is essential for reducing prematurity. However, access to primary care is a challenge for many American women who reside in rural communities, where there are significant provider shortages, long travel distances, and limited public health support services. Mobile technology and community health workers (CHW) are two resources that have shown potential for reducing these rural access barriers. The purpose of this study was to pilot test an evidence-based clinical intervention using concierge mobile technology with CHW reinforcement to reduce preterm birth. The study objectives were to examine the intervention's feasibility, preliminary effectiveness, and costs in preparation for a larger study. The study used a two-group quasi-experimental design. Participants (N = 114) were recruited from a rural Midwestern state and remained in the study from enrollment through 36 weeks' gestation. Intervention participants received usual medical care plus a smart phone loaded with concierge mobile technology, as well as weekly CHW contact. Control participants received usual medical care and a prenatal information packet. Results showed the intervention was feasible based on acceptance and fidelity. It also showed potential for leveraging patient activation. Return on investment analysis showed the intervention was cost-effective. Although those in the intervention group had improved birth outcomes, the differences from controls were not statistically significant. Enrollment and attrition were challenges, and the authors make recommendations to improve these processes for a larger study.

Introduction

Prematurity exacts a significant social and economic toll in the U.S. and is a priority public health problem [1]. Each year, 1 in 10 babies is born prematurely, before 37 weeks' gestation [2,3]. Premature and low birth weight infants can experience complicated health problems that often require lengthy hospitalizations costing more than 10 times as much as care for uncomplicated newborns [4]. Preterm birth can also result in lifelong problems such as intellectual disabilities, learning delays, vision or hearing loss, behavior problems, and neurological disorders [5].

Background

An essential element in reducing preterm birth is ensuring adequate prenatal health care. For women living in rural communities, accessing early and regular prenatal care can be challenging because of provider shortages (i.e., physicians, nurse practitioners, physician assistants), long travel distances, and limited public health or support services [6,7]. Mobile technology and community health workers (CHW) are two resources that show potential for reducing rural access barriers by extending provider outreach across distances and enhancing patient communication and education [8-11].

Mobile technology has been used successfully as a clinical intervention for weight loss, sexual health promotion, and smoking cessation. There are also publicly available prenatal healthcare websites (e.g., Text4baby) that provide general information. Reports indicate that women who access prenatal websites may feel more confident in their preparation for motherhood [12-14]. Mobile technology is widely available: more than 67% of rural Americans own smart phones [15,16], and 62% use their phones to get information about health conditions [17]. However, not all mobile technology users are well-informed about how to access evidence-based health information [18].

CHWs are another emerging healthcare resource for underserved and rural communities. CHWs typically are indigenous to the community, require minimal training, can provide culturally appropriate health counseling and education, and help patients connect with health services or providers, as well as with necessary social support services [9,19-21].

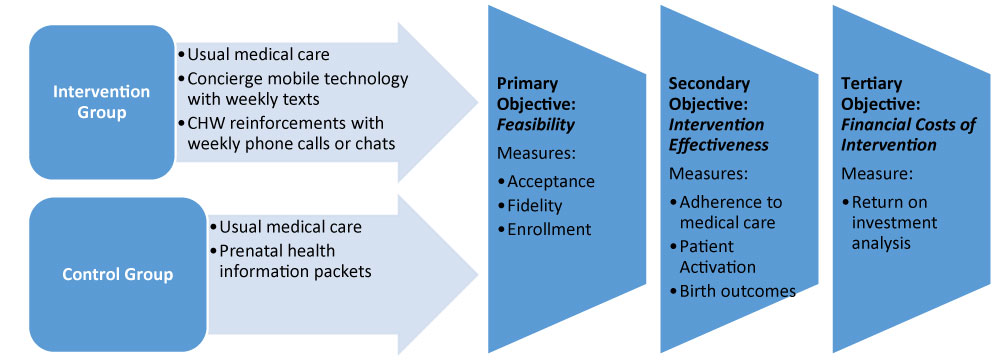

This article describes the pilot/feasibility testing of an evidence-based clinical intervention using concierge mobile technology and CHW reinforcement to reduce rural preterm births. The conceptual framework was based on Social Cognitive Theory [22], which posits that improved knowledge and access to information can change one's health beliefs and behaviors. Consistent with the purpose of pilot/feasibility studies, our primary objective was to test the processes needed for a larger study, based on intervention acceptance, enrollment, and fidelity [23-26]. Our secondary objective was to explore preliminary intervention effectiveness as measured by adherence to medical care, patient activation, and birth outcomes (i.e., gestational age and birth weight). The third objective was to examine the financial costs of the intervention through 30 days post-delivery using return on investment analysis.

Materials and Methods

Design, Sample, and Procedures

The study was approved by the institutional review board of an academic medical center. This 15-month pilot feasibility study used a two-group quasi-experimental design (Figure 1). Participants remained in the study from enrollment to 36 weeks' gestation. Intervention participants received usual medical care plus a smart phone pre-loaded with a HIPAA-compliant concierge messaging platform. Concierge aspects of the mobile technology included messaging personalized to each participant based on her name, trimester, assessed health risks (i.e., smoking, alcohol or substance use, and BMI), and preferred language (English or Spanish). Weekly text messages contained evidence-based prenatal self-care information and deep-dive hyperlinks. The bilingual CHW contacted participants weekly via short message service (SMS) and/or voice phone for appointment reminders, social service assistance, and to discuss weekly text messages or answer questions. Control participants received usual medical care and prenatal informational packets.

The setting for this study was two rural counties in Nebraska, a predominantly rural Midwestern state in the U.S. We enrolled 114 participants over a nine-month period. The sample size was based on the pragmatics of recruitment (e.g., budgetary constraints, patient flow) and the requirements for examining feasibility [25]. Clinic nurses from five rural primary care clinics and two rural community service agencies screened patients for eligibility and referred them to the study coordinator. Inclusion criteria were being in the 1st or 2nd trimester of pregnancy, speaking Spanish or English, and having an assigned primary care provider. Patients requiring more than usual medical care (i.e., chronic hypertension, heart disease, uncontrolled diabetes) or significantly predisposed to preterm birth (i.e., multiple fetuses or history of preterm birth) were excluded. Within a few days of the clinic visit, the CHW phoned eligible patients who had been referred to the study to schedule a home visit for consent and enrollment. The quasi-experimental design did not involve randomization because of time limitations on the mobile technology contract. We therefore recruited intervention participants first to ensure they completed the intervention before the service ended.

Measures

We collected demographics, health risk data (smoking, alcohol/substance use, BMI) and insurance data at the time of enrollment. For objective one (i.e., feasibility), we administered the Client Satisfaction Questionnaire (CSQ-8) [27] at 36 weeks to measure intervention acceptance. We examined enrollment by measuring recruitment efficiency and attrition. Intervention fidelity was measured by the total text message hyperlink hits and total phone contacts or chats with the CHW. These data were collected through our mobile technology vendor's internal database analytics.

For study objective two (i.e., intervention effectiveness), we used the ratio of kept-to-scheduled appointments to measure adherence to medical care. These data were collected from participants' clinic records. We administered the Patient Activation Measure (PAM) [28] at 36 weeks using a retrospective pretest design to avoid response-shift bias [29-31]. Birth outcomes, preterm birth (< 37 weeks) and low birth weight (< 2500 grams), were obtained from hospital delivery notes.
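To make the outcome definitions concrete, here is a minimal Python sketch that codes a single participant's record using the thresholds stated above (preterm below 37 weeks, low birth weight below 2500 grams) and the kept-to-scheduled adherence ratio. The `Participant` record and its field names are hypothetical; the layout of the study's clinic and hospital data is not described.

```python
from dataclasses import dataclass

# Thresholds as stated in the Measures section.
PRETERM_WEEKS = 37          # preterm: delivery before 37 weeks' gestation
LOW_BIRTH_WEIGHT_G = 2500   # low birth weight: under 2500 grams


@dataclass
class Participant:
    # Hypothetical record layout; field names are illustrative only.
    kept_appointments: int
    scheduled_appointments: int
    gestation_weeks: float
    birth_weight_g: float


def adherence_ratio(p: Participant) -> float:
    """Ratio of kept-to-scheduled prenatal appointments."""
    if p.scheduled_appointments == 0:
        raise ValueError("participant has no scheduled appointments")
    return p.kept_appointments / p.scheduled_appointments


def is_preterm(p: Participant) -> bool:
    """True if delivery occurred before 37 weeks."""
    return p.gestation_weeks < PRETERM_WEEKS


def is_low_birth_weight(p: Participant) -> bool:
    """True if birth weight is under 2500 grams."""
    return p.birth_weight_g < LOW_BIRTH_WEIGHT_G


if __name__ == "__main__":
    example = Participant(kept_appointments=9, scheduled_appointments=10,
                          gestation_weeks=38.5, birth_weight_g=3200)
    print(adherence_ratio(example), is_preterm(example), is_low_birth_weight(example))
```

Both thresholds are strict inequalities, so a delivery at exactly 37.0 weeks or a birth weight of exactly 2500 grams is coded as term and normal weight.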

For study objective three (financial costs), we used return on investment analysis. Mother and infant total charges were collected from hospital billing records and financial departments. Data also included primary payer, primary payment, secondary payer, secondary payment, patient payments, primary diagnosis, and diagnosis description.

Data Analysis

Chi-square (χ²) and likelihood ratio tests (LRT) were used to describe group differences in demographics, health risks, and insurance. T-tests analyzed differences in BMI, age, and weeks pregnant. Mann-Whitney U tests compared birth weight and gestational weeks between groups. The LRT tested group differences in 1) low versus normal birth weight and 2) preterm versus full-term gestation. ROI related intervention costs to expected monetary benefits (the difference in average inpatient costs between groups, estimated from hospital charge data). Intervention costs included technology service ($3.00/participant/month), CHW time/salary ($251.25/participant), and smart phone use ($20.00/participant/month).
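The text does not say which software or exact formulation was used for the LRT. One common form for a 2x2 table (e.g., group by preterm versus full term) is the G-test, G = 2 Σ O ln(O/E), referred to a chi-square distribution with one degree of freedom. The stdlib-only Python sketch below assumes that formulation; the function name is hypothetical, and the counts in the usage line are made up for illustration, not study data.

```python
import math


def likelihood_ratio_test_2x2(table):
    """G-test (likelihood ratio chi-square) for a 2x2 contingency table.

    table: [[a, b], [c, d]] with rows = study groups and
    columns = outcome categories (e.g., preterm, full term).
    Returns (G statistic, p-value with 1 degree of freedom).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    g = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of group and outcome.
            expected = row_totals[i] * col_totals[j] / n
            if observed > 0:  # by convention, 0 * ln(0) contributes 0
                g += observed * math.log(observed / expected)
    g *= 2.0
    # For 1 df, P(chi-square > g) equals erfc(sqrt(g / 2)).
    p_value = math.erfc(math.sqrt(g / 2.0))
    return g, p_value


if __name__ == "__main__":
    # Hypothetical counts: [[preterm, full term] intervention, [...] control]
    g, p = likelihood_ratio_test_2x2([[4, 46], [7, 43]])
    print(f"G = {g:.3f}, p = {p:.3f}")
```

The closed form for the p-value works only because a 2x2 table has one degree of freedom; larger tables would need a general chi-square survival function.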

Results

Of the 114 consented participants, 37 had missing birth outcome data, leaving a final sample of N = 77 (intervention n = 40/68 [59%]; control n = 37/46 [80%]). Overall, the final sample was mostly white (97.3%), married with spouse present (62.7%), insured (51.3% private, 39.5% government), and employed (50% full-time, 13.2% part-time). In addition, 34.7% had more than a high school diploma, and 42.5% were of Hispanic/Latino ethnicity. Most were non-smokers (94.7%), and only one participant self-reported 30-day alcohol use. None reported 30-day substance use, but one tested positive on a urine drug screen. Overall, 32.9% were overweight and 25.8% were categorized as obese to morbidly obese. The groups differed on only two variables: controls were older (M = 30.1 vs. 27.3 years for intervention, p = 0.018) and enrolled earlier in their gestation (M = 13.7 vs. 16.5 weeks for intervention, p = 0.019).

Objective One: Intervention Feasibility

Acceptance: Intervention participants had higher CSQ-8 scores (M = 3.59, SD = 0.3 vs. control M = 3.22, SD = 0.7), but the difference was not statistically significant. Intervention participants valued the regular, personalized contact through CHW texts and phone calls (e.g., "...personalized text messages were very helpful and I learned so much", "I liked how easy it was and to be reminded [about appointments and my health] every week").

Study enrollment

Enrollment was hindered by delays following referral. It generally took 2 to 3 days for the CHW to make successful phone contact and schedule the home visit for consent and enrollment, mostly because of incorrect phone numbers or disconnected phones. When home visits were successfully scheduled, it was not uncommon for patients to forget the appointment (and request rescheduling) or to be no-shows. None of the referred minors agreed to obtain parental consent, and thus they could not be enrolled in the study. A few undocumented Hispanic and Sudanese referrals could not be enrolled because of reading barriers.

Attrition due to missing birth outcome data left 77 of 114 participants in the final sample (intervention n = 40/68; control n = 37/46). Many participants moved or relocated without notification; however, attrition did not bias statistical findings. Study completers (i.e., participants with birth outcome data) did not differ in attrition from non-completers (i.e., participants with missing data) (control M = 21.3%; intervention M = 23.1%; p = 0.83). Completers and non-completers were similar on 12 of 15 demographic variables; the exceptions were insurance (non-completers were significantly more likely to be uninsured or on government insurance, p = 0.009), risk behaviors (non-completers were significantly more likely to smoke, p = 0.013), and race/ethnicity (non-completers were significantly more likely to be Hispanic/Latino, p = 0.006).

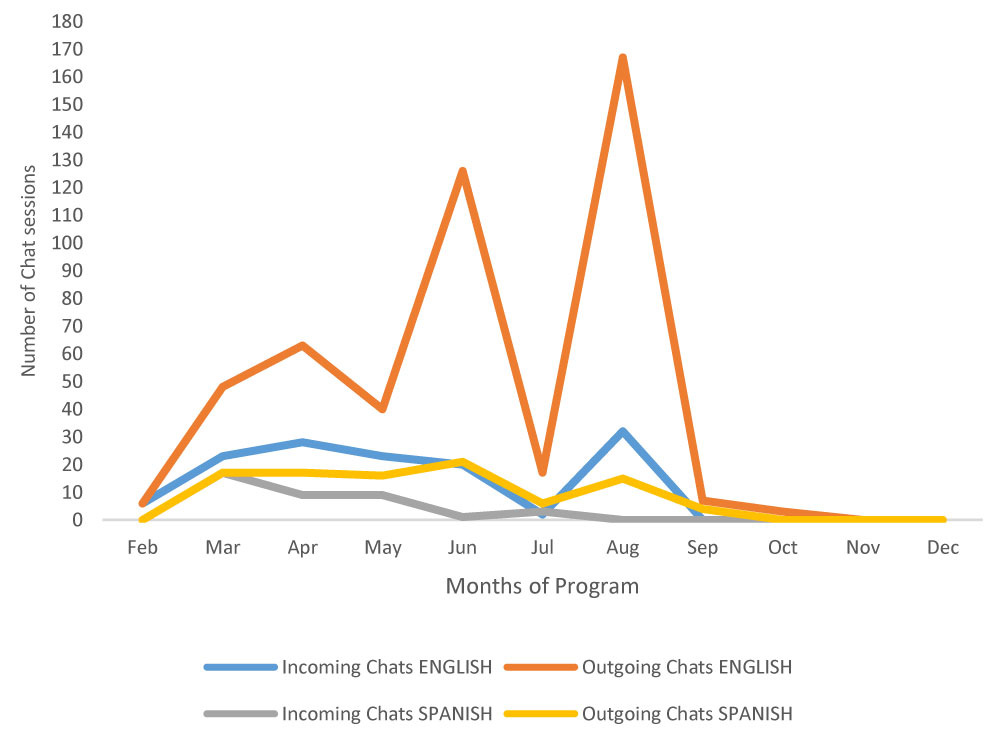

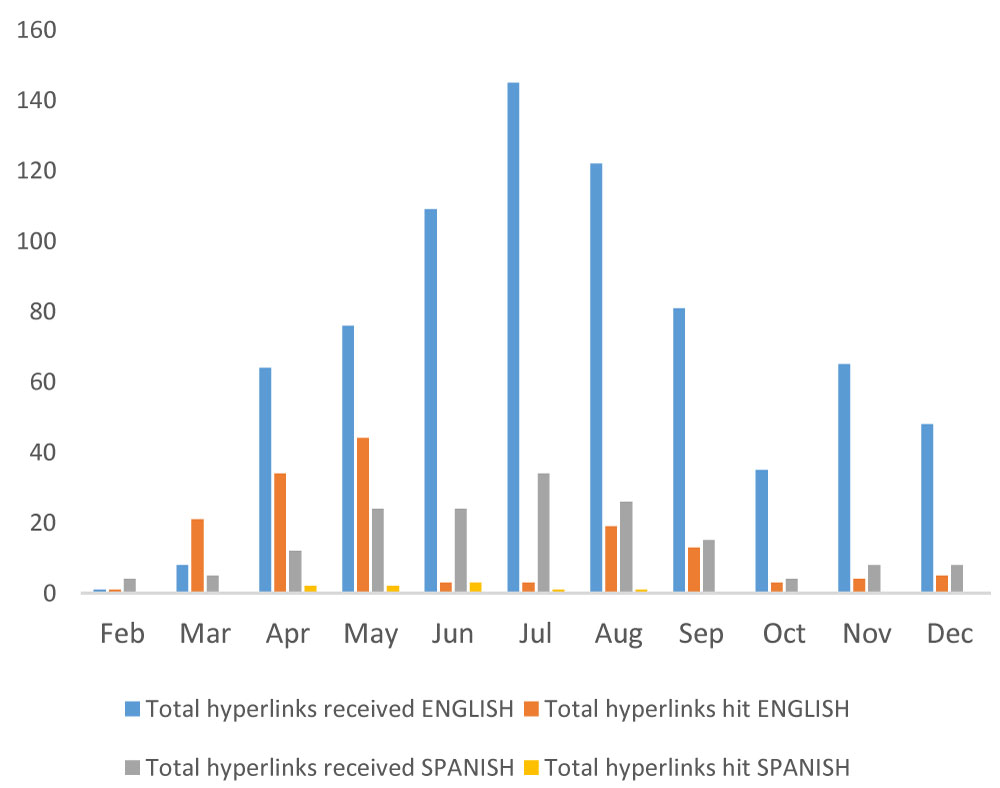

Fidelity

Intervention participants frequently clicked hyperlinks and used the chat feature, especially during the first months after enrollment (Figure 2 and Figure 3), and English-only speaking participants were more likely to click the hyperlinks. The CHW made frequent telephone calls (n = 210) to the 36 participants, and the majority (n = 203 calls) involved medical appointment reminders, preparing participants for office visits, and referrals for social support services. The average number of calls was 5.83 per participant (range 1 to 10).
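As an arithmetic check, 210 calls across 36 participants gives 210/36 ≈ 5.83 calls per participant, matching the reported mean. If the vendor's analytics could be exported as one entry per completed call, the per-participant summary could be computed as in the Python sketch below. The export format (a flat list of participant IDs) and the function name are hypothetical assumptions, not the vendor's actual schema.

```python
from collections import Counter
from statistics import mean


def call_summary(call_log):
    """Summarize CHW phone contacts per participant.

    call_log: iterable of participant IDs, one entry per completed call
    (hypothetical export format). Only participants with at least one
    call appear in the log, so they are the ones counted as reached.
    Returns (total calls, participants reached, mean, min, max calls).
    """
    per_participant = Counter(call_log)
    counts = list(per_participant.values())
    if not counts:
        raise ValueError("call log is empty")
    return sum(counts), len(counts), mean(counts), min(counts), max(counts)


if __name__ == "__main__":
    # Hypothetical log for three participants.
    log = ["p01", "p01", "p02", "p03", "p03", "p03"]
    print(call_summary(log))
    # Check of the reported mean: 210 calls over 36 participants.
    print(round(210 / 36, 2))
```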

Objective Two: Preliminary Intervention Effectiveness

Birth outcomes

Intervention participants had more full-term deliveries, longer gestations, and more normal birth weight infants than did the controls, but the differences were not statistically significant (Table 1).

Patient activation

PAM scores increased significantly in both groups (p < 0.001). The increase was larger among intervention participants, but the between-group difference was not statistically significant (Table 1). Individual item analysis of the PAM showed that the largest improvements for intervention participants were in confidence regarding 1) knowing when to appropriately seek medical care (item 5) and 2) being able to figure out solutions when new health problems arise (item 12).

Adherence to medical care

Intervention participants had fewer kept appointments than controls, but the difference was not statistically significant (Table 1).

Objective Three: Financial Analysis

Financial analysis was conducted from the providers' perspective. Program costs included technology service, the CHW, and provision of a smart phone. The per member per month (PMPM) cost of the technology varied with the enabled features of the service and the size of the user base. For this study, we used a PMPM of $3.00, which included the ability to chat within the technology app. The CHW received two weeks of training and allocated 0.3 FTE of time to patient management. Based on CHW compensation, we calculated these costs to be $251.25 per intervention participant. The average cost of providing a smart phone to participants was $20 per month.
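The per-participant cost arithmetic can be sketched in Python as follows. The unit costs come from the text; `months_enrolled` is an input the paper does not report (enrollment length varied with gestational age at entry), and the function name is hypothetical. For instance, nine months at the $3.00 PMPM rate would give $27, the same figure used for Option A, although the text does not state how that figure was derived.

```python
# Unit costs as reported in the Financial Analysis section.
TECH_PMPM = 3.00              # technology service with chat, $ per participant per month
PHONE_PER_MONTH = 20.00       # average smart phone cost, $ per participant per month
CHW_PER_PARTICIPANT = 251.25  # CHW training and patient management, $ per participant


def intervention_cost(months_enrolled: float,
                      include_chw: bool = True,
                      include_phone: bool = True) -> float:
    """Per-participant intervention cost for an assumed enrollment length.

    months_enrolled is an assumption supplied by the caller; it is not
    reported in the paper.
    """
    cost = TECH_PMPM * months_enrolled
    if include_phone:
        cost += PHONE_PER_MONTH * months_enrolled
    if include_chw:
        cost += CHW_PER_PARTICIPANT
    return cost


if __name__ == "__main__":
    # Technology service only, for an assumed nine months.
    print(intervention_cost(9, include_chw=False, include_phone=False))
    # All three cost items, for an assumed five months.
    print(intervention_cost(5))
```

Keeping each cost item behind a flag mirrors the idea of counting different cost items toward the intervention, as in the ROI options described next.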

Return on investment (ROI) analysis was stratified by two plausible options. Option A assumed the only intervention cost was mobile technology, calculated at $27 per participant. Option B incorporated both CHW training and patient management costs ($251.25 per participant) and the mobile technology service cost. Note that the intervention itself is the same under both options; only the cost items counted toward the intervention relative to usual care differ. For example, Option A implicitly assumes that the intervention group will receive CHW support, but this is not included as a separate cost item because the provider may not need to allocate new FTE for a CHW to carry out the intervention. In contrast, Option B assumes that the provider will need to hire or reallocate FTE for a CHW to undergo the training and carry out patient management activities. ROI is the anticipated monetary benefit net of program cost, expressed relative to program cost. We based the program's monetary benefit on inpatient treatment cost savings between the intervention and control groups. Inpatient costs were estimated using charges collected from hospital partners. The minimum acceptable ROI is determined by the expected ROI from the next best alternative use of program funds (i.e., the opportunity cost rate of funding the program). For this analysis, we assumed an opportunity cost rate of 5%; however, in practice, this rate will vary across organizations.
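Written as a formula, the ROI definition and decision rule described above are as follows. The symbols are notation introduced here for clarity: B is the anticipated monetary benefit per participant (inpatient cost savings relative to controls), C is the program cost per participant counted under Option A or Option B, and r is the opportunity cost rate (assumed to be 0.05 in this analysis).

```latex
\mathrm{ROI} = \frac{B - C}{C},
\qquad
\text{the program is acceptable if } \mathrm{ROI} > r, \quad r = 0.05
```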

We calculated average differences in inpatient costs between the intervention and control groups, as well as cost differences after adjusting for outliers or selected patient characteristics (note: the intervention group had more C-sections [n = 13 vs. n = 9 for controls] and one infant admitted to intensive care for three days). Excluding outlier patients (highest 5% of charges), the average difference in costs between the intervention and control groups was $1,547. This decreased to $834 after adjusting for age, primary payer (Medicaid, commercial insurance, self-pay), and C-section. We therefore estimated ROI across this range of cost savings (i.e., $834 to $1,547), which shows how sensitive ROI is to differences in program healthcare cost savings. Because charges may vary across hospital providers, we also examined cost savings for a single delivery site and found a 20.6% lower average cost for intervention participants than for control participants. However, these results were not statistically significant because of small sample sizes in the stratified analyses.
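The sensitivity of ROI to the savings range can be sketched numerically. This Python example applies ROI = (benefit - cost) / cost at both ends of the reported savings range, treating the average cost difference as the per-participant benefit (an assumption). The Option A cost ($27) is from the text; the Option B cost of $278.25 ($27 plus the $251.25 CHW cost) is an illustrative assumption, and the printed values are not the figures in Table 2. Under either reading of the Option B cost ($251.25 alone or $278.25), ROI remains well above the 5% opportunity cost rate at both ends of the range.

```python
OPPORTUNITY_COST_RATE = 0.05  # minimum acceptable ROI assumed in the analysis

# Per-participant inpatient cost savings range reported in the text.
SAVINGS_RANGE = (834.0, 1547.0)

# Per-participant program costs. Option A ($27) is reported; the Option B
# total ($27 technology + $251.25 CHW) is an illustrative assumption.
PROGRAM_COST = {"A": 27.0, "B": 27.0 + 251.25}


def roi(benefit: float, cost: float) -> float:
    """Monetary benefit net of program cost, relative to program cost."""
    return (benefit - cost) / cost


def sensitivity_table():
    """ROI for each option at each end of the savings range."""
    rows = []
    for option, cost in PROGRAM_COST.items():
        for savings in SAVINGS_RANGE:
            value = roi(savings, cost)
            rows.append((option, savings, round(value, 2),
                         value > OPPORTUNITY_COST_RATE))
    return rows


if __name__ == "__main__":
    for row in sensitivity_table():
        print(row)
```

Evaluating only the two endpoints is enough here because ROI increases linearly with the benefit for a fixed cost.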

Table 2 shows that returns are highest for Option A because of the low PMPM cost of providing mobile technology service with chat enabled. However, relative to an opportunity cost rate of 5%, the program is cost-effective under both options.

Discussion

Pilot results showed that concierge mobile technology with CHW support can promote prenatal self-care among rural women. Intervention satisfaction and fidelity were high, and the intervention appears to be cost-effective. Patient activation was improved, indicating that the intervention can enhance self-care confidence and behaviors through improved knowledge. This is consistent with recent research showing mobile phones to be an emerging health technology that can positively modify health behaviors [8,10,32].

Our feasibility findings contribute to a better understanding of intervention delivery and sampling issues, as well as the effectiveness of the intervention among rural populations, all of which are essential for larger-scale studies [23,26]. Enrollment challenges could be addressed in a larger study by having the CHW meet with eligible patients at the clinic immediately following their visit. Office nurses said they were too busy and needed quicker room turnover, which precluded them from conducting consent and enrollment. Thus, providing a separate space at the office where the CHW could consent and enroll patients would improve the process. This may also help in recruiting a more at-risk population (e.g., undocumented women, uninsured women, single mothers), which can be difficult to reach. Attrition due to missing data was another challenge. We lost contact with a large percentage of our enrolled participants and believe this was related to the transient nature of the sample and the use of dedicated smart phones for the study. Non-completers (i.e., those with missing data) were at higher risk (i.e., Hispanic, uninsured or on government insurance, and smokers), and we lost contact with this group because they moved, changed providers, or stopped answering their dedicated phones. A larger study would only need to provide smart phones to participants who need one. Indeed, we learned during the course of the study that 86% of our sample already owned a smart phone. Requiring participants to carry two phones (their own and a study phone) was cumbersome, and many reported forgetting to carry the study phone and/or check its messages. The loss of five study phones was associated with attrition. Finally, we learned at study conclusion that some of our clinic partners had begun implementing more diligent follow-up protocols with all of their patients, including many of our control participants. This was a positive practice change for the clinics, but it had unintended effects on the study, most likely through the control group, which was recruited last.

Additional research is needed with a more at-risk population (e.g., minors, undocumented women) who could benefit from the intervention, and with a larger sample, to draw conclusions about intervention effectiveness and financial implications compared with usual prenatal care. It is especially important to examine patient activation as an outcome because improved PAM scores are a reliable predictor of health care service utilization and health behaviors up to four years later [33-35]. Notably, the strongest relationship between age and activation improvements is among those under 40 years of age [34].

Acknowledgments

This study was supported by a grant from Blue Cross Blue Shield Nebraska, Fund for Health Quality. The project was also supported by the National Institute of General Medical Sciences, 1U54GM115458-01. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH.

The authors wish to acknowledge the Central Nebraska Prenatal Advisory Board members for their collaboration on this community-based participatory pilot study by assisting with data collection, analysis, and dissemination.

References

- Centers for Disease Control (2016) Premature birth.

- American College of Obstetricians and Gynecologists (2013) Preterm (premature) labor and birth.

- March of Dimes (2016) 2016 premature birth report cards.

- March of Dimes (2008) The costs of prematurity to U.S. employers.

- March of Dimes (2017) Long-term health effects of premature birth.

- National Conference of State Legislatures (2016) Meeting the primary care needs of rural America: Examining the role of non-physician providers.

- Van Vleet A, Paradise J (2016) Kaiser Family Foundation: Tapping nurse practitioners to meet rising demand for primary care.

- Abroms LC, Whittaker R, Free C, et al. (2015) Developing and pretesting a text messaging program for health behavior change: Recommended steps. JMIR mHealth and uHealth 3: e107.

- Rosenthal E, Wiggins N, Brownstein J, et al. (1998) The final report of the national community health advisor study.

- Singh K, Drouin K, Newmark L, et al. (2016) Developing a framework for evaluating the patient engagement, quality, and safety of mobile health applications. Commonwealth Fund 5: 1-11.

- Thirumurthy H, Lester R (2012) M-health for health behaviour change in resource-limited settings: Applications to HIV care and beyond. Bull World Health Organ 90: 390-392.

- Zach L, Dalrymple PW, Rogers ML, et al. (2011) Assessing internet access and use in a medically underserved population: Implications for providing enhanced health information services. Health Info Libr J 29: 61-71.

- Gazmararian JA, Elon L, Yang B, et al. (2014) Text4baby program: An opportunity to reach underserved pregnant and postpartum women. Matern Child Health J 18: 223-232.

- Green MK, Dalrymple PW, Turner KH, et al. (2013) An enhanced text4baby program: Capturing teachable moments throughout pregnancy. J Pediatr Nurs 28: 92-94.

- Pew Research Center (2016) Mobile fact sheet.

- Sterling G (2016) Smartphone ownership just shy of 80 percent.

- Smith A (2015b) U.S. smartphone use in 2015. Pew Research Center.

- Smith A (2015a) Chapter one: A portrait of smartphone ownership.

- American Public Health Association (2016) Community health workers and the American Public Health Association.

- Hostetter J, Klein S (2015) Integrating community health workers into care teams.

- Kunz S, Ingram M, Piper R, et al. (2017) Rural collaborative model for diabetes prevention and management: A case study. Health Promotion Practice.

- Bandura A (2004) Swimming against the mainstream: The early years from chilly tributary to transformative mainstream. Behav Res Ther 42: 613-630.

- Conn VS, Algase DL, Rawl SM, et al. (2010) Publishing pilot intervention work. Western Journal of Nursing Research 32: 994-1010.

- Eldridge SM, Chan CL, Campbell MJ, et al. (2016) CONSORT 2010 statement: Extension to randomised pilot and feasibility trials. BMJ 355: i5239.

- Leon AC, Davis LL, Kraemer HC (2011) The role and interpretation of pilot studies in clinical research. J Psychiatr Res 45: 626-629.

- Thabane L, Ma J, Chu R, et al. (2010) A tutorial on pilot studies: The what, why and how. BMC Med Res Methodol 10: 1.

- Attkisson C, Greenfield TK (1996) The client satisfaction questionnaire (CSQ) scales and the service satisfaction scale-30 (SSS-30). In: Sederer LI, Dickey B, Outcome assessment in clinical practice, 120-127.

- Hibbard JH, Stockard J, Mahoney ER, et al. (2004) Development of the patient activation measure (PAM): Conceptualizing and measuring activation in patients and consumers. Health Services Research 39: 1005-1026.

- Howard G, Ralph K, Gulanick N, et al. (1979) Internal invalidity in pretest-posttest self-report evaluations and a re-evaluation of retrospective pretests. Applied Psychological Measurement 3: 1-23.

- Pratt C, McGuigan W, Katzev A (2000) Measuring program outcomes: Using retrospective pretest methodology. American Journal of Evaluation 21: 341-350.

- Sibthorp J, Paisley K, Gookin J, et al. (2007) Addressing response-shift bias: Retrospective pretests in recreation research and evaluation. Journal of Leisure Research 39: 295-315.

- Muench F, Baumel A (2017) More than a text message: Dismantling digital triggers to curate behavior change in patient-centered health interventions. J Med Internet Res 19: e147.

- Greene J, Hibbard JH (2012) Why does patient activation matter? An examination of the relationships between patient activation and health-related outcomes. J Gen Intern Med 27: 520-526.

- Hibbard JH, Greene J, Shi Y, et al. (2015) Taking the long view: How well do patient activation scores predict outcomes four years later? Med Care Res Rev 72: 324-337.

- Hibbard JH, Greene J, Sacks R, et al. (2016) Adding a measure of patient self-management capability to risk assessment can improve prediction of high costs. Health Affairs 35: 489-494.

Corresponding Author

Mary E Cramer, PhD, RN, FAAN, Professor, University of Nebraska Medical Center College of Nursing, 4101 Dewey Avenue, Omaha, NE, 68198, USA, Tel: +1(402)-559-6617.

Copyright

© 2019 Cramer ME, et al. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.